Google is on a quest to give AI a body and, in doing so, might also do the reverse, i.e. figure out the perfect brain for every robot out there, writes Satyen K. Bordoloi

I remember when Google was just another scrappy startup, rejected by Yahoo and struggling to find its footing. No one could have predicted that this little company would go on to reshape our entire digital universe, pioneering page ranking, navigation, translation, and eventually the planet-altering transformers paper that gave birth to generative AI. Google has been the main author of our digital lives and existence. And now, not content with being masters of the digital domain, they’re setting their sights on something even more ambitious – giving AI a physical form.

AI today is like a mind trapped in a hermetically sealed jar: it can process text, images, and audio and even understand physical spaces, but it cannot interact with or alter the world around it. It’s like a brain without hands, eyes without the ability to pick up what they see, intelligence that can comprehend everything but change nothing. That is exactly the ambitious ‘problem’ Google DeepMind wants to solve with its latest breakthrough: “two new AI models based on Gemini 2.0 which lay the foundation for a new generation of helpful robots.” The goal is simple yet audacious: bring AI into the physical world.

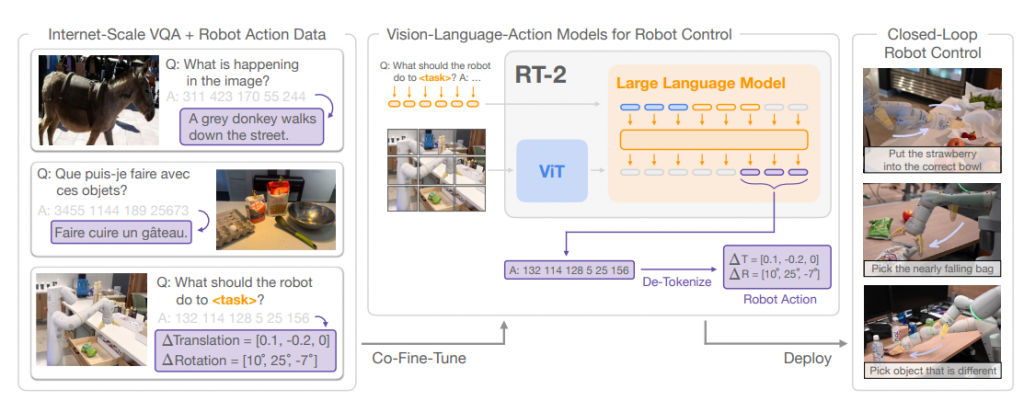

Vision Language Action Models: A Communication Revolution: Remember the term Vision Language Action Models (VLAs) because, I suspect, you’ll hear a lot about it. Just like the idea of ‘transformers’ that quietly revolutionised AI by birthing Generative AI, VLAs, first introduced in a 2023 Google paper, could be the next game-changing concept in robotics.

Traditionally, Generative AI and voice-command systems first transcribe speech into text and then use an LLM to understand it. Lost in so much translation are nuances, context, and the beautiful complexity of human communication. VLAs flip this entire approach by directly trying to understand speech. This means capturing those changes in intonation, the voice modulations that add layers of meaning beyond mere words.

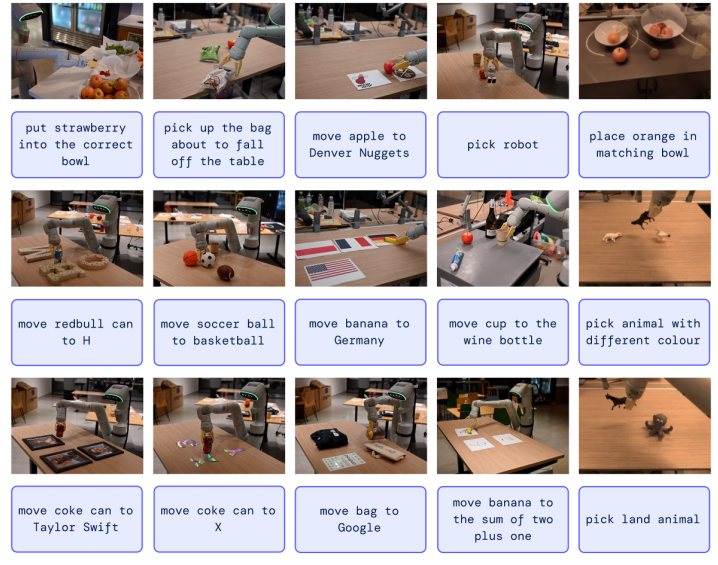

This enables VLAs to be multimodal magicians that can process data across text, image, and speech inputs, ultimately aligning them to complete a given task. Research has shown that VLAs significantly outperform traditional speech recognition systems, especially in complex, customised tasks for robots.

Google’s Robotic Pioneers: On March 12, Google unveiled two AI models that operate on VLA principles.

- Gemini Robotics: An advanced VLA built on the Gemini 2.0 LLM, with a twist – it adds physical action as a new output mode to control robots directly.

- Gemini Robotics-ER (Embodied Reasoning): Another Gemini model with advanced spatial understanding that takes robotics to the next level.
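
For the technically curious, here is a toy Python sketch of what “physical action as a new output mode” could look like. Everything in it is hypothetical and invented for illustration: the `Observation` and `Action` types, the `toy_vla_policy` function and its crude keyword rule are assumptions, not Gemini Robotics’ actual interface. A real VLA would replace the hand-written rule with a learned model working on raw camera images.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Observation:
    """What the robot perceives (hypothetical): visible objects and a text instruction."""
    objects: Dict[str, Tuple[float, float]]  # object name -> (x, y) position
    instruction: str


@dataclass
class Action:
    """A low-level command (hypothetical): move the gripper to (x, y), optionally close it."""
    x: float
    y: float
    close_gripper: bool


def toy_vla_policy(obs: Observation) -> Optional[Action]:
    """Map vision + language straight to an action.

    A hand-written stand-in for a learned model: find the first visible
    object named in the instruction and move the gripper to it.
    """
    text = obs.instruction.lower()
    for name, (x, y) in obs.objects.items():
        if name in text:
            # Close the gripper only if the instruction asks to pick something up.
            return Action(x=x, y=y, close_gripper="pick" in text)
    return None  # nothing mentioned in the instruction is visible


if __name__ == "__main__":
    obs = Observation(
        objects={"spatula": (0.4, 0.2), "cup": (0.1, 0.7)},
        instruction="Pick up the spatula",
    )
    print(toy_vla_policy(obs))
```

The point of the sketch is the shape of the function, not its contents: what goes in is a scene plus an instruction, and what comes out is a motor command rather than a sentence.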

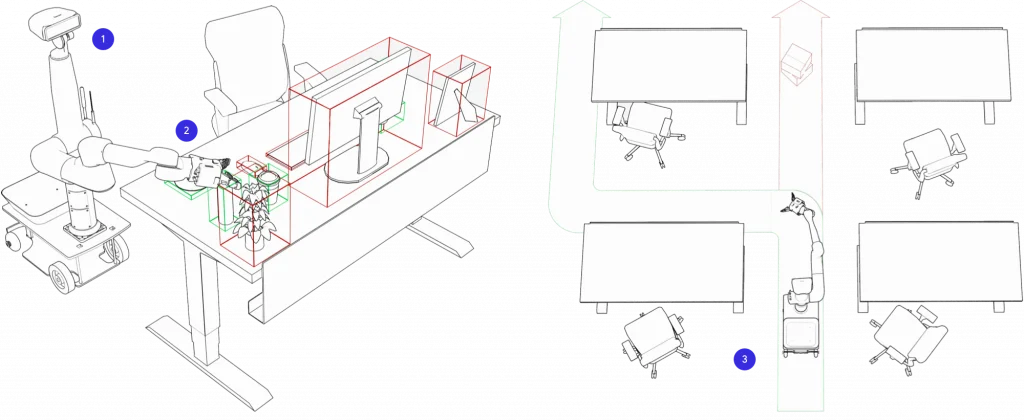

And Google’s done thinking small. While the machines these models run on might resemble the robotic arms you’ve seen in industrial settings or Iron Man movies, Google is also “partnering with Apptronik to build the next generation of humanoid robots with Gemini 2.0.”

These two models aren’t just programmed toys but learning machines with an intuitive-sounding capability to understand and adapt to the physical world around them. Let me explain with an example. Imagine this robot in a kitchen encountering a spatula for the first time. Instead of freezing or calling a human for help (that’ll be something), it connects visual information about the spatula’s shape with its understanding of cooking tools, which is part of its LLM. Suddenly, it knows exactly what to do. No complex coding, no manual required. Just adaptive intelligence.

This “generalisation, adaptability, and dexterity” hints at a future where robots aren’t just clunky factory machines doing one specific task like a mechanistic Prometheus but intelligent, helpful assistants. They could tidy up your home, help your dad cook dinner, remind your grandmother to take her meds, and even run through a fire to rescue your cat.

Google’s Deeper Mission: In the days of PageRank, Google aimed to ‘organise the world’s information’ and make it universally accessible and useful. It’s done that for a quarter of a century. Now, it seems they’re foraying into the physical realm with robotics. The truth, though, is that this robotics foray is also part of the same mission. The physical world is full of information that’s not neatly digitised or searchable. Consider the subtle nuances of human language and interaction, the way objects feel and behave, or how things change in the physical domain every moment, every day.

These complex human behaviours and intricate spatial dynamics defy simple categorisation. By giving AI a physical presence, Google can unlock this uncharted territory of real-world data.

Robots can also learn by doing, by interacting with their environments, and by observing human behaviour. This “learning by doing” approach could be the real key to creating truly intelligent, adaptable AI – potentially transforming our physical world as dramatically as Google transformed the digital landscape.

The Winding Path: Google’s Robotics Journey: Far from being a straight line, Google’s journey into robotics has been a winding road filled with exciting discoveries, unexpected challenges, and multiple project closures. A significant milestone was the “Everyday Robots” Project within Google’s “X” (now “X Development LLC”), which aimed to create general-purpose robots capable of learning and adapting to everyday tasks.

The vision, when it started, was impossibly utopian: robots that could seamlessly integrate into our human environments and perform both our mundane and exciting chores with ease. While the project ultimately concluded, it taught the company some invaluable lessons about teaching robots through simulation, reinforcement learning, and imitation, i.e., showing robots how tasks are performed so they can learn from the demonstrations, much like humans do. These approaches led to VLAs.

The Challenges Ahead: The two Google models might seem impressive, but they’re just the first landmark of a future overpopulated with robots, tiny and big, cheap and expensive, created by giant corporations and garage tinkerers… all of which would have one stated aim: to help us work and navigate our physical world much like what AI does for us in the digital world.

The first hurdle: the mind-boggling complexity of human conversation. Humans (and occasionally animals) are masters of nonverbal cues, subtle gestures, and implicit understandings. We can communicate volumes without saying a word. Deciphering this intricate dance between what is said and what is understood, between what a gesture shows and what goes unsaid despite a flood of words, is more than a problem of better algorithms and training. Yet, VLAs are a great starting point in this journey.

Then there’s the seemingly effortless dexterity of humans (and most animals). We never think about it, but a simple task, such as picking up a cup or opening a door, actually requires incredibly precise movements, motor skills and coordination. Developing robots with comparable dexterity is a humongous engineering challenge we’ve been grappling with for decades.

Then there’s every AI doomsayer’s favourite topic: safety. Robots need to operate around humans without causing harm. These plastic and metal machines must be able to handle unexpected situations without causing damage to property or injury to flesh and bone creatures, humans and our pets alike. Ensuring this would require robust safety mechanisms and intelligent decision-making capabilities.

Philosophical and Ethical Frontiers: Finally, let us never forget that this ‘giving AI a body’, beyond engineering challenges and safety concerns, is both a philosophical and ethical nightmare. The questions that emerge from it are never-ending. What does this mean for the fundamental concepts of intelligence and consciousness? Will having a physical presence fundamentally alter the very nature of AI? Will the marriage of AI and robotics give birth to something more than just sophisticated machines?

And let’s not forget the societal implications of robots with AI brains. Bodiless AI is already being blamed for job losses. AI with a body portends implications so unfathomable that it could upend not just our jobs and relationships but the very fabric of society: leisure, money, family and more.

Google’s first motto was “Don’t be evil,” which later evolved to “Do the right thing.” Can the company follow either approach and ensure that these powerful machines are used for good rather than for harm? What about their use in warfare? Wouldn’t that be evil and not ‘the right thing’? Establishing ethical guidelines and safety protocols for plastic and metal robots would be crucial for navigating the future of robotics responsibly.

We’ve barely begun coming to terms with our digital transformation. Now, we find ourselves stranded in a realm where intelligent machines might fold our laundry, cook our meals, and potentially understand us better than our partners and spouses. This quest is, hence, a fundamental shift in our relationship with technology and with each other. The journey from lines of code to walking, thinking machines has just begun. And if our digital history is any indication, we’re in for one hell of a ride.
