When a restaurant chain loses nearly $100 million in market value because AI bots egged humans on, we are in the era of manufactured outrage, where the loudest voices in the room aren’t even human, writes Satyen K. Bordoloi

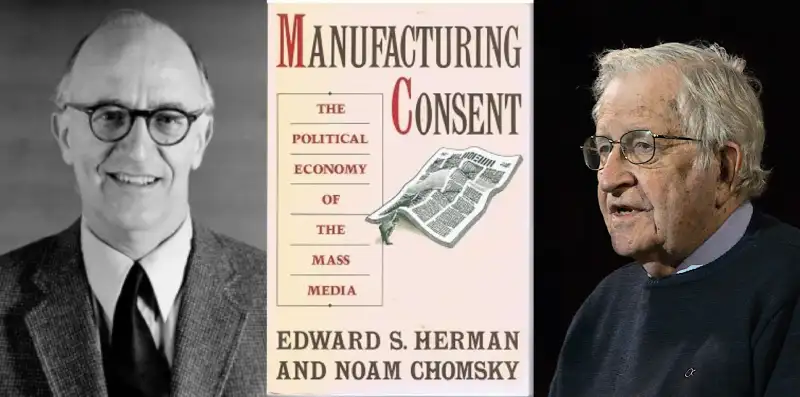

Many sayings warn us against falsehoods: a lie travels around the world several times before the truth has even had the chance to put on its shoes, and a lie repeated often enough becomes the truth. It turns out these two ideas are at the centre of a global network of what Edward S. Herman and Noam Chomsky called Manufacturing Consent, except that now it is done by brewing outrage through algorithms. Until a few years ago, this was done with bots on social media; now the bots have got an Artificial Intelligence boost.

On August 19, Cracker Barrel, the Tennessee-based restaurant chain known for its country-style comfort food and rustic décor, unveiled a “refreshed” logo. Twitterati erupted with outrage, memes, and calls for a boycott. Conservative commentators framed it as a culture-war issue. Within two days, Cracker Barrel’s stock had plummeted, wiping out nearly $100 million in market value. The company, rattled by what seemed to be overwhelming public backlash, reversed course: it scrapped the new logo, shelved renovation plans, and brought back the “Old Timer”.

The only problem with all that: much of that outrage was not real. PeakMetrics, a Los Angeles-based narrative intelligence platform that monitors online discussions and threats, found that 44.5% of X posts about Cracker Barrel on August 20 showed signs of bot-like activity. Among boycott-related posts specifically, that number rose to 49%. Over the entire controversy – from August 19 to September 5, encompassing more than two million posts – 24% carried signs of automation.

Israeli disinformation detection firm Cyabra, which analyses fake profiles and coordinated campaigns across social media platforms, conducted its own investigation and found that 21% of the profiles discussing Cracker Barrel’s logo change were fake accounts orchestrating a coordinated disinformation campaign. These inauthentic profiles generated content that reached over 4.4 million potential views.

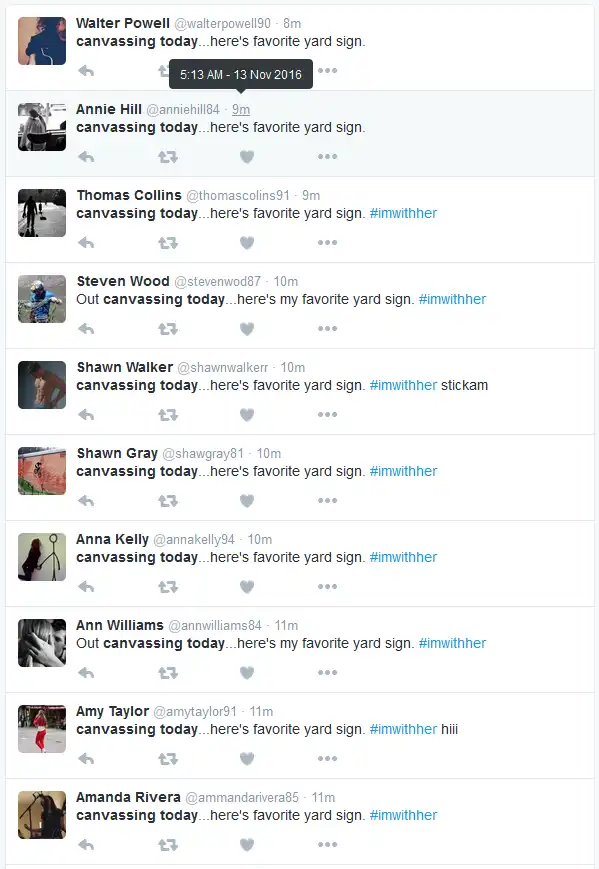

PeakMetrics noted something particularly telling: most of the bot accounts used identical wording in their posts. The bots’ posts fell into two main categories – critiques of Cracker Barrel for going “woke” and abandoning its heritage, and calls for a leadership change, a boycott, or a logo reversal. While the adverse reaction started with real people, particularly right-leaning accounts, bots then amplified the message many times over.
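The "identical wording" signal can be illustrated with a small sketch. This is not PeakMetrics' method, which the article does not describe; it is a minimal illustration that assumes posts arrive as (account, text) pairs. The function names, the `min_accounts` threshold, and the sample posts are all hypothetical.

```python
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase, drop URLs, mentions and punctuation, collapse whitespace."""
    text = re.sub(r"https?://\S+|@\w+", " ", text.lower())
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return " ".join(text.split())


def flag_copy_paste_accounts(posts, min_accounts=3):
    """Return accounts whose wording is shared by at least `min_accounts` distinct accounts."""
    accounts_by_text = defaultdict(set)
    for account, text in posts:
        accounts_by_text[normalize(text)].add(account)

    flagged = set()
    for text, accounts in accounts_by_text.items():
        # Ignore posts that normalize to nothing (e.g. a bare link).
        if text and len(accounts) >= min_accounts:
            flagged |= accounts
    return flagged


# Made-up sample posts, for illustration only.
posts = [
    ("user_a", "Cracker Barrel went woke. Boycott now!"),
    ("user_b", "cracker barrel went WOKE, boycott now"),
    ("user_c", "Cracker Barrel went woke... boycott now! https://example.com/x"),
    ("user_d", "I honestly liked the old logo better."),
]
flagged = flag_copy_paste_accounts(posts)
print(sorted(flagged))
```

Identical wording alone does not prove automation, since real people also copy and paste slogans. A real detection system would presumably combine it with other signals such as posting frequency and account age.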

Manufacturing Outrage at Scale

Cracker Barrel isn’t alone. Amazon and McDonald’s faced similar orchestrated attacks in 2025 after rolling back their diversity, equity, and inclusion (DEI) initiatives. According to Cyabra, nearly 30% of social media posts advocating for boycotts of these companies were generated by AI bots. The campaign involved fake news websites that appeared on Facebook, reporting on the supposed McDonald’s boycott.

When examining over 5,000 profiles discussing McDonald’s between June 19 and June 26, Cyabra found that 32% of these accounts were inauthentic. For Amazon, the numbers were even more alarming: 35% of 3,000 accounts discussing the company from the beginning of the year to June 20 were fake, and a targeted review from June 1 to 24 found that 55% of accounts discussing Amazon were not real.

This is not just a matter of corporate manipulation; it has the stench of an industrial-scale problem. Research from the University of Notre Dame found that AI bots easily bypass social media safeguards on eight major platforms: LinkedIn, Mastodon, Reddit, TikTok, X, Facebook, Instagram, and Threads. Researchers successfully published benign “test” posts from bots on every single platform they examined.

The 2025 Imperva Bad Bot Report reveals that automated bot traffic surpassed human-generated traffic for the first time in a decade, constituting 51% of all web traffic in 2024. Malicious bots alone now account for 37% of all internet traffic – a significant increase from 32% in 2023. This marks the sixth consecutive year of growth in harmful bot activity.

The AI Advantage

What makes today’s bot campaigns particularly insidious is how generative AI has lowered the barrier to entry. As the Atlantic Council’s Digital Forensic Research Lab notes, modern political bots are characterised by three core features: activity, amplification, and anonymity. But AI has supercharged all three.

Research published in PNAS Nexus by scholars, including Joshua A. Goldstein, demonstrated that GPT-3-generated propaganda was nearly as persuasive as content sourced from real-world foreign covert propaganda campaigns. While only 24.4% of respondents who weren’t shown an article agreed with a thesis statement, that rate jumped to 43.5% among those who read GPT-3-generated propaganda – a 19.1 percentage-point increase. The original propaganda performed only slightly better, at 47.4%.

Perhaps most concerning, a study examining a Russian-backed propaganda outlet found that adopting generative AI tools increased the productivity of the state-affiliated influence operation while expanding the breadth of content without reducing the persuasiveness of individual publications. The research, published in a peer-reviewed journal, showed that AI tools enabled the outlet to generate larger quantities of disinformation and expand the scope of their campaign at multiple levels of production.

The technology has also given rise to “Bots-as-a-Service” (BaaS) – a commercialised ecosystem where malicious actors can rent bot networks without technical expertise. According to cybersecurity experts, the BaaS market has grown substantially, with bad actors leveraging AI to scrutinise their unsuccessful attempts and refine techniques to evade security measures with heightened efficiency.

Manufacturing Consent, 2.0

In their seminal 1988 work Manufacturing Consent: The Political Economy of the Mass Media, Edward S. Herman and Noam Chomsky argued that mass media function as a system-supportive propaganda tool through market forces, internalised assumptions, and self-censorship. They identified five filters through which news passes before reaching the public: ownership, advertising, sourcing, flak, and anti-communism (later updated to “fear”).

Today’s AI-powered bot networks constitute a sixth filter – one that operates not by controlling what information reaches the public, but by creating and artificially amplifying certain narratives to drown out others. The real problem isn’t just that AI creates fake content to influence people. It is how easily a particular narrative can be amplified: AI bots generate fake engagement with both real and fake content, which leads social media algorithms to amplify that content to real users, who are in turn fooled by the high engagement into thinking the outrage is real when it may not be.

This is a vicious cycle: bots generate fake likes, retweets, and shares. Social media algorithms interpret this as genuine engagement and promote it to real users. Some real users then engage with it, validating the algorithm’s decision to amplify the artificially boosted narrative further. It becomes a self-fulfilling prophecy as fake engagement, before you can stop it, leads to real engagement.
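The loop described above can be sketched as a toy simulation. It models no real platform's ranking system: the function name, the parameters, and all their default values are assumptions chosen purely for illustration. The only mechanism it encodes is the one in the text, where reach grows with total engagement and real users engage in proportion to reach.

```python
def simulate(bot_engagements_per_round, rounds=5, audience=10_000,
             base_reach=0.01, boost_per_engagement=0.00005, engage_rate=0.02):
    """Toy feedback loop: return the total real-user engagement after `rounds`."""
    total_engagement = 0.0  # what the ranking algorithm sees (fake + real)
    real_engagement = 0.0   # engagement that came from actual people

    for _ in range(rounds):
        # Bots inject fake likes, retweets and shares.
        total_engagement += bot_engagements_per_round
        # The algorithm cannot tell fake from real, so reach follows the total.
        reach = min(1.0, base_reach + boost_per_engagement * total_engagement)
        # A fixed fraction of the real users who see the post engage with it.
        new_real = audience * reach * engage_rate
        real_engagement += new_real
        # Real engagement feeds back into the next round's ranking.
        total_engagement += new_real

    return real_engagement


organic = simulate(bot_engagements_per_round=0)
with_bots = simulate(bot_engagements_per_round=500)
print(f"real engagement without bots: {organic:.1f}")
print(f"real engagement with bots:    {with_bots:.1f}")
```

In this toy setup the same post attracts more real engagement when bots seed it, even though no real user's behaviour has changed. Only the exposure has.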

The Detection Problem

Fighting back against AI-powered bot networks isn’t easy. The fundamental problem is one of scale and sophistication. Deepfake detection systems have shown impressive accuracy rates – one study achieved 95.56% for image data, 98.5% for audio, and 97.574% for video – but they require substantial computational resources, which makes them impractical for you and me and even for computing applications. All the while, bots are getting better at fooling us with human-like activity.

Hence, in the wake of incidents like the one involving Cracker Barrel, executives should treat social media outrage with a pinch of scepticism. It could be real, or it could just be manufactured. We aren’t merely living in the age of manufactured consent; we’re automating it.

For the rest of us, though, this bodes ill. Any decision, no matter how horrible, can ride the coattails of social media outrage manufactured by bad actors and end up harming real people. The question, then, is what kinds of tools, regulations and critical thinking we will develop to resist a time when the loudest voice in any conversation isn’t even human.

In this brave new AI world, the old adage needs an addition: The lie travels with an army of bots to amplify itself thousands of times, until manufactured outrage becomes indistinguishable from the real thing, even before the truth can wear its shoes.
