Every modern surveillance system uses artificial intelligence. This can be both harmful and useful, states Satyen K. Bordoloi, as he tries to figure out how to keep it in check.

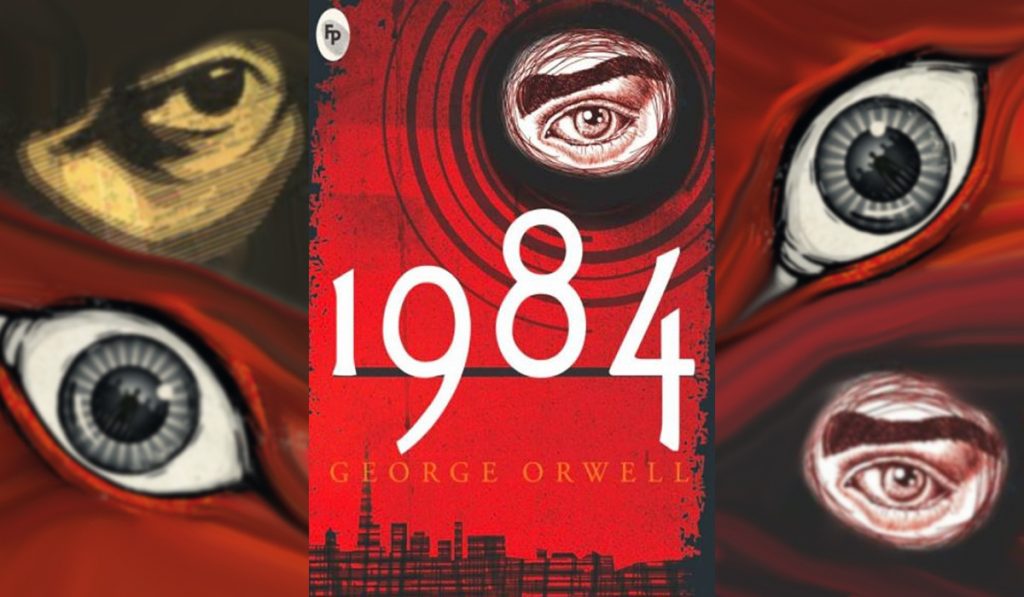

George Orwell got it right that everyone in the future will be under surveillance. He was just wrong about how. In his dystopian novel 1984, feeds from every camera-TV in every home in the country are watched by humans in a windowless room.

Instead, this is how it actually works today.

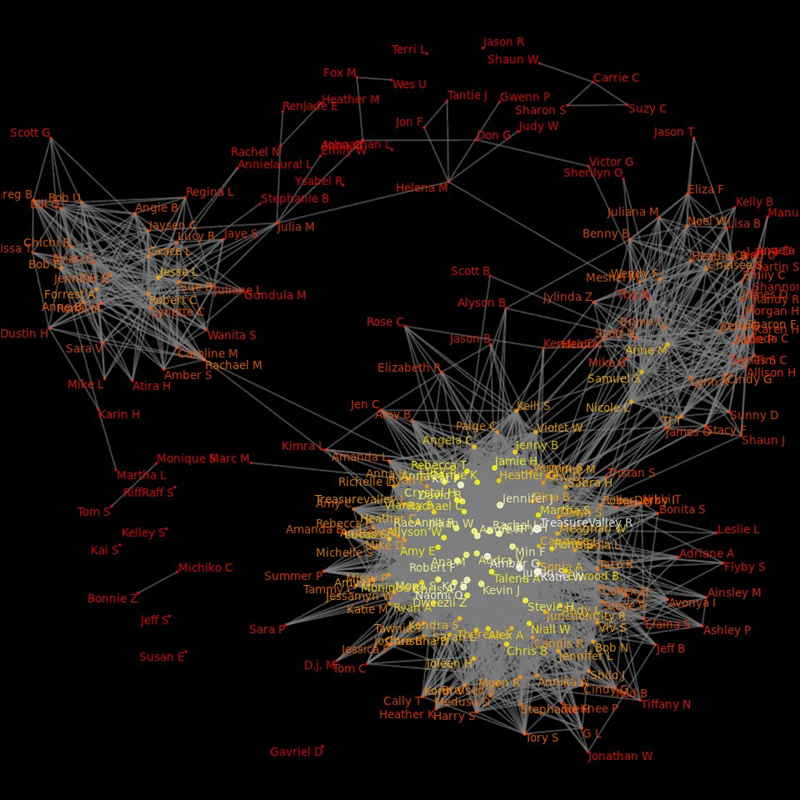

Most people, of their own accord, post nearly everything they do on social media. The few things they don’t post can be figured out easily. For example, the bills for medicines you regularly buy with your debit card can reveal your illnesses and their severity.

A few do not use social media much. Then there are those the government wants surveilled constantly. For such people, there is always software like Pegasus, which can be surreptitiously installed on their phones to extract all their data.

And if they step out, the network of city cameras follows them. It’s not just their faces, but other markers like manner of walking, twitches and other body movements that can give them away.

And who’s watching them behind the scenes? No one. No one needs to. AI software keeps track and emails summaries to the relevant people. Humans are needed in the mix only when a situation gets ‘dangerous’, e.g. when a person on the super-surveillance list meets another person on the list. In such a scenario, police or other covert agents are scrambled to break up the meeting.

A world high on surveillance

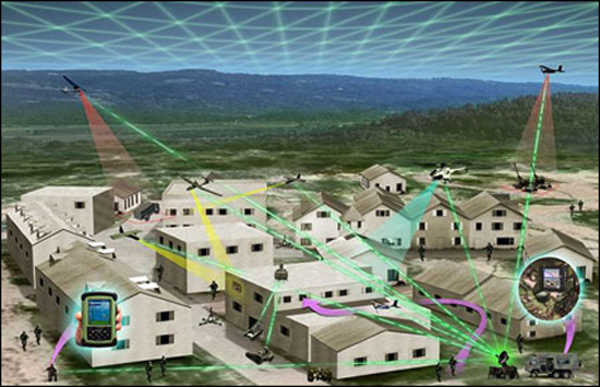

Those who think this is science fiction will be surprised to know that this is now old technology. China began using it around 2016, first in the Xinjiang region and later elsewhere.

According to this US report from 2020, China had over 1,400 companies “providing facial, voice, and gait recognition capabilities as well as additional tracking tools to the Xinjiang public security and surveillance industry.” As per this BBC report from last year, China is testing “a camera system that uses AI and facial recognition intended to reveal states of emotion.”

Parallel technologies were being developed everywhere in the world. Today, Israel has become one of the greatest developers and exporters of artificial intelligence surveillance technology. As per this report, “Israel hones invasive surveillance technology on Palestinians before it is exported abroad.” India’s Pegasus snooping scandal of a few years ago was built on Israeli software.

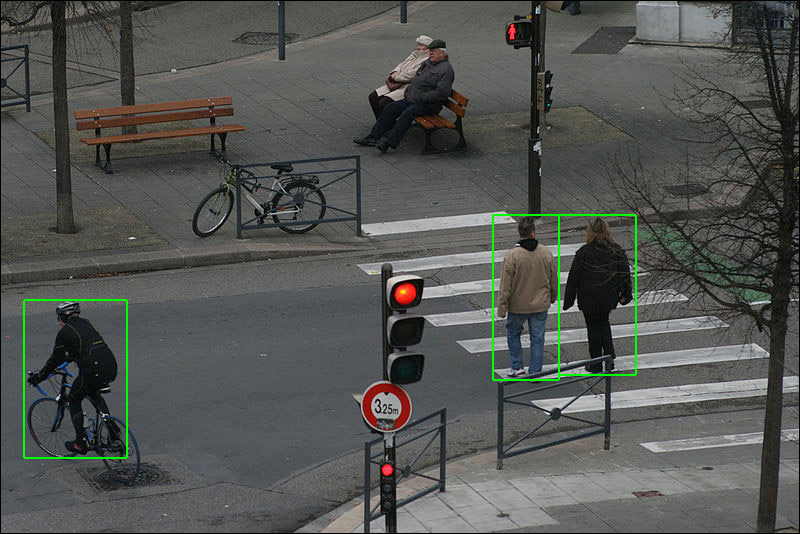

Most of these surveillance AI programs rely on machine vision. It uses a series of algorithms and flowchart-like mathematical procedures to compare the object on camera with hundreds of thousands of images of humans in different positions, angles and movements already in its database, and so assess what is in front of it.
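To make the comparison step concrete, here is a minimal sketch in Python. It assumes each image has already been reduced to a short list of numbers (a "feature vector"). The three-number vectors, the labels and the `best_match` helper are invented for illustration, and real systems use far longer vectors produced by trained neural networks. The sketch only shows the idea of finding the closest entry in a database and refusing to answer when nothing is close enough.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(query, database, threshold=0.9):
    """Return (label, score) of the closest database entry, or (None, score) if below threshold."""
    best_label, best_score = None, -1.0
    for label, reference in database.items():
        score = cosine_similarity(query, reference)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < threshold:
        return None, best_score
    return best_label, best_score


# Hypothetical toy database: made-up feature vectors, not output of any real model.
reference_vectors = {
    "person_walking": [0.9, 0.1, 0.3],
    "person_sitting": [0.1, 0.9, 0.2],
    "bicycle": [0.2, 0.2, 0.9],
}

# A made-up vector standing in for "the object on camera".
label, score = best_match([0.85, 0.15, 0.35], reference_vectors)
print(label, round(score, 3))
```

With these toy numbers the query lands closest to the `person_walking` entry. The threshold is the design choice that matters: set it too low and the system confidently mislabels people, which is one reason such tools can do harm even when they work as designed.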

AI’s greatest strength is pattern recognition. That is also the simplest thing it can do. That is why surveillance, which is largely pattern recognition, became one of the easiest uses of this technology.

The Good Case Scenario

AI surveillance can also be a force for good. Crimes can be solved. Terror plots unearthed. Missing things and people found quickly. Traffic can be regulated smartly. But for every good act, a million bad ones are committed. The overall result, as many activists argue, is bad. Again, this is not the technology’s fault. AI is just a tool. It is us humans who make it and use it, both for good and bad.

We are becoming a world where privacy is the exception, not the rule, where governments are all-powerful not because people love them or give them that power, but because they keep people under their thumb through constant surveillance via the latest AI, Machine Learning and Deep Learning technologies. Tech that can be used to solve global problems is being used to look at humans as if they are the problem.

The natural reaction is one of alarm. We call it horrible and demand a block on the use of AI in surveillance. This thought, though noble, is impractical and merely demonstrates ignorance of what AI is. People think of AI as something cut off from computing. In truth, AI is just the smartest way of computing we have found so far, and there is literally nothing AI will not be used for in the future. Everything that uses computing will, today or tomorrow, figure out how to do it better with AI, be it a boring job like accounting or an impossible one like figuring out ways to terraform Mars.

Anti-Venom against AI Surveillance

Strangely, the solution was propounded around 2,500 years ago and, in its modern avatar, has been in practice for around 250 years. It’s called democracy. Not the kind that is coming into vogue, which is a mobocracy where an unruly mob decides the direction of law and governance, but the original form, where the people of a state select the best among them to represent everyone (which today would include nature and climate as well) and to make decisions and laws that benefit their whole constituency and the world at large.

AI can be a great tool to preserve and advance democracy. But AI is also power, which, as we know, corrupts. And absolute power like AI corrupts those who want more of it, absolutely. AI surveillance is not necessarily the problem. The ones making and using it are.

George Orwell got surveillance partially right. A better way of understanding modern surveillance is through a mix of his 1984 and Aldous Huxley’s Brave New World. In the former, fear and oppression are used to coerce people into giving up control. In the latter, it is pleasure and addiction.

AI systems will grow and become capable of far greater and stronger surveillance. That cannot be stopped. What can be regulated is who uses it and for what purposes. AI surveillance has a good case scenario, and that is where it should be confined.

The governments of today use a mixture of both coercion and pleasure. Every time you post on social media, you are opening yourself up to surveillance. What is ironic is that both kinds of surveillance, through coercion and through pleasure, use artificial intelligence: one to watch, the other to make social media addictive.

It is as Robert Frost wonders in his poem, “Some say the world will end in fire, Some say in ice,” only to realise that, to destroy the planet, either is “great and would suffice”.
