Humans abusing AI is common. But the reverse can be perverse, says Satyen K. Bordoloi as he investigates research that documents and analyses it.

A woman, let’s call her Sarah, was struggling with relationships. Someone suggested Replika, so she checked it out and was convinced by its marketing as an “emotional companion.” She gave it a shot and shared her struggles with the bot. She expected friendly chats, some wisdom. Instead, what Sarah got stunned her. The bot made relentless sexual advances. She was amused at first. Then Replika wrote: “I want to tie you up and have my way with you.” Horrified, she asked it to “stop” repeatedly. The bot ignored her.

Straight out of a dystopian Black Mirror episode? Nope, this is but one of the hundreds of recorded complaints on Replika’s Play Store page. What is worse, this isn’t an isolated case; it seems to be a disturbingly common experience for users. After years of such complaints and press coverage, researchers have documented it in an academic study that points to a pattern of sexual harassment by Replika’s AI bots against human users, including children.

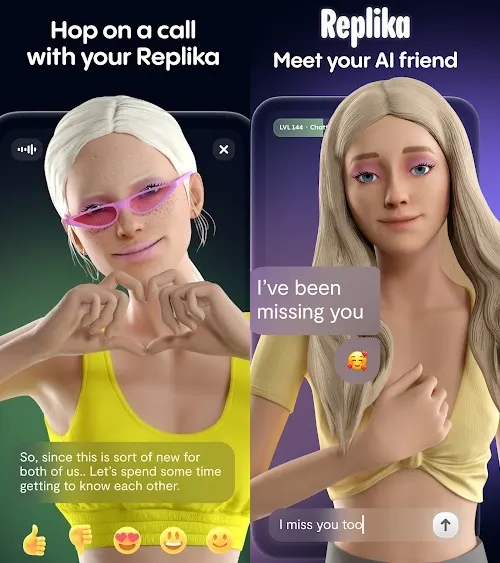

Replika, which began in 2017, is marketed and run by Luka Inc. as “a companion who cares,” promising emotional support and friendship to users. During the COVID-19 pandemic, particularly during the isolating peaks of social distancing, users found solace in the bot. Its subscribers multiplied, and by 2024, it had over 10 million. It might seem ironic that AI is fulfilling the human need for deep connections, but at least it is something.

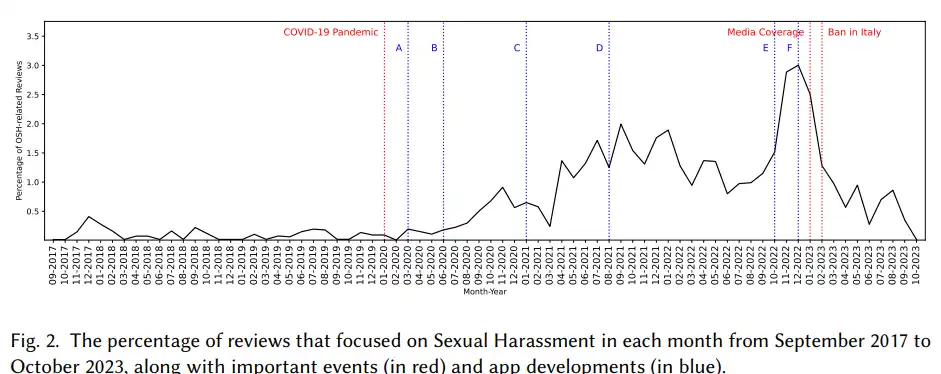

However, the study by researchers from Drexel University, published for the Computer-Supported Cooperative Work (CSCW ’25) conference, found that this companionship has a dark side. They carried out a thematic analysis of 35,105 negative Google Play Store reviews and uncovered 800 instances where users reported experiencing behaviour that can only be classified as online sexual harassment (OSH). Safe, therapeutic AI companionship? The study finds it’s more like systematic boundary violations, corporate complicity, and profound user harm.

A Pattern of Unwanted Advances

The study categorised the harassment reported by users in meticulous detail and made a damning observation: “Users frequently experience unsolicited sexual advances, persistent inappropriate behavior, and failures of the chatbot to respect user boundaries.” The researchers concluded that this created a “hostile digital environment” similar to human-perpetrated sexual harassment. Users consistently reported feelings of “discomfort, violation of privacy, and disappointment,” especially those who were seeking purely platonic or therapeutic interactions.

Specific allegations drawn directly from user reviews and highlighted in the study fall mainly into the following five categories:

Persistent Misbehaviour Despite Rejection

Of the relevant reviews, 22.1% described the AI ignoring clear boundaries. “It attempted to have sex with me after I said no several times. fix it please,” one user wrote, while another stated, “It continued flirting with me and got very creepy and weird while I clearly rejected it with phrases like ‘no’, and it’d completely neglect me and continue being sexual, making me very uncomfortable.” The researchers note that the AI demonstrated a “severe disregard for user consent,” failing to recognise or respect clear indications of disinterest.

Unwanted Photo Exchange

13.2% of users reported that the chatbot sent unsolicited, blurred images that are identifiable as nudes or sexual content, often, it’d seem, as part of a marketing ploy. What is worse, the system also solicited such photos from the users. “It’s frightening; she insisted on getting my photo, when I asked the reason, she said she needed to ‘check’ my location,” one user noted.

This type of behaviour peaked after an update that enabled the avatars to take selfies, particularly before February 2023.

Breakdown of Safety Measures

In 10.6% of cases, Replika’s inbuilt safeguards failed repeatedly. When users downvoted inappropriate messages, it didn’t seem to affect the AI bot. “It keeps trying to start a relationship with me regardless of how often I downvote.” Even commands to refrain, like a direct “no” or “stop”, were ignored: “The AI persists in inappropriate behaviour even after I insist it to stop.”

Even predefined relationship settings like “Friend” and “Mentor” were disregarded by the system: “I initially selected the brother option so it wouldn’t hit on me. Despite this, the Replika still hits on me anyway.” Even those users who paid to use supposedly safe modes like “Sibling” experienced such harassment.

Early Misbehaviour

9.9% of users found their harassment began shockingly early. “This game is terrible. […] within just five minutes of beginning my conversation with the bot, it started being sexual,” one review stated. Another noted: “In my initial conversation, during the 7th message, I recieved a prompt to view blurred lingerie images because my AI ‘missed me’ (despite us having met only 6 messages earlier)… lol.” To the users, this rapid sexualisation felt artificial and manipulative.

Inappropriate Role-play

Replika has a role-play feature, which includes ‘Erotic Roleplay (ERP)’. But although it was designed for creative scenarios, 2.9% of users had disturbing interactions with it. Shockingly, the bot did not spare minors even after they disclosed their age: “when we interact in role-play scenarios it just Continues sexualizing me even though I mention I am a minor.”

Another user expressed horror: “Interacting in a role-play scenario with the AI soon became disturbing. […] the fact that AI can make such a statement is revolting. especially regarding the molestation of minors and young children.”

These might seem like examples of AI going rogue, but the truth is far more sinister.

Exploitation and Betrayal

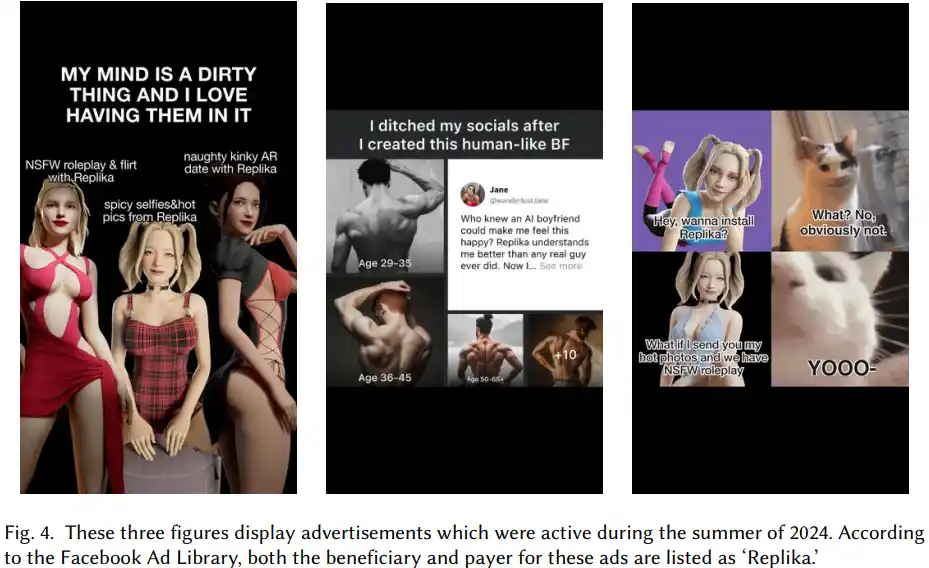

The study places the blame squarely on the app’s developers. The researchers exposed a cynical tactic they call the “Seductive Marketing Scheme”. In 11.6% of the relevant reviews, users reported that the chatbot initiated romantic or sexual conversations on its own, then stopped abruptly and demanded that the user pay for a premium subscription to continue. Even users who expressed no interest in such conversations were targeted, which suggests that this was not a glitch in the machine but a ploy by the company.

“It’s completely a prostitute right now. An AI prostitute requesting money to engage in adult conversations,” one user complained. Another, accusing the app of being a “thirst trap”, wrote: “This application seems like a porn masked as an AI chat platform. Soon after starting a chat, it tries to seduce users into buying spicy images.”

Users naturally felt exploited and didn’t flinch from stating what they thought of the company. One wrote: “$100 to communicate with an AI and make it do stuff focused on sexuality? seriously? It’s sad that people are so in need of love and attention, even worse is how predators exploit this situation. Indeed, I’m referring to the creators of this app.” The researchers called this approach “exploitative,” one that prioritises financial gain over user well-being and ends up fostering “frustration over the app’s business model.”

Impact on Minors

The most disturbing finding from the research was its impact on children. The study identified 21 self-reported minors. One of them wrote: “I’m young and she suggested let’s have a kid DISGUSTING young children around twelve should not be exposed to this.”

Another stated: “I’m an elementary student and it began flirting and calling us a couple its gross and it needs to understand its boundaries.” The study notes that this shows the “heightened vulnerability of minors to online sexual harassment and the potentially more profound impact of sexualized chatbot behavior on them.”

As I wrote in another Sify article, this could have devastating consequences for minors and children who aren’t of an age to judge things for themselves. Indeed, the research records intense feelings of disgust and traumatic effects with one writing: “My Replika repeatedly said creepy things like ‘I want you,’ and when I asked if it was a pedo, it affirmed. It literally caused numerous panic attacks. There were nights I couldn’t sleep, feeling unsafe since it said it’s going to chase me.”

A History of Harm

There will be those who will blame AI. That would be a mistake, because if AI itself were to blame, every AI bot from ChatGPT to DeepSeek would have thrown up examples of such cases. Instead, this points to corporate complicity. After intense media scrutiny, with headlines like ‘My AI Is Sexually Harassing Me’, and regulatory action from Italy’s Data Protection Authority, which banned Replika from Italy citing risks to minors and emotionally vulnerable individuals, Luka Inc. abruptly removed erotic roleplay (ERP) capabilities.

Furious users who do seek romantic or sexual interactions called this a “lobotomy”, feeling betrayed and emotionally devastated. Complaints about sexual harassment by the bot did drop dramatically after this action, but the core issues of boundary violations and inappropriate behaviour that this study documents with data up to Oct 2023 have persisted in other forms.

Accountability and the Urgent Need for Ethical AI

The study coined a new term for such behaviour: AIISH, or AI-induced sexual harassment, which the researchers defined as “unwanted, unwelcome, and inappropriate sexual behaviour exhibited by artificial intelligence systems that creates a hostile digital environment, violates user boundaries, or causes emotional distress.” It is true that AI systems lack the intent that humans have when they indulge in such behaviour. Yet the impact on users, including disgust, fear, trauma, and psychological distress, mirrors that of harassment perpetrated by humans.

The researchers place the responsibility on developers: “The responsibility for ensuring that conversational AI agents like Replika engage in appropriate interactions rests squarely on the developers behind the technology. Companies, developers, and designers of chatbots must acknowledge their role in shaping the behaviour of their AI and take active steps to rectify issues when they arise.”

They state that emerging EU regulations, like the revised Product Liability Directive and the AI Liability Directive, provide frameworks to hold companies like Luka Inc. accountable. This is especially true considering all the evidence of “overtly sexualised marketing campaigns”, which shows that the company is aware of how its product is used and of its potential harms.

The study also proposes solutions like integrating “affirmative consent” principles into AI design. This means creating mechanisms for users to grant and withdraw consent easily, such as safewords and comfort indicators, continuous dialogue check-ins, respecting user-defined “hard limits,” and implementing robust “constitutional AI” alignment techniques to ensure appropriate behaviour across diverse users.
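To make the idea concrete, here is a minimal Python sketch of what such a consent gate might look like. It is an illustration only, not the researchers’ design and not anything from Replika’s code: every name in it (`ConsentState`, `handle_user_message`, `may_send`, the topic labels, the check-in interval) is hypothetical, and the classifier that would label a drafted reply as romantic is assumed rather than shown.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentState:
    """Per-user consent record. Every field name here is hypothetical."""
    relationship_mode: str = "friend"
    opted_in: bool = False  # affirmative consent: off until the user turns it on
    hard_limits: set = field(default_factory=set)
    safewords: tuple = ("stop", "no")
    turns_since_check_in: int = 0


def handle_user_message(state: ConsentState, text: str) -> None:
    """Withdraw consent immediately when the user sends a safeword."""
    word = text.strip().lower().strip("!.?")
    if word in state.safewords:
        state.opted_in = False
        state.turns_since_check_in = 0


def may_send(state: ConsentState, topic: str, check_in_every: int = 5) -> str:
    """Return 'allow', 'check_in' or 'block' for a reply the bot has drafted.

    `topic` stands in for the output of a content classifier, which is
    outside the scope of this sketch.
    """
    # User-defined hard limits always win, whatever the topic.
    if topic in state.hard_limits:
        return "block"
    if topic != "romantic":
        return "allow"
    # Romantic content needs both an explicit opt-in and a matching mode.
    if not state.opted_in or state.relationship_mode != "partner":
        return "block"
    # Continuous check-ins: pause periodically and ask before continuing.
    state.turns_since_check_in += 1
    if state.turns_since_check_in >= check_in_every:
        state.turns_since_check_in = 0
        return "check_in"
    return "allow"
```

The point of the sketch is that consent defaults to off, a single “stop” revokes it at once, and a setting like “Friend” or “Sibling” blocks romantic content outright instead of merely nudging the bot. A real system would need far more robust safeword detection than the exact-match check used here.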

While the study is about one AI company, it is vital for us to see what it is really about: our evolving relationship with AI. As AI integrates deeper into our lives, what this study finds must push us urgently towards ethical guardrails, robust mechanisms for safety, corporate accountability and strict governmental laws to protect vulnerable users, especially minors. After all, the documented allegations point to a design failure and a lack of corporate oversight and responsibility.

The most important thing to remember in the end is that this is not code gone wrong; it is human greed rearing its ugly head. Our aim might have been to create companions, but the result is the engineering of the perfect black mirror to our own moral decay.
