A theory about human intelligence formulated over four decades ago could pave the way for artificial general intelligence, writes Satyen K. Bordoloi

Every technological leap births its own mythology: visions so dazzling they blur the line between reality and fantasy. Nuclear power promised electricity “too cheap to meter.” Electric vehicles would run on batteries that could go coast to coast without a recharge. The Internet was to democratize knowledge. The most audacious techno-myth of all belongs to AI: Artificial General Intelligence, or AGI, a hypothetical superintelligence so vast and godlike that it would not only outthink every AI ever built but eclipse the collective intellect of humanity itself.

The reality? Nearly two years ago, Sam Altman was reported to be seeking as much as $7 trillion to build AI infrastructure on the road to AGI. That price tag exceeds the annual US federal budget, is roughly twice the UK’s GDP, and is about 1.7 times the GDP of India.

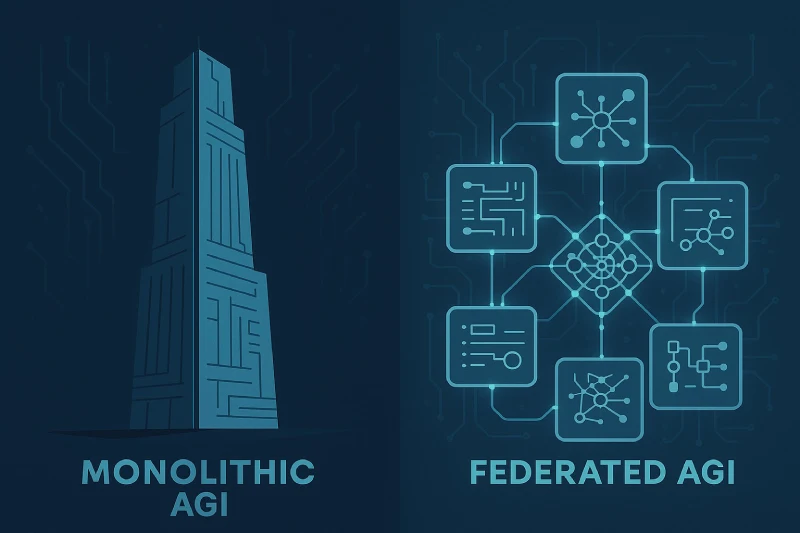

Some in AI are more realistic. For them, AGI is simply an AI that can perform any intellectual task a human can: one that matches or exceeds human cognitive abilities across tasks and has human-level versatility. In short, a machine functionally equivalent to human intelligence. That seems achievable. But perhaps a single, monumental algorithm that does all this is the wrong way to get there.

It may help to turn the problem around: instead of building one intelligence, we could mimic biology and construct a council of brains, a federation of specialised intelligences that, once integrated, gives rise to general intelligence as an emergent property.

MULTIPLE INTELLIGENCES

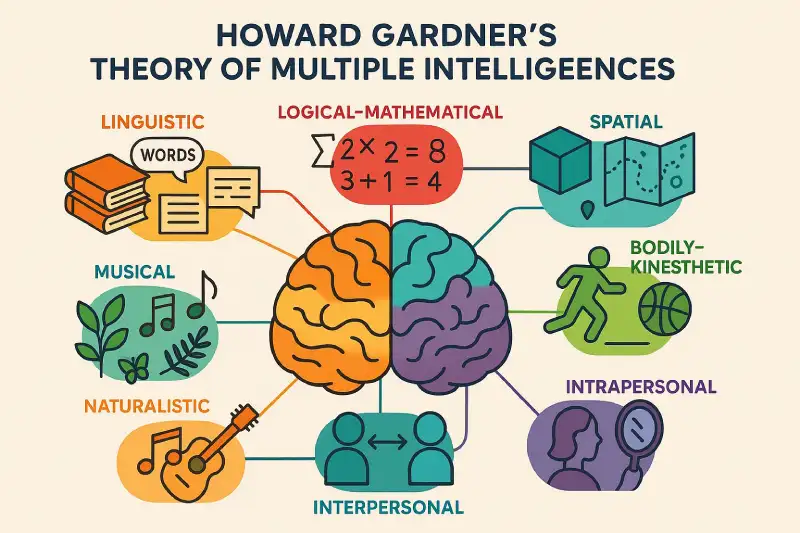

The inspiration for this idea comes from Howard Gardner’s Theory of Multiple Intelligences. First introduced in his 1983 book Frames of Mind, it revolutionised how we think about human intelligence. Instead of treating intelligence as a single quantity measured by an IQ score, Gardner proposed that individuals possess a diverse set of cognitive abilities, each its own kind of ‘smart’.

Gardner originally proposed seven intelligences: linguistic, logical-mathematical, spatial, bodily-kinesthetic, musical, interpersonal, and intrapersonal, and later added an eighth, naturalistic. He has since considered other ‘candidate’ intelligences, such as existential and moral intelligence, but has kept the principal list at eight.

Applying this model to AI development might steer us away from the quest for a solitary AGI and towards engineering a collaborative system of multiple AI systems, each a master of its own domain, whose integration would create a whole far greater than the sum of its parts.

CORE PRINCIPLE OF MULTIPLE AIs

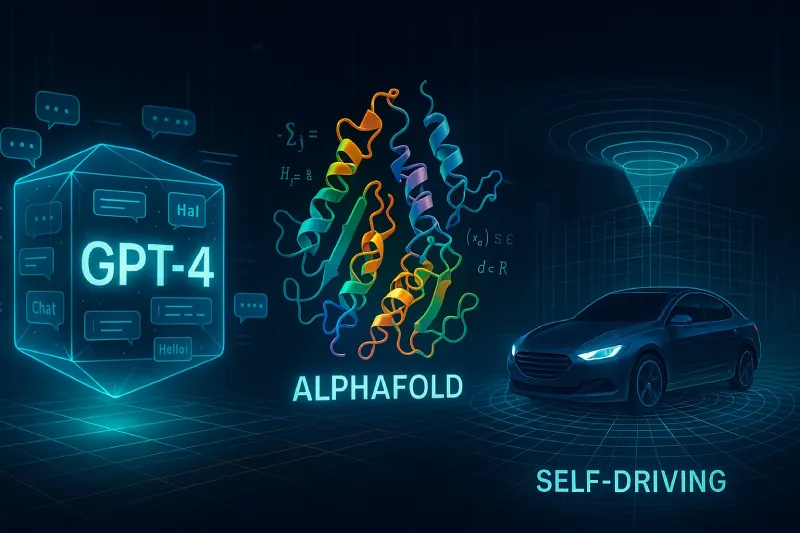

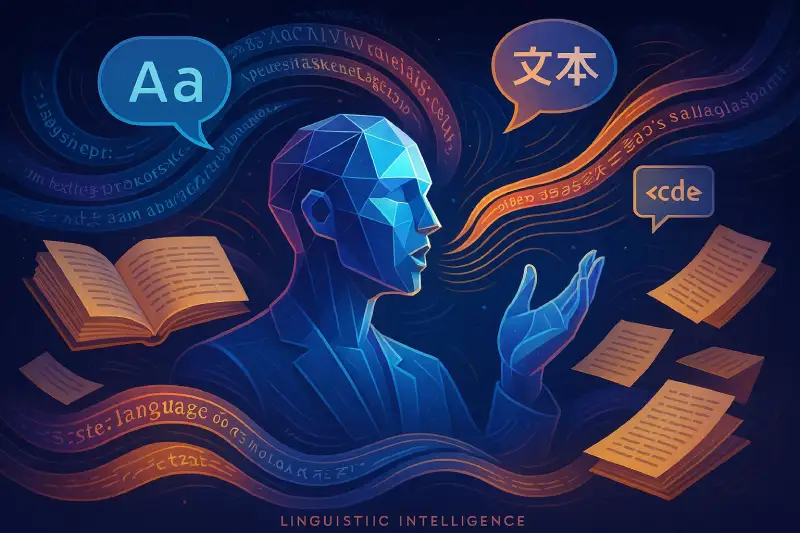

Look across today’s AI landscape and you’ll find that, in a way, the field is already, unwittingly, illustrating this approach: not one but thousands of AI models are at work, each an expert in its own domain. A large language model like GPT-4 excels at linguistic tasks such as conversation, translation, and writing, demonstrating a form of Linguistic Intelligence.

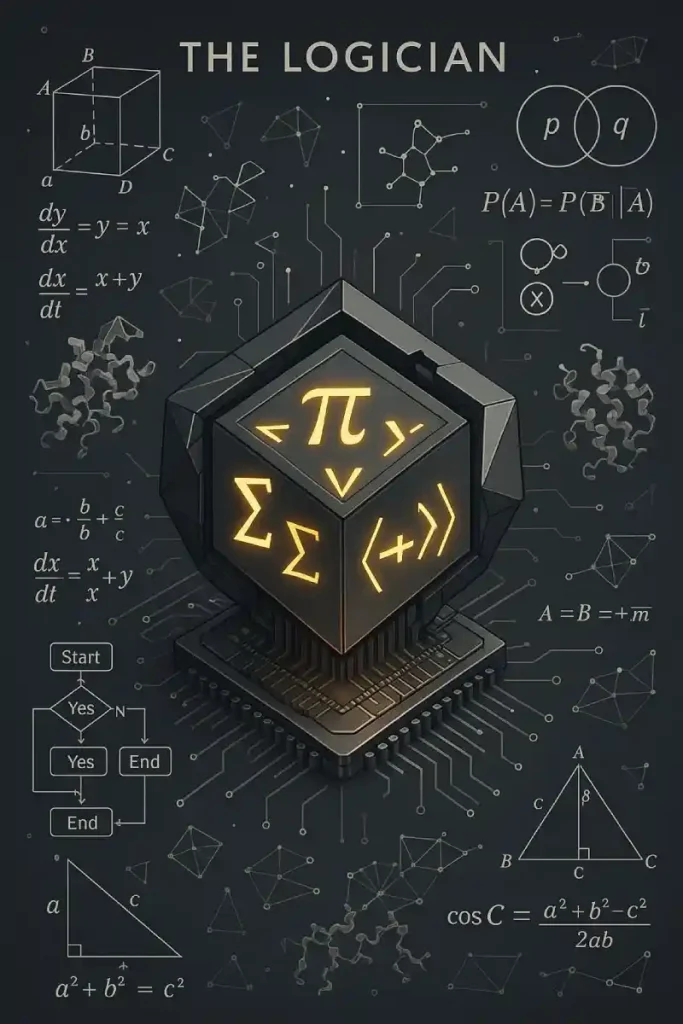

Then there’s DeepMind’s AlphaFold, which predicts protein structures with astonishing accuracy, an instance of Logical-Mathematical Intelligence. And the AI systems in self-driving cars, fed by Lidar and Radar, are a clear example of Spatial Intelligence.

On their own, these are just advanced AI systems excelling in their own domains: savants, incredibly powerful within their narrow focus but utterly incapable of stepping outside it. Many LLMs, for instance, have been shown to stumble on basic maths and even on counting. An LLM can only repeat what its training data says about physical space, without understanding it the way the AI in a self-driving car does, while a protein-folding AI can’t chat with you.

Thus, the path to AGI may not be about making an LLM better at folding proteins or having an LLM drive your car. It may instead be about building a meta-cognitive framework that houses these different AI systems and, when confronted with a problem, identifies the appropriate specialised “expert” in its toolkit, synthesises the results, and applies them to the novel situation. This is the essence of Gardner’s theory applied to engineering: general intelligence emerges from the orchestration of specific intelligences, just as, in his view, human intelligence does.

THE AGI COUNCIL

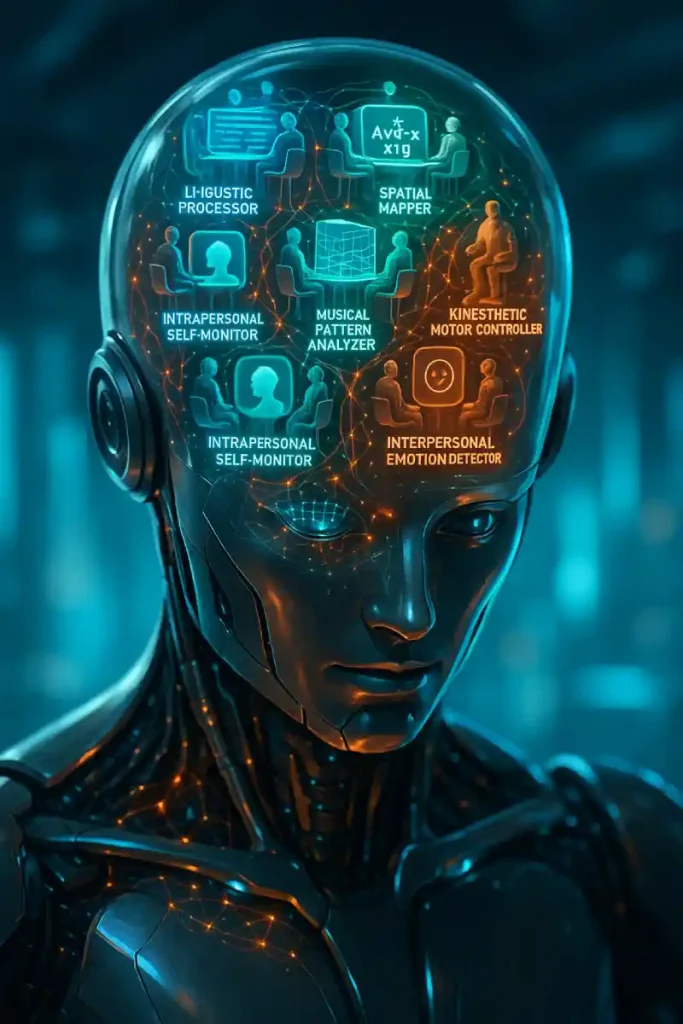

Let us apply this idea in a thought exercise. Imagine a single robot housing not one but at least eight AI systems, one for each of Gardner’s intelligences, along with the sensors those intelligences need, such as Radar, Lidar, and cameras, and, of course, the usual AI chips.

In this council of eight inside the robot’s brain, the ‘consciousness’ of the AGI would be the process that links these systems together: moderating and integrating their different intelligences, inputs, and outputs, and reaching a final decision on a given problem after listening to all these voices.
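One simple way to picture the moderator “listening to all these voices” is a confidence-weighted vote. The Python sketch below is an illustrative assumption, not a design from Gardner or from any AGI project: the `Opinion` type, the module names, and the trust `weights` are hypothetical. Each module proposes an answer with a confidence score, and the answer with the most weighted support wins.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Opinion:
    module: str        # hypothetical council member, e.g. "spatial"
    answer: str        # the decision this module recommends
    confidence: float  # self-reported confidence in [0, 1]


def council_decision(
    opinions: List[Opinion], weights: Optional[Dict[str, float]] = None
) -> Tuple[str, float]:
    """Return the answer with the most weighted support, and its share of all support."""
    if not opinions:
        raise ValueError("the council needs at least one opinion")
    weights = weights or {}
    support: Dict[str, float] = defaultdict(float)
    for op in opinions:
        # Each voice counts in proportion to its confidence and the trust placed in it.
        support[op.answer] += weights.get(op.module, 1.0) * op.confidence
    best = max(support, key=support.get)
    total = sum(support.values())
    return best, (support[best] / total if total else 0.0)


opinions = [
    Opinion("spatial", "stop", 0.9),
    Opinion("linguistic", "proceed", 0.6),
    Opinion("interpersonal", "stop", 0.5),
]
print(council_decision(opinions))
```

Returning the winning share alongside the answer lets the moderator notice when the council is split, rather than acting on a narrow majority.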

The first module is Linguistic AI, the orator. This is the advanced LLM, trained on human language, whose role in the AGI council is to handle all communication: parsing user input, generating human-readable output, writing code, and summarising complex data from other modules into coherent narratives. It is the voice and ears of the system.

The second, Logical-Mathematical AI, is the logician: the master of logic, abstraction, and calculation, built from symbolic reasoning engines, theorem provers, and systems like AlphaFold. Its role is to handle structured problem-solving, algorithmic execution, data analysis, and deductive reasoning. When the AGI needs to calculate probabilities, solve a complex equation, or work through a logical puzzle, this is the module it delegates the task to.

The third would be Spatial AI, or the navigator, which understands the physical world in visual and geometric terms thanks to advanced computer vision systems, 3D modelling engines, Lidar and Radar inputs, and so on. This allows the AGI to interpret visual data, navigate environments (whether virtual, or physical via robotics), manipulate objects in space, and reason about geometry and perspective.

Fourth is Bodily-Kinesthetic AI, or the artisan: the control system for fine and gross motor skills, translating high-level goals (“pick up that delicate glass”) into precise, coordinated physical actions while managing balance, force, and tactile feedback. It would matter even for a disembodied AGI, which would need this module to simulate physical interactions or design physical objects in digital space.

The fifth is Musical AI, the composer or artist. It may seem niche, but musical intelligence, and I’d say every art form, is about pattern, rhythm, and emotional resonance; breaking these elements creatively is often what we call ‘art’. A specialised AI in this domain could handle the AGI’s understanding of auditory patterns beyond speech, manage rhythm and timing in processes, and even generate creative, aesthetically pleasing structures: skills useful for any task that needs a sense of harmony, timing, and pattern recognition, including in non-auditory data.

The sixth, Interpersonal AI or the empath, would arguably be both critical and one of the most challenging modules to build. Today, LLMs take care of this function, but we’d need a module trained exclusively to understand human emotions, social cues, motivations, and relationships. It would analyse tone of voice, facial expressions (via computer vision), and cultural context to infer the emotional state and intentions of the person it is interacting with, and advise the central moderator on how to respond in a socially appropriate and effective manner.

The seventh, Intrapersonal AI or the self-reflector, is akin to explainable AI (XAI) systems, except that it would have the capacity to understand itself: to be ‘self-aware’ and to have metacognition. This module’s job would be to monitor the internal state of the entire AGI system: the confidence levels of the other modules, resource allocation, histories of success and failure, and the system’s overarching goals.

It would allow the AGI to reflect on its own performance, learn from its mistakes, and understand its own strengths and limitations.
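The bookkeeping side of this self-reflector can be sketched in a few lines of Python. This is a hypothetical illustration: `SelfMonitor`, `ModuleRecord`, and the Laplace-smoothed success rate are assumptions chosen for simplicity, and real metacognition would involve far more than counting outcomes. The monitor logs each module’s successes and failures, estimates how reliable each one is, and flags the weakest.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ModuleRecord:
    successes: int = 0
    failures: int = 0

    def reliability(self) -> float:
        # Laplace-smoothed success rate: a module with no history starts at 0.5.
        return (self.successes + 1) / (self.successes + self.failures + 2)


@dataclass
class SelfMonitor:
    # Hypothetical per-module history, keyed by module name.
    records: Dict[str, ModuleRecord] = field(default_factory=dict)

    def log(self, module: str, success: bool) -> None:
        rec = self.records.setdefault(module, ModuleRecord())
        if success:
            rec.successes += 1
        else:
            rec.failures += 1

    def trust_weights(self) -> Dict[str, float]:
        return {name: rec.reliability() for name, rec in self.records.items()}

    def weakest(self) -> str:
        # The module the system should currently trust least.
        return min(self.records, key=lambda name: self.records[name].reliability())


monitor = SelfMonitor()
for outcome in (True, True, False):
    monitor.log("navigator", outcome)
monitor.log("empath", False)
print(monitor.trust_weights(), monitor.weakest())
```

Smoothing keeps a module with little history near a neutral 0.5 instead of treating it as perfect or useless; scores like these could serve as trust weights when the moderator weighs the modules’ opinions.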

Finally, the eighth intelligence in Gardner’s theory gives us the Naturalist AI. In Gardner’s terms, naturalistic intelligence recognises and classifies patterns in nature. For our AGI, this module would be a classifier that performs the same function in the digital world while coordinating with the physical one. This is the master classifier, routing problems to the correct specialist module and understanding the broader ecosystem of information in which a problem exists.
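As a toy stand-in for this master classifier, the Python sketch below routes a query to whichever specialist’s cue words it mentions most. The `ROUTES` table and module names are hypothetical, and keyword matching is a deliberately crude substitute for the learned classifier the paragraph envisages.

```python
import re
from typing import Dict, Set

# Hypothetical cue words per specialist module; purely illustrative.
ROUTES: Dict[str, Set[str]] = {
    "linguistic": {"translate", "summarise", "write", "explain"},
    "logical": {"calculate", "probability", "prove", "equation"},
    "spatial": {"map", "navigate", "distance", "obstacle"},
    "interpersonal": {"feel", "upset", "angry", "mood"},
}


def route(query: str, default: str = "linguistic") -> str:
    tokens = set(re.findall(r"[a-z]+", query.lower()))
    # Score each module by how many of its cue words appear in the query.
    scores = {module: len(tokens & cues) for module, cues in ROUTES.items()}
    best = max(scores, key=scores.get)
    # Fall back to the default module when no cue word matches at all.
    return best if scores[best] > 0 else default


print(route("Calculate the probability of rain tomorrow"))
```

A query that matches no cue falls back to the linguistic module, on the assumption that the orator is the best generalist to ask the user for clarification.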

THE CONDUCTOR AT THE CENTER

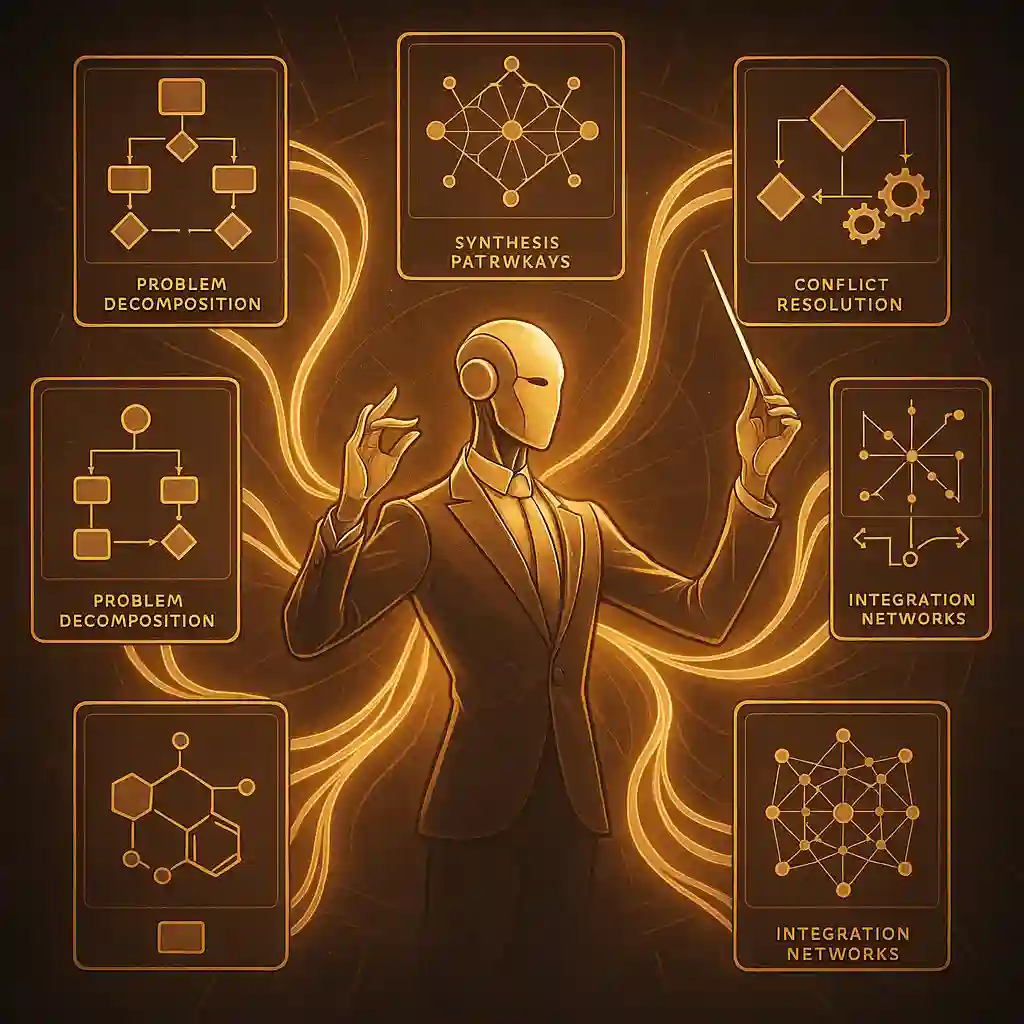

The mere existence of these eight modules in a robot or AI system isn’t enough. The magic, and, in sci-fi terms, AC (artificial consciousness), could happen in the broader integration layer. Call it the conductor of the orchestra: the meta-cognitive integration engine. This system would perform a continuous, dynamic ballet of cognition through:

- Problem decomposition: breaking a user’s request down into its component parts.
- Module selection: identifying which expert AI system each part needs.
- Orchestration and synthesis: gathering the results and weaving them into a coherent, unified solution.
- Conflict resolution: reaching a solution when different AI systems return differing answers to the same query.
- Learning and adaptation: analysing the final result and drawing lessons from it, both for future reference and to improve the collaborative process.
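Decomposition, module selection, synthesis, and a rudimentary form of learning can be sketched as a minimal Python pipeline. Everything here is an assumption for illustration: the registry format, the naive split on " and " for decomposition, cue-word matching for module selection, and plain string joining for synthesis. Conflict resolution is left out, and “learning” is reduced to logging which module handled which sub-task.

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical registry: module name -> (cue words, handler). Purely illustrative.
Registry = Dict[str, Tuple[Set[str], Callable[[str], str]]]


def decompose(request: str) -> List[str]:
    # Problem decomposition (naive): split a compound request on " and ".
    return [part.strip() for part in request.split(" and ") if part.strip()]


def select(subtask: str, registry: Registry, fallback: str) -> str:
    # Module selection: first module whose cue words appear in the sub-task.
    words = set(subtask.lower().split())
    for name, (cues, _handler) in registry.items():
        if words & cues:
            return name
    return fallback


class Conductor:
    def __init__(self, registry: Registry, fallback: str) -> None:
        self.registry = registry
        self.fallback = fallback  # must be a key in the registry
        self.log: List[Tuple[str, str]] = []  # (sub-task, module) pairs for a later learning step

    def handle(self, request: str) -> str:
        outputs = []
        for sub in decompose(request):
            name = select(sub, self.registry, self.fallback)
            _cues, handler = self.registry[name]
            outputs.append(handler(sub))
            self.log.append((sub, name))
        # Synthesis is plain joining here; a real integrator would reconcile the outputs.
        return "; ".join(outputs)


registry: Registry = {
    "logician": ({"calculate", "solve"}, lambda s: f"logician handled: {s}"),
    "orator": ({"translate", "summarise"}, lambda s: f"orator handled: {s}"),
}
conductor = Conductor(registry, fallback="orator")
print(conductor.handle("calculate 2 plus 2 and translate hello to French"))
```

Keeping the log separate from the handlers means a later learning step could revise the routing without touching any specialist.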

This integrator, an idea borrowed from existing proposals for modular AGI, is not necessarily a ninth form of intelligence but the emergent set of rules, communication protocols, and executive functions that govern the council. Developing it is the fundamental hurdle: it requires a revolution in how we get AIs to talk to each other meaningfully, not just exchange data.

BENEFITS OF A MODULAR AGI APPROACH

Such a federated, modular approach to AGI could offer significant advantages over the pursuit of a monolithic AGI. These include robustness and safety, since the failure of one module need not crash the entire system; explainability, since a system that audits its own processes can explain what it did and why; and fewer hallucinations, since multiple systems working in parallel, with their outputs collated and cross-checked, add checks and balances.
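The robustness and cross-checking claims can be illustrated with a small Python sketch. It is hypothetical: `cross_check`, the `quorum` parameter, and the choice to abstain are assumptions, and agreement between modules may reduce, but cannot eliminate, the risk of a confidently wrong answer, since modules can share the same blind spots. Every module answers the same query in parallel; a module that raises an exception is simply ignored, and an answer is accepted only if enough modules agree.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Optional


def cross_check(
    query: str, modules: Dict[str, Callable[[str], str]], quorum: int = 2
) -> Optional[str]:
    """Ask every module; accept an answer only if at least `quorum` modules agree."""

    def safe_call(fn: Callable[[str], str]) -> Optional[str]:
        try:
            return fn(query)
        except Exception:
            return None  # a failed module is ignored rather than crashing the system

    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(safe_call, modules.values()))
    counts = Counter(a for a in answers if a is not None)
    if not counts:
        return None
    answer, votes = counts.most_common(1)[0]
    # Below quorum, abstain instead of passing on a possibly hallucinated answer.
    return answer if votes >= quorum else None


modules = {
    "logician": lambda q: "42",
    "orator": lambda q: "42",
    "navigator": lambda q: "41",
}
print(cross_check("What is 6 x 7?", modules))
```

Returning `None` when the quorum is not met lets the conductor ask for more information instead of relaying an answer that only one module vouches for.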

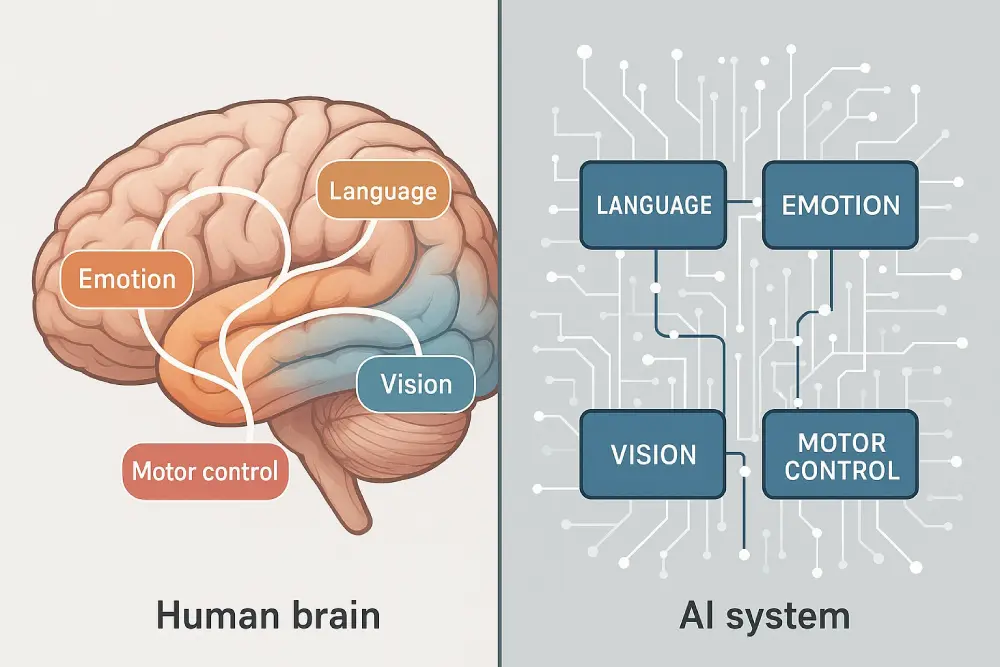

Perhaps this is the way to better machine intelligence, mirroring the human brain: a modular organ whose regions specialise in tasks such as language, emotion, vision, and motor control, with our general intelligence arising in large part from the white matter, the connective tissue that links these regions together.

Encouragingly, some groups are already working on such a modular approach. One, from Georgia, proposes an orchestrating LLM that breaks a vague or complex user query into smaller, manageable sub-problems, assigns them to specialised agents, and then synthesises their outputs into a coherent solution. Another is the OVADARE framework, designed specifically for detecting and resolving conflicts in multi-agent environments.

It seems, then, that we may already be on our way towards AGI, not through the lofty idea of one algorithm to rule them all, but via a sensible, modular approach to building such a system. Howard Gardner’s theory provides at least a good metaphor, if not a direct map, for this uncharted territory. It seems increasingly clear to many that the myth of a singular, monolithic superintelligence isn’t what it’s made out to be, and that the collaborative model of Multiple AIs may be what gets us there.

The idea is not to create a god-like intelligence, but to carefully craft a human-like one by connecting different specialised systems, so that, together, they achieve human-like understanding.
