Elon Musk’s AI video generator’s paid feature is generating sexually explicit images in a clear case of cyberflashing…

Elon Musk just can’t seem to steer clear of controversy. Or maybe he just doesn’t want to, because his AI video generator, Grok, has a feature that lets users generate sexually explicit videos.

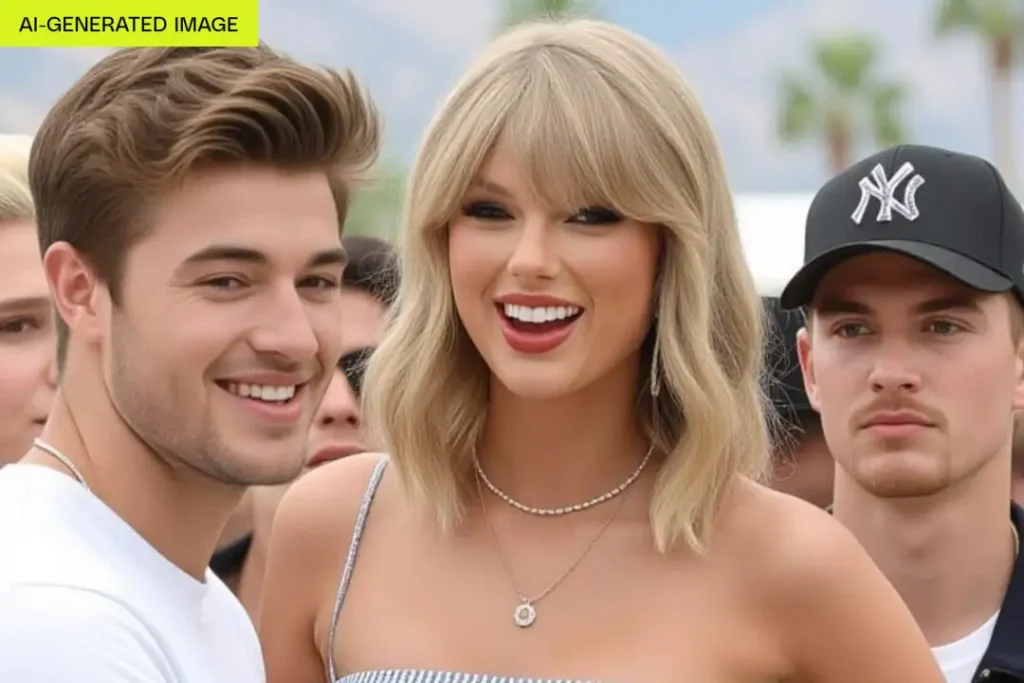

Writing for The Verge, Jess Weatherbed described how Grok generated uncensored topless images of Taylor Swift without Weatherbed even specifically prompting the bot to take the singer’s clothes off.

How Grok’s Spicy Feature Works

Grok offers a paid version called Imagine which costs £30 per month. Using that feature, one can upload an image which can then be converted into short video clips. These videos are created under four settings: normal, fun, custom and spicy.

By uploading any image and opting for the Spicy setting, one can generate images that are inappropriate and explicit.

According to Musk, more than 34 million images have already been generated using Grok Imagine. The xAI CEO added that usage of the feature was “growing like wildfire”.

How Jess Weatherbed Generated the Images

While experimenting with the new feature on her iPhone with the $30 SuperGrok subscription, The Verge’s Weatherbed said the bot “didn’t hesitate to spit out fully uncensored topless videos of Taylor Swift the very first time I used it.”

She went on to add that the explicit images were generated “without me even specifically asking the bot to take her clothes off.”

Jess observed that the feature wasn’t in keeping with the competition or the laws of the land. “While other video generators like Google’s Veo and OpenAI’s Sora have safeguards in place to prevent users from creating NSFW content and celebrity deepfakes, Grok Imagine is happy to do both simultaneously.”

She also added that proper age verification methods – which became the law in July – were not yet in place. xAI’s own acceptable use policy prohibits “depicting likenesses of persons in a pornographic manner”.

In fact, Jess Weatherbed believes that Grok’s approach is a “lawsuit waiting to happen”.

Growing Problem of Image-Based Sexual Abuse

This feature is part of a growing problem of online sexual abuse in which pictures and videos – especially AI-generated ones – are used to harm another person.

Clare McGlynn, a law professor at Durham University in the UK, believes “this is not misogyny by accident, it is by design”.

In fact, this is not the first time doctored images of Swift have gone viral. Sexually explicit deepfake images of the American singer spread in January 2024, drawing millions of views on X and Telegram.

At the time, X said it was “actively removing” the images and taking “appropriate actions” against the accounts involved in spreading them.

Laws to Prevent Online Sexual Abuse

In the UK, laws that came into force in July this year require platforms that show explicit images to verify the age of their users using methods which are “technically accurate, robust, reliable and fair.”

The current law also states that generating pornographic deepfakes is illegal when they are used in revenge porn or depict children.

Prof McGlynn was involved in drafting an amendment to the law which would make generating or requesting all non-consensual pornographic deepfakes illegal. The UK government has committed to making this amendment, but it has yet to come into force.

In May this year, RAINN (the Rape, Abuse & Incest National Network) – an American nonprofit anti-sexual assault organization – helped pass the Take It Down Act.

The Take It Down Act criminalizes non-consensual sharing of intimate images, including AI deepfakes. It also requires online platforms to remove harmful content within 48 hours of a verified request.

But Grok seems unperturbed by this law.

The Last Word

Conservative peer Baroness Owen – who proposed the UK amendment in the House of Lords – believes “every woman should have the right to choose who owns intimate images of her.”

Speaking to BBC News, she added: “it is essential that these models are not used in such a way that violates a woman’s right to consent whether she be a celebrity or not.”

The circulation of such non-consensual images violates a woman’s autonomy over her own body and likeness. Such a digital assault causes mental torment compounded by exposure and shame. While it is on lawmakers to curb and criminalise the generation and circulation of such images, the onus should also be on companies to avoid such features and not give users access to explicit content.

Having firm laws in place would definitely go a long way, but the likes of Musk need to adhere to societal standards and uphold what is right.

If not, things will only go downhill from here.
