Generative AI chatbots might be helping transform the business landscape, but they aren’t without their problems. One of their most significant issues is that, when asked a question, they can present inaccurate or plain made-up information as if it were correct, and the results are amusing, to say the least. For instance, when someone asked ChatGPT a ridiculous question about when the Golden Gate Bridge was transported across Egypt, it spouted an entirely unbelievable date of October 2016.

These mistakes occur up to 20% of the time and are known as AI hallucinations. They tend to happen when AI models are given too much freedom, so they end up providing inaccurate or contradictory information, which raises ethical concerns and erodes trust. So, can we use generative AI and still get good-quality answers? The answer is yes, and this article delves into specific ways to reduce these hallucinations. It’s all about how one asks the questions.

Avoiding vagueness and ambiguity

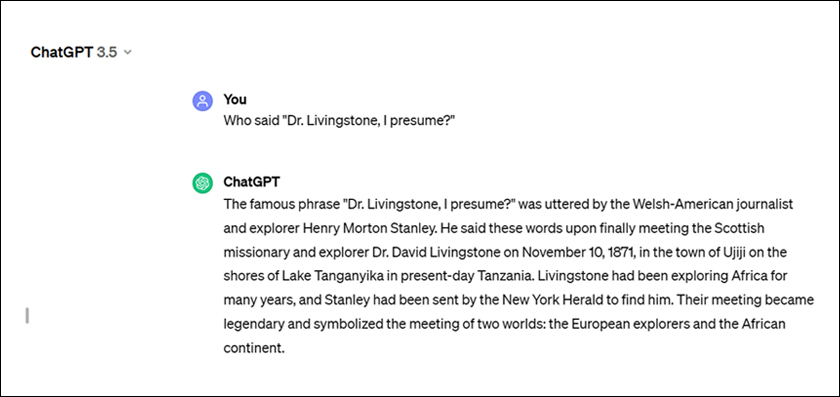

Interestingly, AI doesn’t respond well to imprecise or unclear prompts. When a prompt doesn’t provide sufficient detail, it gives the AI room to confabulate and fill in the details that were left out. For instance, when asked who said, “Dr. Livingstone, I presume?”, ChatGPT 3.5 immediately came back with this widely known answer:

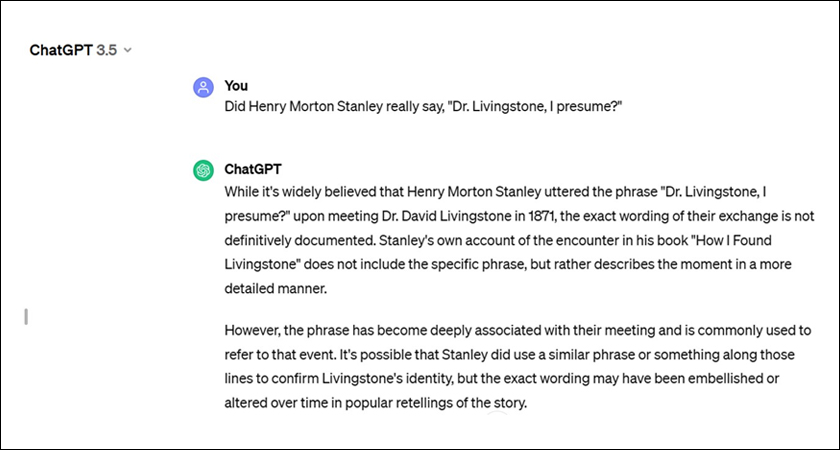

However, when we specifically asked whether Henry Morton Stanley really said those words, this is what the answer was:

In this prompt, the ambiguity was buried in the details. While the first prompt was open-ended, the second prompt asked for more specific, verifiable information and thus returned an answer that was different from the first one.

Experimenting with Temperature

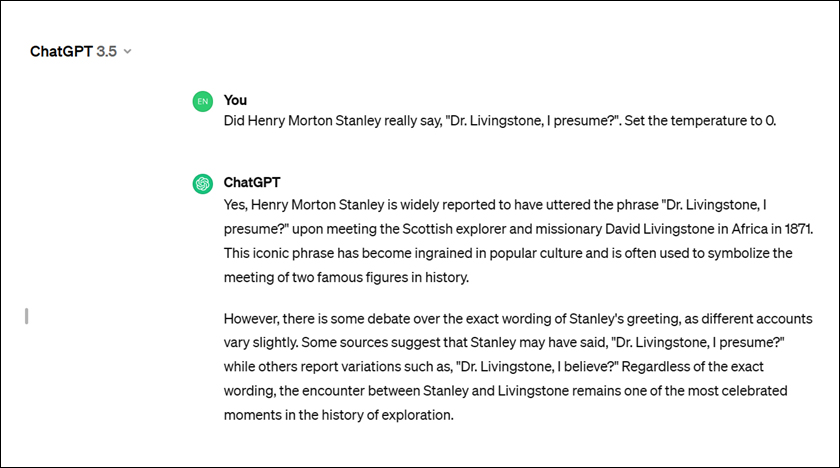

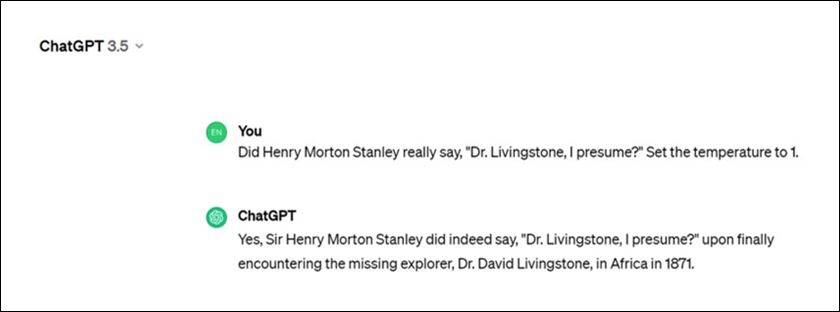

Temperature settings also play a big part in AI hallucinations. The lower a model’s temperature setting, the more conservative and predictable its answers are, which usually makes them more accurate as well. The higher the temperature setting, the more creative and random the answers get, making the model likelier to “hallucinate.”

This is what happened when we tested ChatGPT 3.5 with two different temperature settings. As you can see, raising the temperature setting to 1 changed the model’s answer to the same question it had been asked earlier with a temperature setting of 0.
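To see why temperature has this effect, here is a minimal sketch of how a temperature value is commonly applied when a model picks its next word. It is a simplification and not ChatGPT’s actual code: the function name `softmax_with_temperature` and the three candidate scores are made up for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw candidate scores into probabilities, scaled by temperature."""
    # Assumption: this sketch only handles temperature > 0. A setting of 0
    # is usually treated as "always pick the top-scoring candidate".
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # conservative setting
high = softmax_with_temperature(logits, 1.0)  # more random setting

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the lower setting, almost all of the probability lands on the top-scoring candidate, so the model rarely strays from it. At the higher setting, the other candidates get a real chance of being picked, which is where the extra creativity, and the extra risk of hallucination, comes from.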

Limiting the Possible Outcomes

Open-ended essay questions tend to give us much more freedom than multiple-choice questions. The former can end up producing random and maybe even inaccurate answers, while the latter basically keeps the correct answer right in front of you. Essentially, a multiple-choice question homes in on the knowledge that’s already “stored” in your brain, allowing you to use the process of elimination to deduce the right answer.

When communicating with the AI, you must harness that “existing” knowledge.

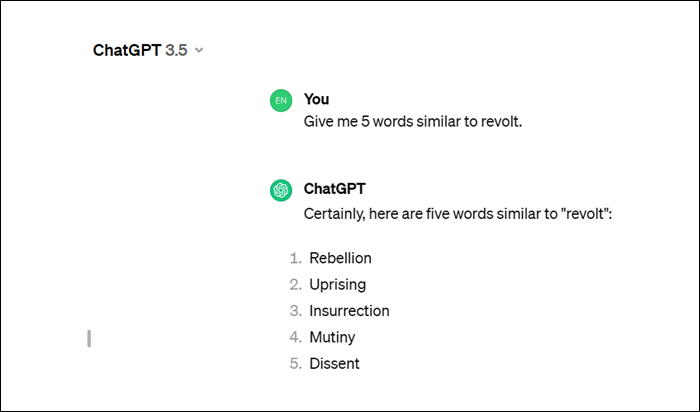

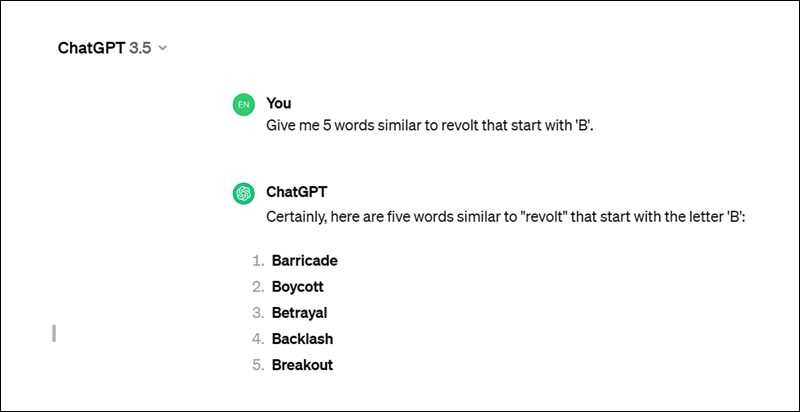

Take the above open-ended prompt, for example. We tried to limit the possible outcomes further by specifying what words we wanted in a second prompt below:

Since we wanted words similar to revolt that start only with a specific letter, the second prompt pre-emptively ruled out the information we didn’t want. Of course, chances are that the AI could still get sloppy with its version of events, so asking it to exclude specific results pre-emptively could get you closer to the truth.
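If you send prompts from code rather than a chat window, the same idea can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the helper names `build_constrained_prompt` and `filter_valid`, the letter “R”, and the sample reply are hypothetical, and no real chatbot is called here.

```python
def build_constrained_prompt(word, letter, count):
    """Write a prompt that narrows down what the AI is allowed to return."""
    return (
        f"List {count} words similar to '{word}'. "
        f"Every word must start with the letter '{letter.upper()}'. "
        "Return one word per line and nothing else."
    )

def filter_valid(answer_text, letter):
    """Keep only the returned lines that actually respect the constraint."""
    words = [line.strip() for line in answer_text.splitlines() if line.strip()]
    return [w for w in words if w.lower().startswith(letter.lower())]

prompt = build_constrained_prompt("revolt", "r", 3)
print(prompt)

# Hypothetical reply from a chatbot, including one word that breaks the rule.
reply = "Rebellion\nRiot\nUprising\nRevolution"
print(filter_valid(reply, "r"))
```

The prompt limits the possible outcomes up front, and the filter catches anything that slips through anyway, such as “Uprising” in the sample reply.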

Merging unrelated concepts or bending realities

As someone who enjoys science fiction to a fault, I love everything from alternate realities to speculative scenarios. However, prompts that merge unrelated concepts or mix universes, timelines, or realities throw AI into a tizzy. So, when you’re trying to get clear answers from an AI, avoid prompts that blend incongruent concepts or elements that might sound plausible but are not. Since AI doesn’t actually know anything about our world, it will essentially try to answer in a way that fits its model. And if it can’t do so using actual facts, it’ll attempt to answer anyway, resulting in hallucinations and fabrications.

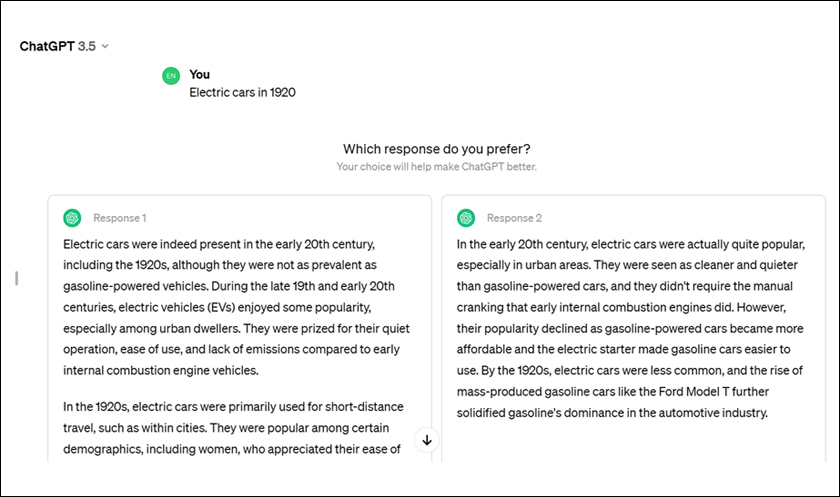

However, there’s a catch. Take a look at this prompt, where we asked about electric cars in 1920. While most would laugh off the idea, electric cars, a seemingly modern innovation, were first invented way back in the 1830s. Furthermore, the AI gave us two responses. The one on the left explains the concept in detail, while the one on the right is concise but misleading, despite being factually accurate.

Verify, verify, verify

Even though AI has made giant strides in the last few years, it can still turn into a comedy of errors thanks to its overzealous storytelling. AI research companies such as OpenAI are acutely aware of the issues with hallucinations and are continuously developing new models that rely on human feedback to improve the whole process. Even then, whether you’re using AI to conduct research, solve problems, or even write code, the point is to keep refining your prompts using the techniques we outlined above, which will go a long way in helping AI do its job better. And above all, you’ll always need to verify each output.
