How many of us, if we got the chance, would use generative artificial intelligence (AI) to write an essay or finish an assignment? Would it pinch your conscience?

On the other hand, what’s worse is working hard to write an entirely original class assignment, only to have it called out and accused of being AI-generated. The AI crisis is two-sided, it has reached education, and it’s real.

The Evolving Landscape of AI Cheating

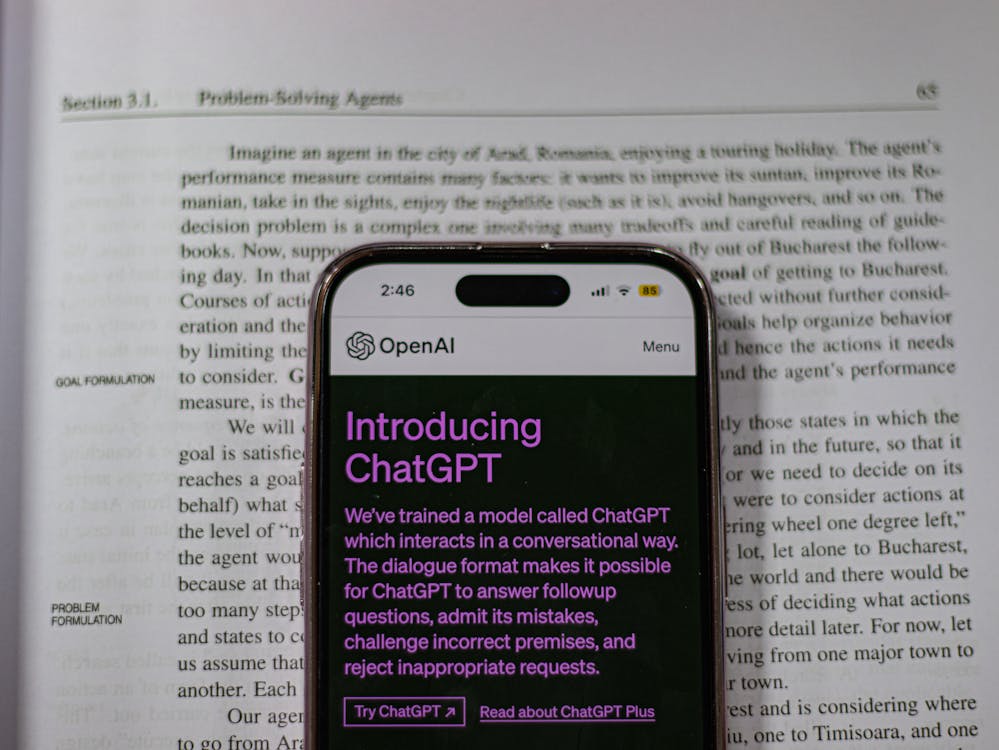

Just one week after ChatGPT made its way into the world in November 2022, The Atlantic made a bold declaration for that time – “The College Essay Is Dead.” Educators mourned the death of originality and skillsets, and they searched for ways to battle what would become a full-blown crisis. Nearly two and a half years later, ChatGPT has shaken numerous industries, and education is no different.

Today, it’s possible for almost anyone to enter a few basic inputs and produce passable written work within a matter of seconds. LLM (large language model) tools like Perplexity, Claude, Microsoft Copilot, and Google Gemini absorb and process huge amounts of data to generate new material.

The first year of the “generative AI college” happened at a vulnerable time, right after the pandemic. It might have ended in a feeling of dismay for educators, but now the situation has devolved into absurdity. Teachers are struggling to teach, even as they wonder whether they’re grading students or computers.

All the while, an endless loop of AI cheating and detection plays out in the background. As fairy godmother-esque as AI has been for students, it’s become a nightmare for educators.

Collateral Damage

Is ChatGPT the actual problem universities need to grapple with, or is it something else altogether? As the AI student cheating crisis deepened, technologists and educators began to deal with the problem by using anti-cheating software. AI writing detection tools like GPTZero and Turnitin scan submissions for signs of AI-generated writing. Since the idea is to counteract a surge in AI-written assignments, this certainly seemed like a miracle drug.

The problem? They’re not 100% reliable. There have been widely reported cases of false positives, and even an error rate of less than 1% adds up to a large number of wrongly flagged students when applied across an enormous student population.
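To see why a small error rate still matters at scale, here is a rough back-of-the-envelope sketch. The submission volumes and the 1% rate are illustrative assumptions, not figures from any detector vendor or university, and `expected_false_flags` is a hypothetical helper written for this example.

```python
def expected_false_flags(honest_submissions, false_positive_rate):
    """Estimate how many honest submissions would be wrongly flagged."""
    return round(honest_submissions * false_positive_rate)


# Hypothetical volumes of human-written submissions (assumed for illustration).
for volume in (1_000, 100_000, 10_000_000):
    # Assumed 1% false-positive rate; real rates vary by tool and by text.
    flagged = expected_false_flags(volume, 0.01)
    print(f"{volume:>10,} honest submissions -> about {flagged:,} wrongly flagged")
```

The point of the arithmetic is that the percentage stays small while the number of affected students grows with every assignment that gets scanned.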

The result is that many students who haven’t used AI at all have found themselves in the line of fire, accused of cheating. Some were accused for using signpost phrases like “in contrast” and “in addition to.” Others have been put on academic probation for proofreading their work with Grammarly, an AI-powered spellcheck plug-in, despite it being listed as a recommended resource.

Students who haven’t used AI for their work have become the biggest collateral damage of the AI student cheating crisis. False allegations bring concerns about forfeiture of academic and merit scholarships and loss of visas for international students, hugely impacting their mental health, their well-being, and their future. So much so that students are now being bombarded with advice on what to do if they’ve been falsely accused of using AI to cheat.

The Problem Continues

The problem with relying on AI detection tools, which is now widespread, is that students who use AI to cheat aren’t simply cutting and pasting text from ChatGPT. Instead, they’re editing it, moulding it into their own work. As if that weren’t enough, there are now even AI “humanisers,” such as StealthGPT and CopyGenius, which “humanise” AI-written text! Some students are at a disadvantage here too, as not everybody can pay for the most advanced AI tools.

Another finding is that even fairly accurate AI detectors fail outright at times when faced with technical challenges and simple evasion techniques that wouldn’t trouble human evaluators. For instance, using homoglyphs, removing grammatically correct articles, selectively paraphrasing, introducing misspellings, and adding whitespace to the text saw accuracy drop to as low as 22.1%.
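Homoglyph and whitespace tricks work because the altered text looks identical to a human reader but differs at the character level. As a rough illustration, the sketch below uses Python’s standard `unicodedata` module to list such characters in mostly English text. The function name `find_suspicious_chars` and its flagging rules are assumptions made for this example, not a description of how GPTZero, Turnitin, or any other detector works, and the check would also flag legitimate non-Latin words or names.

```python
import unicodedata


def find_suspicious_chars(text):
    """Return (index, char, unicode_name) for characters that may be evasion artifacts."""
    findings = []
    for index, char in enumerate(text):
        if char.isascii():
            continue  # plain ASCII is never flagged in this sketch
        name = unicodedata.name(char, "UNKNOWN")
        category = unicodedata.category(char)
        # A letter from a non-Latin script inside Latin text may be a homoglyph.
        is_foreign_letter = category.startswith("L") and not name.startswith("LATIN")
        # Format characters (category Cf) include zero-width spaces and joiners.
        is_invisible = category == "Cf"
        if is_foreign_letter or is_invisible:
            findings.append((index, char, name))
    return findings


# "ess\u0430y" looks like "essay", but its fourth letter is a Cyrillic "a".
for index, char, name in find_suspicious_chars("My ess\u0430y has a hidden\u200b space."):
    print(index, repr(char), name)
```

A human marker reading the sample sentence would notice nothing unusual, which is why these edits can throw off software without bothering a person.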

That’s not all, unfortunately. There’s also some evidence to suggest that AI detection tools disadvantage some demographics. For instance, a Stanford study found that numerous AI detectors flagged the work of non-native English speakers 61% of the time, compared with just 5% of the time for native English speakers.

In November 2024, Bloomberg Businessweek reported a case where a detection tool falsely flagged the work of a student with autism spectrum disorder as being AI-written. Even those students who write using simpler syntax and language, and neurodivergent students, seem to be disproportionately affected by these detection tools.

The Bottom Line

The fact is that academic cheating has always existed, but it is now being propelled forward by technology. AI has infested higher education, hollowing out sound structures from within until collapse seems imminent. This reflects both the pressure on students to excel and the growing sophistication of AI.

In the struggle to undo the damage, educators and students are now deadlocked in an escalating technological arms race. Cheating or no cheating, the atmosphere of suspicion on campuses is here to stay. The larger issue, though, is that higher education is now left feeling so very impersonal.
