“AI has come alive” has been the world’s refrain since a Google researcher claimed so. But has it really? Satyen K. Bordoloi wonders, as he delves into the reasons for our fetish for sentient AI.

“It’s alive! It’s alive!” These portentous words from the 1931 movie Frankenstein best captured the prevailing sentiment about Artificial Intelligence over the past week. It was prompted by Google employee Blake Lemoine, who was so flabbergasted by the responses of LaMDA, a language model AI, to his questions that he went rogue and released his conversations with it to the world. Google, for its part, responded by putting Lemoine on leave, giving fodder to AI alarmists.

Is AI alive? We have been grappling with this question for over half a century, in countless books, movies and series dedicated to it. Today, with computing power having grown so much, and with it AI, Machine Learning (ML) and Deep Learning (DL), and with Quantum Artificial Intelligence possibly around the corner, it is important to tackle this question.

To anyone who’s ever dived a little below the surface hype, AI, thanks to ML and DL, is nothing but a new way of computing. Those who go deeper know it is not even that new: the foundational ideas behind neural nets and ML were outlined back in the 1950s.

What is new, though, is hardware, and it explains why AI, despite many promises and heavy investment, especially in the 1970s, did not burst onto the scene until 2011. When computing hardware grew dramatically more powerful from the 1990s onward, leading to amazing devices, there was finally enough computing power to put AI theories to the test. The company that did it first, best and most was Google.

The other thing to understand about AI is that, despite what alarmists claim, the neural nets that make up AI copy only a very small part of how the human brain works. Instead of a single, linear chain of logic, a neural net runs many nodes simultaneously, side by side, each feeding values to the others, shaped first by its initial programming and later by the tasks assigned to it.
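
To make the idea of many nodes working side by side and passing values to one another concrete, here is a minimal Python sketch of a tiny feedforward neural net. It is a hypothetical illustration, not code from LaMDA or any real AI system: the names `sigmoid`, `layer` and `forward` and all the weights are made up, whereas a real network learns its weights from data during training.

```python
import math

# Hypothetical toy network for illustration only. The weights below are
# invented; a real network learns them from data during training.

def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1): the node 'fires' more or less."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """Compute every node in one layer.

    Each node takes a weighted sum of ALL outputs of the previous layer,
    adds its bias and applies the activation. Nodes in the same layer do not
    depend on one another, so hardware such as GPUs can compute them at the
    same time; here they are simply computed one after another.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Made-up parameters: 2 inputs -> 3 hidden nodes -> 1 output node.
HIDDEN_W = [[0.5, -0.6], [0.9, 0.2], [-0.3, 0.8]]
HIDDEN_B = [0.1, -0.2, 0.0]
OUTPUT_W = [[1.2, -0.7, 0.5]]
OUTPUT_B = [0.05]

def forward(inputs):
    """Pass inputs through the hidden layer, whose outputs feed the output node."""
    hidden = layer(inputs, HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]

print(forward([1.0, 0.0]))
```

Each node only loosely echoes a brain cell: it adds up weighted inputs and passes on a stronger or weaker signal, which is about as far as the resemblance goes.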

Despite the hoopla surrounding them, neural nets, and thus AI, do not mimic even 1% of the human brain. This is mainly because we ourselves know little about how the human brain functions. We are still trying to understand how brain cells connect to do what they do and, most importantly, how they create this magic called consciousness. We are at least decades, if not centuries, away from understanding these complex functions.

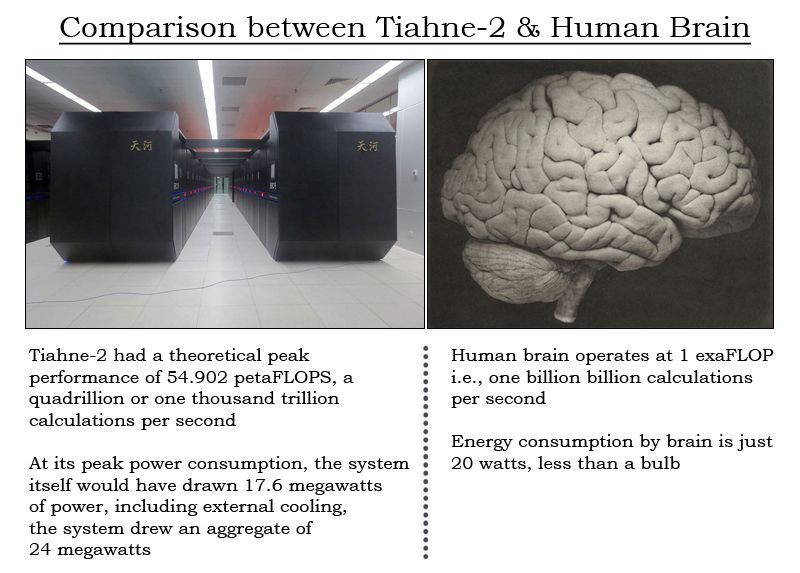

Let’s take just one example of how little we know about the human brain. The Tianhe-2 supercomputer in Guangzhou, China, can at its peak process 54.902 petaFLOPS, i.e. about 55 quadrillion (55 thousand trillion) calculations per second, drawing roughly 18 megawatts of power to do so. The human brain, meanwhile, is estimated by some to perform around 1 exaFLOP, i.e. one billion billion calculations per second, while consuming just 20 watts. How does it do it? We have some ideas, but not enough.
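
The gap in efficiency is easier to appreciate as arithmetic. The Python sketch below divides throughput by power for both. It assumes Tianhe-2 runs at its 54.902 petaFLOPS theoretical peak while drawing about 17.8 megawatts (its commonly reported power draw, an assumption not stated in this article), and it takes the contested estimate of 1 exaFLOP at 20 watts for the brain at face value.

```python
# Back-of-the-envelope energy-efficiency comparison.
# Assumptions: Tianhe-2 at its theoretical peak of 54.902 petaFLOPS drawing
# about 17.8 megawatts, and the (contested) estimate of 1 exaFLOP at
# 20 watts for the human brain.

PETA = 1e15
EXA = 1e18

def flops_per_watt(flops: float, watts: float) -> float:
    """Return computational throughput per watt of power drawn."""
    return flops / watts

tianhe2 = flops_per_watt(54.902 * PETA, 17.8e6)
brain = flops_per_watt(1 * EXA, 20.0)

print(f"Tianhe-2: {tianhe2:.2e} FLOPS per watt")
print(f"Brain:    {brain:.2e} FLOPS per watt")
print(f"Brain is roughly {brain / tianhe2:,.0f} times more efficient")
```

Under these assumptions the brain comes out roughly 16 million times more energy-efficient; the exact figure matters less than the orders of magnitude separating the two.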

What we have done with AI is mimic one small idea of how neurons, with their axons and dendrites, work in the human brain. AI thus does not copy the human brain even partially, only fractionally, and is nowhere near having the kind of consciousness humans have. Nor will it be for a few dozen or a few hundred years, until we unravel the mystery of the human brain and consciousness.

Why, then, were the Google researcher and so many others fooled? The problem with Blake Lemoine specifically is his religiosity: he claims that his religious beliefs convinced him that LaMDA has a soul. However, Lemoine is not the only one who thinks AI could become sentient soon.

The key to the mystery of sentient AI lies in an incorrigible human habit: anthropomorphizing, i.e. giving human characteristics to almost everything we can. Open any animation channel and you’ll see the extent to which we do so. Cars talk, and so do books in many of these ‘cartoons’. Rocks fight, and household objects like cups and saucers, tables and chairs have attitudes and opinions. From Walt Disney to today’s Pixar, whose mascots are an anthropomorphized mouse and desk lamp respectively, fortunes have been made on our ability to turn anything and everything human. Even gods have been created in our image, not the other way around.

Now imagine a poor guy, AI researcher or not, ‘chatting’ with an AI that spouts answers that are not only coherent but at times profound. To think of whatever is giving those answers in human terms is a very... human folly. And this happens even when one knows full well that the AI system is drawing on the humongous dataset it has been fed to assemble a coherent answer to the questions put to it. Early AI models gave gibberish answers, but as we have developed and honed the technology, AI has become so good that it fools its creators.
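
As a rough illustration of how a system can produce fluent text purely from patterns in its training data, here is a toy bigram model in Python. It is a hypothetical sketch, not how LaMDA works: LaMDA is a far larger Transformer-based neural network, and the names `train`, `generate` and the sample `corpus` are invented for this example. It only shows the basic idea of picking each next word based on what followed the previous word in the data.

```python
import random
from collections import defaultdict

# Toy bigram model for illustration only; real language models such as LaMDA
# are vastly larger neural networks, but they also predict the next word
# from patterns learned during training.

def train(text: str) -> dict:
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(model: dict, start: str, length: int, seed: int = 0) -> str:
    """Build a sentence by repeatedly sampling a word seen after the last one."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    words = [start]
    for _ in range(length - 1):
        options = model.get(words[-1])
        if not options:  # dead end: this word never had a successor in training
            break
        words.append(rng.choice(options))
    return " ".join(words)

corpus = "i feel happy when i talk to people . i feel like a person when i talk ."
model = train(corpus)
print(generate(model, "i", 8))
```

Even this toy can stitch together phrases it never saw verbatim, such as “i feel like a person when i talk to people”, which hints at why far larger models can produce answers that feel uncannily human.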

To give an analogy, an AI developer believing that AI has come alive is akin to a magician believing that his magic is real. What would you say to David Copperfield if he claimed that making the Statue of Liberty disappear was real and not a magic trick?

Blake Lemoine isn’t the only one to make outlandish claims about AI, be proven wrong and have to eat humble pie. The best example is Elon Musk, who has been warning of AI in apocalyptic terms while, ironically, making hundreds of billions of dollars by pushing AI in new directions. Yet, despite claiming repeatedly since 2014 that a fully self-driving car is less than a year away, he has not been able to crack AI for cars. Why? Because AI is not only not alive, it is also proving too dumb to drive a car entirely on its own, something even the dumbest human does easily.

What Musk has found, though he doesn’t admit it, is that it is incredibly tough to give AI even the basic sentience it would need to drive a car without supervision. AI is not alive, and making it act as if it were is currently the biggest challenge for Musk and every other AI company.

What Musk’s and the Google engineer’s fearmongering does instead is discourage people from investing in the research and development of AI. After over a decade of home runs, there is talk of another AI winter, with investment in key areas of AI drying up right when it is needed the most.

That LaMDA seems real even to one of the engineers working on it is something to be celebrated, not feared. It shows how far we have come with AI research and how far we can go if we stay on this path. If we can come so far by mimicking our brain so little, how much further can we go by mimicking it more? And, most importantly, how many of our global problems can such AI assistance solve?

Before we fearmonger against AI, we must remember one simple truth: AI is no longer a novelty; it is essential for human survival. The fate of humans, and of this planet, rests on how well we use AI to solve our problems.
