With climate change at the doorstep, Adarsh looks into the potential future of data centers

Citing environmental concerns, operational costs and the need for superfast connectivity, major market players like Microsoft and Google are looking to shift their data centers underwater.

A study by Datareportal earlier this year revealed that over 5 billion people around the world use the internet every day. In other words, 63.1 per cent of the world’s total population is accessing data online.

The study also revealed that internet users are increasing at an annual rate of almost 4 per cent. This means that two-thirds of the world’s population should be online sometime in the second half of 2023.
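The arithmetic behind that projection can be sketched roughly as follows. This is a minimal illustration, not Datareportal's method: it assumes the number of users compounds at exactly 4 per cent a year while the world's population is held constant, and the function name `years_to_reach_share` is made up for this example.

```python
import math


def years_to_reach_share(current_share, target_share, annual_growth):
    """Years of compound growth needed for current_share to reach target_share.

    Assumption (not from the study): total population stays constant,
    so the share of people online grows at the same rate as the user count.
    """
    if not 0 < current_share <= target_share:
        raise ValueError("shares must satisfy 0 < current_share <= target_share")
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    return math.log(target_share / current_share) / math.log(1 + annual_growth)


# 63.1 per cent online today, growing at roughly 4 per cent a year
years = years_to_reach_share(0.631, 2 / 3, 0.04)
print(f"About {years:.1f} years to reach two-thirds of the population")
```

Under these simplifying assumptions the answer comes out at a little under a year and a half. Factoring in population growth would push the date somewhat later.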

As the number of people accessing the internet continues to increase, the physical infrastructure that supports all that data needs to improve. Cloud computing is integral to the offerings of every software solutions provider and has seen a surge in demand over the last decade.

The Need to Go Underwater

The multitude of server networks in these data centers consumes a lot of power and pumps out a lot of waste. The servers are also high maintenance and suffer a lot of corrosion due to the oxygen and humidity on land. Temperature fluctuations and power failures further reduce the effectiveness of data centers, and the cost of maintaining them is very high.

That’s not all! Another area of concern is the scarcity of space. With the surge in the need for more data centers around the globe, on-land options are bound to run out in the next 10 years.

This is why the outrageous idea of underwater data centers was first proposed.

The Out-of-the-box Idea!

Microsoft hosts an annual event called ThinkWeek where it urges employees to share out-of-the-box ideas. During the 2014 ThinkWeek, an underwater data center was proposed as a potential way to provide lightning-quick cloud services to coastal populations while also saving energy.

The reasoning was that more than 50 per cent of the world’s population lives within 120 miles of the coast. With underwater data centers near coastal cities, data would have to travel a shorter distance, thereby speeding up web surfing and streaming for the masses.
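To get a feel for why distance matters, here is a rough sketch of the propagation delay over optical fiber. It assumes light travels through fiber at roughly 200,000 km per second (about two-thirds of its speed in a vacuum) and ignores routing, switching and server processing time, so real-world latency would be higher. The 2,000-mile comparison distance and the function name `round_trip_ms` are hypothetical, chosen only for illustration.

```python
KM_PER_MILE = 1.609344
FIBER_SPEED_KM_PER_S = 200_000  # assumption: about 2/3 the speed of light in a vacuum


def round_trip_ms(distance_miles):
    """Round-trip propagation delay in milliseconds over a straight fiber run."""
    if distance_miles < 0:
        raise ValueError("distance_miles must be non-negative")
    one_way_seconds = distance_miles * KM_PER_MILE / FIBER_SPEED_KM_PER_S
    return 2 * one_way_seconds * 1000


coastal = round_trip_ms(120)    # data center just off the coast
inland = round_trip_ms(2000)    # hypothetical far-away inland data center
print(f"120 miles:   {coastal:.2f} ms round trip")
print(f"2,000 miles: {inland:.2f} ms round trip")
```

The gap between the two figures is the part of the delay that shorter cable runs can remove. Everything else, such as network hops and server load, is unaffected by where the data center sits.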

The Switch to Underwater

After the idea was received and researched, phase 1 of Project Natick was conducted in the Pacific Ocean in 2015. A relatively small data center, measuring 10×7 feet and weighing 38,000 pounds, was deployed underwater for just over 3 months.

After 105 days, it was retrieved from the ocean bed and extensive studies revealed that it had delivered expected results with minimal complications and drastically less power consumption.

So in 2018, Microsoft launched phase 2 of Project Natick. This was an extensive three-year project which would involve submerging a full-fledged data center with 12 racks and 864 servers underwater. Scotland’s Orkney Islands were chosen as the location because of their popularity as a renewable energy research destination. Tidal currents of 9 miles per hour and waves that reach 10 to 60 feet high make it an intriguing site to evaluate the economic viability of a fully submerged data center.

For the next two years, researchers tested and monitored the performance and reliability of the data center’s servers. When this data center was retrieved at the start of 2020, it was noted that there were just a handful of failed servers and related cables.

Though the reasons are still being researched, the underwater data center was found to be 8 times more reliable than its on-land counterparts. So as far as Microsoft is concerned, Project Natick has been a grand success.

The War for Water

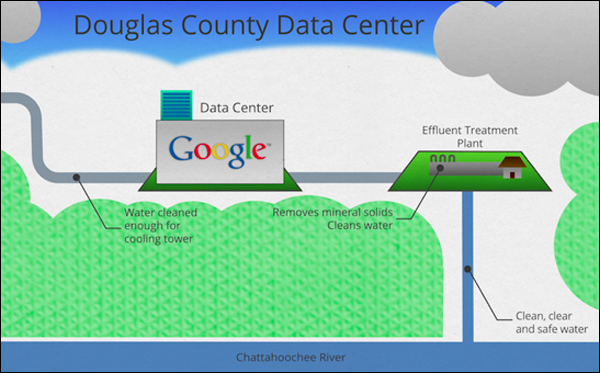

From 2007 to 2012, Google used regular drinking water to cool its data center in Douglas County, Georgia, near Atlanta. After environmental conservationists started filing lawsuits, the company switched to recycled water to help conserve the Chattahoochee River. This approach is proving difficult to replicate, however, as recycling options are not available in all the locations where it has data centers.

Google has also been very secretive about its water usage. It considers the figures a proprietary trade secret, but information continues to leak out through legal battles and lawsuits. In 2019 alone, it was reported that Google either requested or was granted over 2.3 billion gallons of water across three different states in the USA. Google, however, has insisted that it does not use all the water it is granted.

As the company continues to chase Microsoft and Amazon in the cloud-computing market, things are bound to get more complicated in the future. As of now, Google has 21 data centers but it intends to spend USD 10 billion on data centers in the next year alone.

The Future of Underwater Centers

The overall reliability of a data center in a sealed container on the ocean floor is great for environmental, economic and connectivity reasons.

Microsoft, Google and Amazon are already looking to serve customers who need to deploy and operate tactical and critical data centers anywhere in the world. Wherever possible, they are looking to deploy these centers off the coast.

Another great motivation to relocate undersea is Microsoft’s aim of being carbon negative by 2030. The company intends to do this by developing long-term cloud infrastructure. In addition, it hopes to have shifted entirely to renewable energy sources by 2025.

All in all, just like cloud computing is here to stay, so are underwater data centers. They might seem intriguing now, but it looks like they will very soon become the norm!
