The world is producing so much data that there is a real danger of running out of storage if current trends continue, writes Satyen K. Bordoloi while outlining solutions.

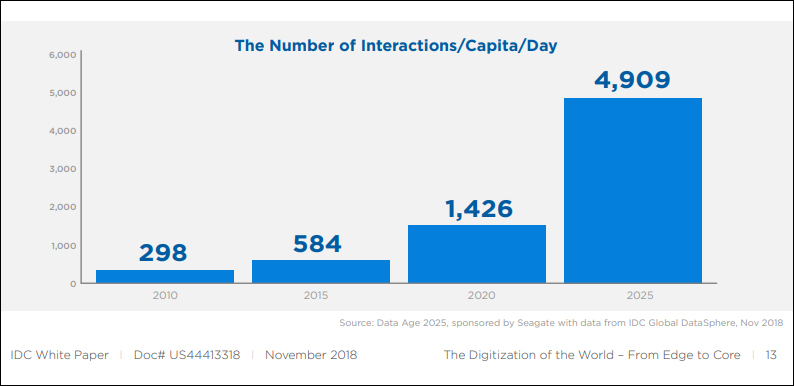

Bend the wrist, adjust the screen for an imperfectly cute frame, say ‘oooo’ (rather than smile) and press the red button on the screen to click a selfie whose bits float through cyberspace and get saved in the ‘cloud’. You didn’t think twice before doing it. But you just added your 3 megabytes (MB) to, hold your breath, the more than 2.5 quintillion bytes of data the world generated today.

Data might be the new oil, but so much of it is being generated daily that there is a serious danger of running out of space to store it. Don’t believe it? Consider these insane statistics.

At the beginning of 2020, the total amount of data in the world was estimated at 44 zettabytes (ZB). A single zettabyte is 1,000 to the seventh power bytes, or 10²¹ bytes: written out, that is a 1 followed by 21 zeros. One zettabyte is equal to around 660 billion Blu-ray discs, 33 million human brains or 330 million of the world’s largest hard drives.
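To make these decimal (SI) units concrete, the short Python sketch below builds a zettabyte from powers of 1,000, checks that it has 21 zeros, and estimates how many selfies would fill the 44 ZB figure. The 3 MB per selfie comes from the opening paragraph; the constant and helper names are illustrative only.

```python
# Decimal (SI) storage units: each step up multiplies by 1,000.
KILOBYTE = 1000
MEGABYTE = KILOBYTE ** 2
ZETTABYTE = KILOBYTE ** 7  # 1,000 to the seventh power


def count_zeros(n: int) -> int:
    """Count the trailing zeros in the decimal representation of n."""
    digits = str(n)
    return len(digits) - len(digits.rstrip("0"))


# Assumption taken from the article: one selfie is about 3 MB.
SELFIE_BYTES = 3 * MEGABYTE
WORLD_DATA_2020 = 44 * ZETTABYTE  # estimate for the start of 2020

print(ZETTABYTE == 10 ** 21)            # True
print(count_zeros(ZETTABYTE))           # 21
print(WORLD_DATA_2020 // SELFIE_BYTES)  # 14666666666666666, about 14.7 quadrillion selfies
```

Python integers have arbitrary precision, so the arithmetic stays exact even at zettabyte scale.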

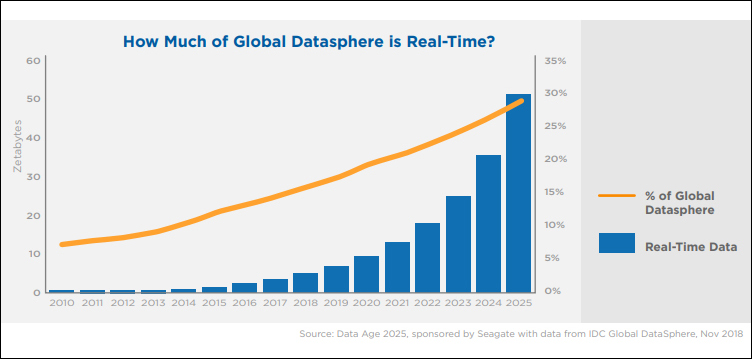

Then came the pandemic. In 2020 alone, according to an International Data Corporation (IDC) report, the world created or replicated 64.2 ZB of data. The report noted: “data creation and replication will grow at a faster rate than installed storage capacity.” In other words, we could run out of hard disk drives (HDDs) to store it all.

The saving grace for 2020 was that only “2% of this new data was saved and retained into 2021; the rest was either ephemeral (created or replicated primarily for the purpose of consumption) or temporarily cached and subsequently overwritten with newer data.”

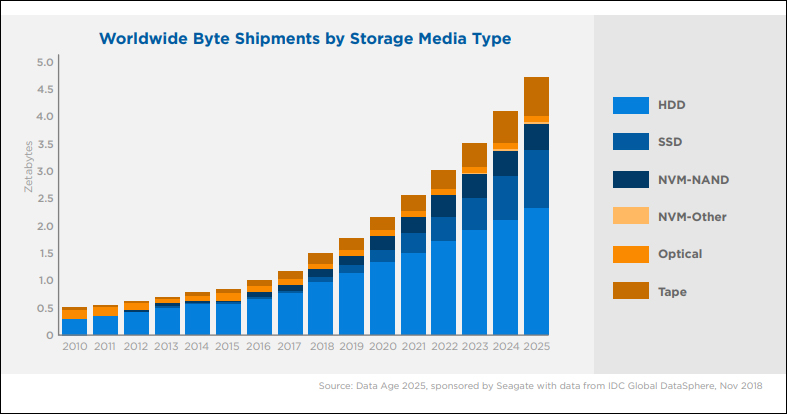

However, the volume of data worldwide is doubling every two years and is expected to reach 175 ZB by 2025, with 90 ZB of it coming from Internet of Things (IoT) devices. Data is growing at a compound annual growth rate (CAGR) of 23%, outpacing the 19.2% CAGR of global storage capacity. We have a big problem.
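A gap of under four percentage points between the two growth rates looks small, but it compounds. The Python sketch below applies the 23% and 19.2% CAGRs to the same starting index of 1.0 (a simplifying assumption for illustration; the real starting volumes of data and storage differ) and prints how far data pulls ahead of storage each year.

```python
from typing import List


def project(start: float, cagr: float, years: int) -> List[float]:
    """Return start * (1 + cagr) ** t for t = 0..years (compound growth)."""
    return [start * (1 + cagr) ** t for t in range(years + 1)]


DATA_CAGR = 0.23      # growth rate of data, per the figure cited above
STORAGE_CAGR = 0.192  # growth rate of global storage capacity

# Index both series to 1.0 in year 0 (illustrative assumption only).
data = project(1.0, DATA_CAGR, 5)
storage = project(1.0, STORAGE_CAGR, 5)

for year, (d, s) in enumerate(zip(data, storage)):
    # A ratio above 1 means data has outgrown storage relative to year 0.
    print(f"year {year}: data x{d:.2f}, storage x{s:.2f}, ratio {d / s:.3f}")
```

Over five years, data grows about 2.82-fold while storage grows about 2.41-fold, so from an equal starting point data ends up roughly 17% ahead, and the gap keeps widening every year the rates hold.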

FLASH FACTS

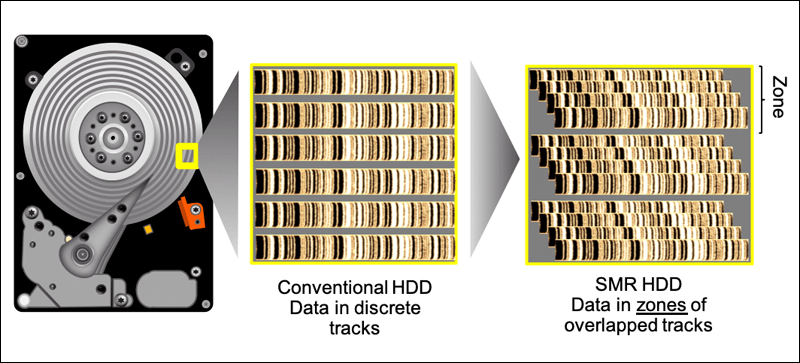

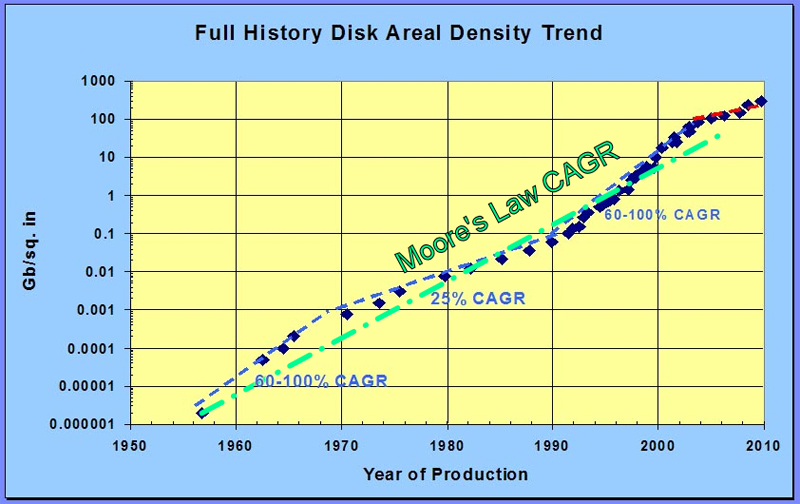

The use of faster flash storage is growing relative to hard disk drives (HDDs). However, HDDs last longer, are more reliable and are cheaper. Hence, manufacturers are working on ways to increase HDD speed and capacity. One is Shingled Magnetic Recording (SMR), which increases areal density by partially overlapping adjacent tracks, like shingles on a roof, so that more tracks fit on each platter.
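Overlapping tracks comes at a cost. Because each newly written track partially covers the one before it, rewriting a track in place would clobber the track that overlaps it, so shingled tracks are grouped into bands that are written sequentially. The toy Python model below illustrates that consequence; the `ShingledBand` class and its methods are hypothetical and greatly simplified, not how any real drive's firmware works.

```python
from typing import List, Optional


class ShingledBand:
    """Toy model of one SMR band whose tracks must be written in order.

    Writing track i overlaps (and clobbers) track i + 1, so an in-place
    update of track i forces a rewrite of every later track in the band.
    """

    def __init__(self, num_tracks: int) -> None:
        self.tracks: List[Optional[str]] = [None] * num_tracks
        self.used = 0          # number of tracks filled so far
        self.track_writes = 0  # physical track writes performed

    def append(self, data: str) -> None:
        """Sequential write: the only cheap operation on a shingled band."""
        if self.used == len(self.tracks):
            raise ValueError("band is full")
        self.tracks[self.used] = data
        self.used += 1
        self.track_writes += 1

    def update(self, index: int, data: str) -> None:
        """In-place update: rewrite track `index` and every later filled track."""
        if not 0 <= index < self.used:
            raise IndexError("track not written yet")
        buffer = self.tracks[index:self.used]  # read the tracks that will be clobbered
        buffer[0] = data
        for offset, value in enumerate(buffer):
            self.tracks[index + offset] = value
            self.track_writes += 1


band = ShingledBand(num_tracks=8)
for i in range(8):
    band.append(f"track-{i}")
print(band.track_writes)  # 8
band.update(2, "new data")
print(band.track_writes)  # 14: tracks 2 to 7 were all rewritten
```

Changing one track cost six physical writes here. This write amplification is one reason SMR works best for sequential, write-once workloads such as archives.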

SMR has its limitations, however, so manufacturers are introducing new technologies, including HAMR (heat-assisted magnetic recording), MAMR (microwave-assisted magnetic recording), OptiNAND (which augments the disk drive controller with a small flash drive to store metadata) and NVMe (Non-Volatile Memory Express).

These technologies have already increased capacity: Western Digital and Seagate have released 20 TB hard disks for cloud storage, and Toshiba has an 18 TB model, each using one or a mix of them.

Hard disks with still larger capacities are already on the way, and other approaches are being tried out, including two-dimensional magnetic recording (TDMR), which could deliver a 20% boost in density; energy-assisted magnetic recording (EAMR); and heated-dot magnetic recording (HDMR), a proposed enhancement of HAMR.

In 2020, Showa Denko (SDK), a Japanese platter manufacturer, announced the development of a new type of HAMR hard disk media that is expected to enable capacities as high as 80 TB. It uses thin films of Fe-Pt (iron-platinum) magnetic alloy, one of the most powerful magnetic materials and highly resistant to corrosion, along with a new magnetic layer structure and temperature control, to create media with higher magnetic coercivity than any existing media, SDK claimed in a release.

The other approach uses software to detect empty space and maximize use of the storage that already exists, effectively improving capacity. In storage virtualization, software identifies free space across multiple physical devices and pools it into a single virtualized resource, so that the available capacity is used optimally. By using existing storage more effectively, it increases usable capacity without the need to buy new storage. Think of it like rearranging a packed clothes shelf to discover that there’s space to hold a few more.
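A minimal sketch of the pooling idea, assuming three hypothetical disks with known free space: the pool presents their combined free capacity as one resource and satisfies a request by spreading it across devices. The device names, sizes and the greedy largest-free-space-first policy are illustrative assumptions, not the behavior of any particular virtualization product.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Device:
    name: str
    capacity_gb: int
    used_gb: int

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


class StoragePool:
    """Presents free space on several physical devices as one virtual pool."""

    def __init__(self, devices: List[Device]) -> None:
        self.devices = devices

    def free_gb(self) -> int:
        return sum(d.free_gb for d in self.devices)

    def allocate(self, size_gb: int) -> Dict[str, int]:
        """Carve a virtual volume out of free space, largest gaps first."""
        if size_gb > self.free_gb():
            raise ValueError("not enough free space in the pool")
        layout: Dict[str, int] = {}
        remaining = size_gb
        for device in sorted(self.devices, key=lambda d: d.free_gb, reverse=True):
            if remaining == 0:
                break
            take = min(device.free_gb, remaining)
            if take > 0:
                device.used_gb += take
                layout[device.name] = take
                remaining -= take
        return layout


pool = StoragePool([
    Device("disk-a", capacity_gb=1000, used_gb=900),   # 100 GB free
    Device("disk-b", capacity_gb=2000, used_gb=1850),  # 150 GB free
    Device("disk-c", capacity_gb=500, used_gb=420),    # 80 GB free
])
print(pool.free_gb())      # 330
print(pool.allocate(200))  # {'disk-b': 150, 'disk-a': 50}
print(pool.free_gb())      # 130
```

No single disk has 200 GB free, yet the pool can still serve the request by stitching together leftover space, which is the "rearranged shelf" effect described above.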

Radical notions

Ever since the dawn of the computer age, researchers have discovered new materials that store more data in smaller spaces. In 1998, there was talk of a new oxide material that used a phenomenon called colossal magnetoresistance to improve storage capacity.

In 2017, scientists at the National University of Singapore’s Department of Electrical and Computer Engineering announced that they had combined cobalt and palladium into a film that could host stable skyrmions (tiny, vortex-like magnetic structures) to store and process data.

In 2015, researchers at the U.S. Naval Research Laboratory (NRL) imparted magnetic properties to graphene and believed they had developed a method to make graphene capable of storing data with a million-fold increase in capacity over today’s HDDs. Last year, however, another study of a similar graphene-based storage technology claimed it could store only ten times more data than today’s conventional storage.

As the graphene example above shows, there is many a slip between the cup and the lip. A lot is possible in the lab, but reality hits when it is put out into the real world. The other problem is funding. Without funding to turn it into real-world applications, a new idea remains just that, as has been evident countless times over the last 200 years of scientific research. Take fax machines and lithium-ion batteries: they were first invented in 1843 and 1912 respectively, yet both took many decades to reach everyday use.

The biggest promise lies in rapid advances in quantum computing, which uses quantum mechanical phenomena such as superposition and entanglement to create not just processing devices but also, potentially, a way to store huge amounts of data as qubits (quantum bits). This is mostly at the theoretical stage, though private corporations like Google and nations like China are making giant leaps in that direction. If and when it happens, quantum computing and quantum memory will change the world’s entire computing ecosystem, especially how, and how much, data we can store. At a practical level, however, these devices could be decades away.

According to researcher Melvin M. Vopson, at today’s rate of data production, about 10²¹ digital bits every year, and assuming a 20% annual growth rate, in about 350 years the number of bits we produce will exceed the number of atoms on Earth.

Within 300 years, the power required to sustain all this production would exceed what the entire planet consumes today, and in 500 years, the mass of digital content would account for half of the planet’s mass.

In other words, an information catastrophe is on the horizon.
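The order of magnitude of Vopson’s 350-year figure can be roughly reproduced with a geometric series. The Python sketch below is a back-of-the-envelope reconstruction, not Vopson’s exact model: it assumes production starts at 10²¹ bits a year, grows 20% a year, counts cumulative bits, and takes about 1.33 × 10⁵⁰ atoms on Earth, a commonly cited estimate that is itself an assumption here.

```python
import math

BITS_PER_YEAR_NOW = 1e21  # starting annual production, per the figure above
GROWTH = 0.20             # assumed 20% annual growth
ATOMS_ON_EARTH = 1.33e50  # commonly cited estimate (assumption)


def years_until_exceeds(limit: float, start: float, growth: float) -> int:
    """Years until cumulative bits produced exceed `limit` (year-by-year sum)."""
    total, annual, years = 0.0, start, 0
    while total <= limit:
        total += annual
        annual *= 1 + growth
        years += 1
    return years


def closed_form(limit: float, start: float, growth: float) -> float:
    """Solve start * ((1 + g) ** n - 1) / g = limit for n (geometric series)."""
    return math.log(limit * growth / start + 1) / math.log(1 + growth)


loop_years = years_until_exceeds(ATOMS_ON_EARTH, BITS_PER_YEAR_NOW, GROWTH)
exact_years = closed_form(ATOMS_ON_EARTH, BITS_PER_YEAR_NOW, GROWTH)
print(f"simulated: {loop_years} years, closed form: {exact_years:.1f} years")
```

Both methods land in the mid-300s, consistent with the roughly 350 years quoted above; the exact year shifts with the atom-count estimate and with whether annual or cumulative production is compared.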

So the next time you click a selfie, take a moment to think about whether it is a photo you’d like to view a year later. If not, delete it. If each of us reduces our data storage load even a little, it will be a huge favor to global data storage capacity, and to the planet. Data sustainability is a fringe term today, but it won’t be long before it is taken as seriously as climate change.
