Nigel Pereira goes beyond the bleeding edge of data center technology to reveal the future of this revolutionary tech

If you read our previous post on the evolution of data centers, you'll remember that we ended on the idea of hybrid data centers that span multiple public clouds, private clouds, and on-premises resources.

In this post, we go a step further and talk about ‘serverless’ data centers and how they emerge from the combination of Serverless Computing and Hardware Disaggregation.

While the two are separate movements that have each gained momentum with the proliferation of cloud computing, bringing them together looks like a smart move if the end goal is a better end-user experience in the cloud.

What is Serverless Computing?

Serverless computing arose from the need to abstract away the complexity of managing cloud infrastructure. Letting developers focus on their actual application code instead of wrangling infrastructure has long been a priority for the enterprise, and Serverless pursues the same goal.

Many people call ‘serverless’ a misnomer, since servers do not magically disappear and are very much part of Serverless technology. For all practical purposes, though, there are no servers for the developer to worry about.

This is because, unlike traditional applications, Serverless apps are deployed as cloud functions: single-purpose, event-triggered microservices. Serverless also has a cost advantage over the VM-based computing that most cloud providers offer: VM customers pay for provisioned resource capacity, whereas Serverless customers pay only for the resources they actually use.

For example, with VM-based computing you typically need to reserve, and pay for, extra capacity in anticipation of a spike in usage. Serverless, on the other hand, is literally pay-as-you-go.
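To put rough numbers on that difference, here is a small Python sketch. The hourly demand figures and the price of 5 cents per unit-hour are made up for illustration, and both billing functions are simplified assumptions: real providers bill in their own units (per request, per GB-second, and so on), so treat this as arithmetic under stated assumptions rather than a pricing model.

```python
def reserved_cost_cents(hourly_usage: list[int], rate_cents: int) -> int:
    # VM model (simplified assumption): capacity is sized for the peak hour
    # and billed for every hour, whether it is busy or idle.
    return max(hourly_usage) * len(hourly_usage) * rate_cents


def pay_per_use_cost_cents(hourly_usage: list[int], rate_cents: int) -> int:
    # Serverless model (simplified assumption): each hour is billed only
    # for the units actually consumed.
    return sum(hourly_usage) * rate_cents


# Hypothetical demand (resource units per hour) with a single spike,
# and a hypothetical price of 5 cents per unit-hour.
usage = [2, 2, 3, 10, 2, 1]
rate = 5

print(reserved_cost_cents(usage, rate))     # 300
print(pay_per_use_cost_cents(usage, rate))  # 100
```

With one spike in six hours, the reserved model pays for 60 unit-hours while only 20 are actually used; the gap narrows as demand becomes steadier.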

What is Hardware Disaggregation?

Before we can explain hardware disaggregation, let's quickly recap traditional, or monolithic, servers. This is essentially the same server architecture we've been using since the 1990s, with the individual components growing more powerful over time.

Most data centers still use this model, in which each server has its own set of hardware such as CPUs, RAM, and storage, all managed by an operating system or a hypervisor. The problem is that Serverless doesn't consume resources in units of whole “servers”, so auto-scaling with traditional servers leaves a lot to be desired: capacity can only be added a server at a time, in fixed ratios of CPU, memory, and storage.

This is where hardware disaggregation comes in. It breaks down monolithic servers in much the same way microservices break up monolithic applications: instead of bundling tightly coupled resources into servers, which hinders elastic resource provisioning, hardware disaggregation splits resources up by function.

So where you once had 100 servers, each with its own memory, processors, and storage, you now have a separate network-attached pool for memory, another for storage, another for processing power, and so on. Each resource can then be allocated and managed efficiently at a granular level, which is exactly what Serverless needs.
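The difference in allocation granularity can be sketched in a few lines of Python. The server shape (16 cores, 64 GB of RAM), the pool sizes (those of 100 such servers), and the `Request`, `monolithic_allocation`, and `disaggregated_allocation` names are all hypothetical; a real disaggregated system also has to cope with network latency between pools, which this toy ignores.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    cores: int
    memory_gb: int


# Hypothetical monolithic server shape: every server bundles the same CPU and RAM.
SERVER_CORES, SERVER_MEMORY_GB = 16, 64


def monolithic_allocation(req: Request) -> dict:
    # Whole servers must be handed out until BOTH the CPU and memory needs are met.
    servers = max(math.ceil(req.cores / SERVER_CORES),
                  math.ceil(req.memory_gb / SERVER_MEMORY_GB))
    return {"cores": servers * SERVER_CORES,
            "memory_gb": servers * SERVER_MEMORY_GB}


def disaggregated_allocation(req: Request, pools: dict) -> dict:
    # Each resource is drawn from its own pool, in exactly the amount requested.
    if req.cores > pools["cores"] or req.memory_gb > pools["memory_gb"]:
        raise RuntimeError("pool exhausted")
    pools["cores"] -= req.cores
    pools["memory_gb"] -= req.memory_gb
    return {"cores": req.cores, "memory_gb": req.memory_gb}


job = Request(cores=4, memory_gb=128)        # a memory-heavy job
print(monolithic_allocation(job))            # {'cores': 32, 'memory_gb': 128}

pools = {"cores": 1600, "memory_gb": 6400}   # the resources of 100 such servers
print(disaggregated_allocation(job, pools))  # {'cores': 4, 'memory_gb': 128}
```

For this memory-heavy job, the monolithic model has to hand out two whole servers and strands 28 cores, while the pooled model takes exactly 4 cores and 128 GB and leaves the rest free for other work.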

True Serverless Data Centers

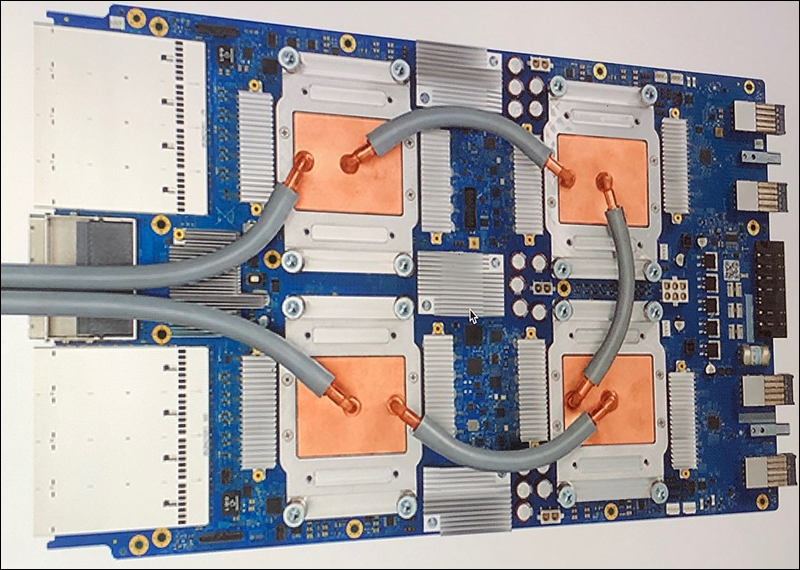

Now, coming back to those who call Serverless computing a misnomer because traditional servers are involved: disaggregated hardware could change that. We're already seeing its benefits in machine learning applications, which require a mix of computing platforms such as GPUs, TPUs, and CPUs.

For the uninitiated, Tensor Processing Units (TPUs) are Google's application-specific integrated circuits (ASICs) used to accelerate machine learning and AI workloads. While that may sound like a mouthful, TPUs are an excellent example of domain-specific hardware that fits a disaggregated model. Similarly, Intelligence Processing Units (IPUs) by Graphcore are another great example.

Yet another example is Microsoft's Project Brainwave, which uses FPGA chips to run trained neural networks (inference) in real time. Unlike traditional CPUs, these chips can be reprogrammed for the functionality a customer requires, and they don't rely on the traditional monolithic server architecture. FPGAs can also access a machine's host memory directly and, on some platforms, even the CPU cache along with the RAM.

In an ideal “disaggregated” setting, you would have a separate pool for each kind of processor, be it CPUs, GPUs, TPUs, IPUs, or FPGAs, all connected by a high-speed network that lets users expand their accelerator pools as required.
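A toy model of such per-type pools is sketched below in Python. The `AcceleratorPools` class, its `acquire` and `release` methods, and the pool sizes are hypothetical; a real system would also need scheduling, isolation between tenants, and the high-speed interconnect itself.

```python
from collections import Counter


class AcceleratorPools:
    """Toy model of network-attached pools, one per processor type (hypothetical)."""

    def __init__(self, capacity: dict):
        self.free = Counter(capacity)

    def acquire(self, kind: str, count: int) -> int:
        # Grant devices of one kind from the shared pool, or fail if too few are free.
        if self.free[kind] < count:
            raise RuntimeError(f"only {self.free[kind]} {kind}(s) free")
        self.free[kind] -= count
        return count

    def release(self, kind: str, count: int) -> None:
        # Return devices to the shared pool so other users can claim them.
        self.free[kind] += count


pools = AcceleratorPools({"CPU": 64, "GPU": 16, "TPU": 8, "IPU": 4, "FPGA": 4})
held = Counter()
held["GPU"] += pools.acquire("GPU", 4)    # start a job on 4 GPUs
held["GPU"] += pools.acquire("GPU", 4)    # scale out as demand grows
held["FPGA"] += pools.acquire("FPGA", 1)  # add an FPGA alongside the GPUs
print(dict(held), pools.free["GPU"])      # {'GPU': 8, 'FPGA': 1} 8
```

The design choice being illustrated is that a job grows by drawing more of one processor type at a time, rather than by renting another whole server that bundles types it may not need.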

True Serverless Data Centers

While Serverless computing is a great concept for flexible and efficient resource allocation, app developers are still hindered by the rigidity of traditional servers and aren't truly free to allocate the optimal amount of resources for their applications.

What we hope to see in future Serverless data centers are independent pools of resources that can be allocated based solely on requirements and scaled to exactly what an application uses.
