Awareness about deepfake videos has spread, but few know the menace that audio deepfakes can create in our lives, writes Satyen K. Bordoloi.

Actor Val Kilmer lost his voice to throat cancer in 2014. That posed a problem when he reprised his role as Iceman in Top Gun: Maverick. Though he did not have to speak much, his few lines were vital. In the end, Kilmer both did and did not speak: an AI model synthesised his voice after analysing hours of his archival recordings.

Ruth Card knew nothing about such audio deepfakes when she got a call from a lawyer saying her grandson Brandon had been arrested and needed cash for bail. As the Washington Post reported, the lawyer put him on the phone, and the panicked grandson confirmed the story. So Ruth began withdrawing cash to help her grandchild, until a bank manager stopped her by warning that she was probably being scammed.

She was one of the lucky ones. As AI makes deepfake voices both eerily accurate and cheap, impostor scams are rising. According to the Washington Post report, they were the second most popular scam in the US, with over 36,000 reports of people being swindled by someone posing as family or friends. More alarming still, over 5,100 of those incidents, amounting to more than $11 million in losses, happened over the phone.

Awareness of deepfake videos is good, but what people do not realise is that audio deepfakes are equally disruptive.

How easy it is to fake voices

ChatGPT has brought generative artificial intelligence into the public conversation. 'Generative' here means exactly that: it generates. ChatGPT gives human-like answers because it literally generates them, drawing on the vast amounts of text scraped from the internet and fed to its neural network, which allowed the machine to 'learn' how humans communicate. Every time you ask it something, it generates a response based on what it has learnt.

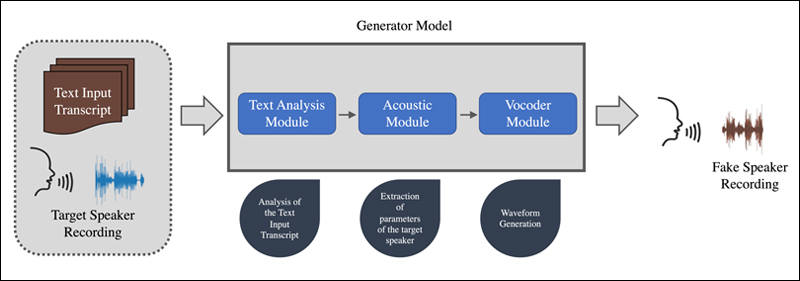

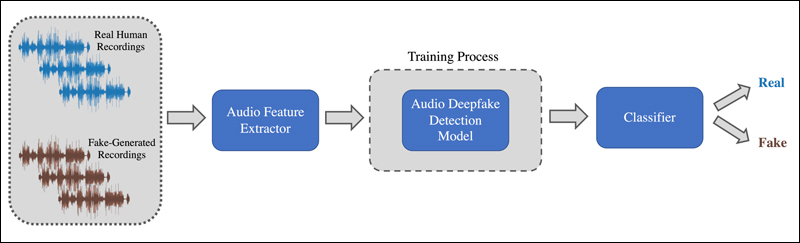

Audio deepfakes work in much the same way. The AI system was fed hours of Val Kilmer's voice from his previous movies and interviews, and it learnt his speech patterns, intonation, modulation, pauses and pitch. From that, it generated multiple voices that sounded like Kilmer, and the film crew chose the one that best suited their needs.

For scammers, the process is even easier. Let us assume you are the scamsters' target. They will scan your social media feeds for samples of your voice. Then they feed these samples into any of the dozens of deepfake speech tools, such as Listnr, Overdub, Resemble, Respeecher, ElevenLabs or Play.ht, and voilà: within minutes, they have your voice synthesised. The result may not be as accurate as Val Kilmer's voice in Maverick, but scamsters have the advantage of using it over the phone, where some loss of quality from spotty networks is expected anyway.

These AI voice synthesisers analyse every aspect of your voice to find out what makes it unique: your accent, regional inflections, quirks, and patterns of loudness and pauses. These are mapped and cloned within minutes. A 30-second clip of your voice can be enough to make a clone that passes for you on the phone.

India and deep fakes

When it comes to technology, Indians take giant leaps. We may not have created most of the world's digital technologies, or adopted them quickly enough. But once we take to them, these technologies explode in our country. We leapfrog generations and, despite a late start, catch up really fast.

Take internet and phone scams. We started late, but they have now become a sort of cottage industry in our country, with rural India taking the lead, as the Netflix series Jamtara, based on real incidents, shows. Every single one of us knows people who have fallen for such scams.

Yet, surprisingly, India has so far not reported such audio deepfake scams. That is not because scamsters are unaware of a trend that has been going on across the world for years; it has to do with the technology. AI voice analysis and synthesis technology was first created and used in the US. Hence, the voices and accents it was developed for and trained on were mostly those of Western speakers, in English. These AI systems have thus become very good at synthesising Western, English-speaking voices. When it comes to Indian voices, they enter a minefield.

First, there is the sheer number of languages in India. Then there are thousands of dialects, and atop them, tens of thousands of variations. Go to any bazaar in India and you will find more languages spoken than in almost any other corner of the world, and a single language may have more dialects than, say, the entire United States. India is full not only of people but of everything, especially languages and dialects. A majority of Indians know at least two languages, and a good number know three or more; I, for example, am conversant in five and know a few more besides. And when we speak, we mix languages unconsciously. Finding a person's voice samples in so many languages, having the AI system synthesise them all, and then making it speak in the exact same mix of languages would be a nightmare for scamsters.

Yet it is not a problem that generative AI cannot solve. It is a matter of a few years at most before voice synthesisers in multiple Indian languages become available to download and use from the web, as they already are in the US. This, however, will need the focus of Indian AI researchers and developers, who must adapt generative AI to the conundrum of voices and noises that is India.

Once that happens, on the one hand, good uses of such technology will multiply: in chatbots, by content creators, for announcements at railway stations and airports, by media organisations to automate news reading, by filmmakers and more. On the other hand, you can rest assured that our parents and grandparents will start getting calls saying that you and I are in danger and need money immediately.

It is hence important to know about these scams and to make others aware of the cascade looming over us. This could save us not only a lot of headaches but also lakhs and crores of rupees.
