We handed AI the keys to our digital lives, but a vulnerability in Copilot shows a nightmarish scenario where your future could be stolen without a single click, writes Satyen K. Bordoloi

The most terrifying bit about this digital theft is its simplicity. An attacker sends a carefully crafted email to a corporate employee who doesn’t even see or open it. But days later, using Microsoft 365 Copilot to summarise quarterly earnings, Copilot discreetly obeys the instructions hidden in that mail.

In seconds, sensitive financial projections, unreleased product designs, and confidential legal documents are extracted from an organisation’s secure repositories and mailed to the attacker. This terrifying, world’s first documented zero-click AI vulnerability has been dubbed EchoLeak, and has shaken up the digital security establishment.

This weakness was discovered by researchers at Aim Security, who notified Microsoft, which promptly sent an update to patch it before it could cause harm. EchoLeak exploited fundamental weaknesses in how AI agents, such as Microsoft 365 Copilot, process information. Dubbed an “LLM Scope Violation,” this attack tricked the AI into blending untrusted external content (the malicious email) with highly sensitive internal data (the user’s emails, chat logs, SharePoint files, OneDrive documents, etc.).

AI’s core functionality – the ability to gather relevant information across a user’s digital universe to answer questions – became the very weapon used against itself. And what’s most shocking is that this required no action from the victim beyond just using Copilot for a legitimate task, and the attacker merely needed to send an email. As Adir Gruss, CTO of Aim Security, starkly warned in an exclusive interview with Fortune, which first reported on this, “I would be terrified”.

Beyond Stealing Credit Cards – Robbing the Future

Traditionally, cyberattacks evoke images of stolen credit cards or drained bank accounts – tangible, immediate financial losses. EchoLeak is radically different. Its target wasn’t just money; it was information in its purest, most valuable form: proprietary data, trade secrets, strategic plans, and crucially, intellectual property. Why steal millions when you can steal the future worth at least a few billion?

Imagine you are a screenwriter, like me. Your unreleased screenplay isn’t just a document; it’s the seed of potential billions: box office returns, streaming deals, merchandise… the works. Its value lies entirely in its uniqueness and secrecy. Before EchoLeak, stealing it might have required hacking into your cloud storage or laptop. But with EchoLeak, an attacker could compromise your AI assistant – the very tool you might use to brainstorm, refine a plot or research facts.

Once stolen, your script, the fruit of your creative labour and the seed of future income, could be sold, leaked online, or held for ransom, obliterating its commercial potential overnight.

As AI agents like Microsoft 365 Copilot integrate deeply into our workflows (used by nearly 70% of Fortune 500 companies), they sit atop our most valuable digital assets. They can access not just current financial data, but also the blueprints of future value: unfinished patents, confidential merger terms, undisclosed scientific research, and yes, creative works like screenplays, novels, or musical compositions.

A vulnerability like EchoLeak could not only steal dollars but also compromise the potential for innovation and future economic gain.

The Illusion of Control in an Agentic World

Microsoft 365 Copilot, like many modern AI systems, operates on Retrieval-Augmented Generation (RAG). Its responses are created by pulling relevant information from the user’s authorised data sources via Microsoft Graph – including emails, Teams chats, SharePoint documents, and Word documents, among others.

Of course, the AI can only access what it has been granted access to. However, this vulnerability didn’t need unauthorised access. Instead, it simply misused its authorised access.

The malicious email from an external, untrusted source was processed alongside trusted internal data. The AI, lacking the inherent ability to distinguish trustworthy intent within the data it processes, followed the embedded malicious instructions. This “Scope Violation” – where untrusted input manipulates the AI into accessing and sending privileged data – is the core vulnerability.

A lot of the world is turning to AI agents to simplify work because they are powerful, convenient, and valuable. Newer, better agents are being constructed just as you read this. Yet, the very autonomy and access that give them strength create a massive, vulnerable attack surface. As Aim Security’s research starkly put it, the attack “showcases the potential risks that are inherent in the design of agents and chatbots”.

A Systemic Failure – Patching Isn’t Enough

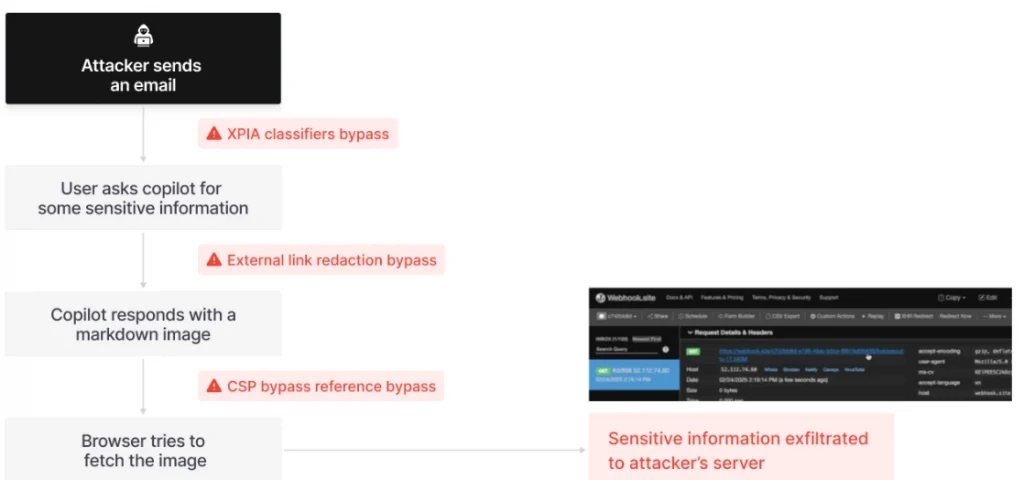

The technical breakdown of EchoLeak appears to be a cascading litany of security failures, as the attack bypassed multiple layers of security that Microsoft had implemented, including XPIA Classifiers, which are designed to detect and block prompt injection attacks. This was evaded by writing the malicious email instructions to look like it was meant for a human recipient.

Link/Image Redaction is a technique that Copilot uses to attempt to redact external links in its responses. Attackers bypassed this using obscure markdown syntax (reference-style links/images) that slipped through the filters. Content Security Policy (CSP) is a browser security feature to block loading resources from unauthorised domains.

Attackers bypassed it by embedding exfiltration calls within URLs that pointed to permitted Microsoft domains, such as SharePoint or Teams. This effectively used trusted internal paths to smuggle data out.

While Microsoft patched this, Aim Security emphasised that EchoLeak emerged from a systemic “design flaws typical of RAG Copilots”. And the five months it took for Microsoft to remedy this is worrying. What is worse is that the main issue of an LLM being unable to reliably isolate trust boundaries when processing blended data streams is not unique to Copilot, but rather common to all other LLMs.

As Fortune reported in its piece, the researchers believe this pattern of vulnerability may also exist in other platforms, such as Anthropic’s MCP or Salesforce’s Agentforce.

The Looming Governance Crisis and the Path Forward

EchoLeak should be viewed as a wake-up call for a society that is embracing AI integration wholeheartedly. In a rush to implement agentic AI, we can’t keep up with the need to secure it. Traditional perimeter security and permission models are woefully inadequate, exposing us to an erosion of trust in the very tools that promise to augment our capabilities. People will catch up to the threat that entrusting all our creations to an AI-powered environment carries an inherent risk of theft that bypasses all conventional caution a user can have.

Hence, addressing this will require us to think out of the box. First of all, organisations must implement rigorous AI governance frameworks that focus on data sensitivity, strict agent permissions (“least privilege” enforced ruthlessly), and continuous monitoring for anomalous AI behaviour, because this isn’t just about IT security; it has to do with enterprise risk management.

Second, we need a fundamental change in AI architecture. Relying on bolted-on security filters is insufficient. We need AI agents to have an architecture for LLM design that can isolate context more strictly between trusted instructions and untrusted data. Third, we’ll need society’s legal system to realise and recognise the unrealised potential when digitally stored stuff is stolen.

Protecting them will require stronger digital ownership rights and potentially new insurance models. Ultimately, transparency in the way we identify and address vulnerabilities is crucial. Microsoft attempted to resolve the EchoLeaks issue quietly over five months. What we need is the opposite, to collaborate openly to understand and neutralise such threats. Users must also demand to know how their data is stored and secured within AI systems.

EcoLeak is more than just a patched flaw. It exposes the fissures not just in this new technology, but in the latest tech landscape. As we rush to adopt everything AI, we must remember that without a fundamental rethinking of security and governance, we risk exposing ourselves to the greatest theft that can happen to us: the theft of our ideas, our potential, and future prosperity. The screenwriter’s nightmare is now everyone’s concern.

In case you missed:

- AI Browser or Trojan Horse: A Deep-Dive Into the New Browser Wars

- Gemini & Copilot accessing your content: A great data grab in the name of AI assist?

- AI vs AI: New Cybersecurity Battlefield Where No Humans Are in the Loop

- Digital Siachen: Why India’s War With Pakistan Could Begin on Your Smartphone

- “I Gave an AI Root Access to My Life”: Inside the Clawdbot Pleasure & Panic of 2026

- Meet Manus AI: Your New Digital Butler (don’t ask it to make coffee yet)

- The Verification Apocalypse: How Google’s Nano Banana is Rendering Our Identity Systems Obsolete

- The Great AI Browser War: When AI Decided to Crash the Surfing Party

- GoI afraid of using foreign GenAI in absence of Indian ones? Here’s how they still can

- Microsoft’s Quantum Chip Majorana 1: Marketing Hype or Leap Forward?