Felista Gor explores a cutting-edge technology that allows disabled people to reach beyond their physical limits…

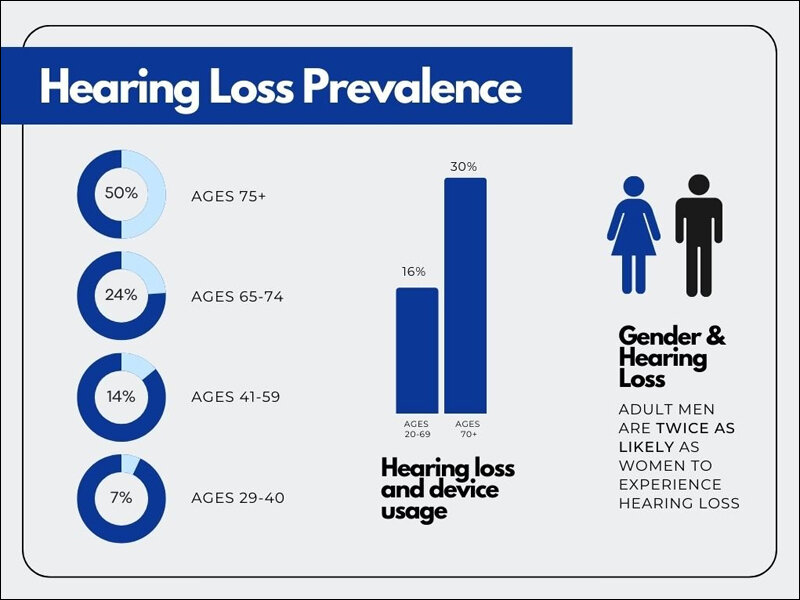

According to the World Health Organization, about 1.5 billion people worldwide live with some degree of hearing loss, and the number is expected to rise to about 2.5 billion by 2050. Many deaf people rely on sight as their primary means of communication. Sign language interpreters are therefore vital for this community, as they help deaf people keep control over their lives and understand what others are saying.

Many hearing-impaired individuals are older, often over 65 years of age. At that age, learning a new language such as sign language is harder than it is for a child or a teenager. Moreover, those who rely on sign language interpreters are limited by how many interpreters there are and when they are available. Hearing-impaired individuals are therefore forced to routinely seek out new ways to navigate a hearing world.

In search of solutions, researchers have turned to technology. So far, it has produced hearing aids and ear implants. Hearing aids are small electronic devices worn in or behind the ear that make sounds louder, so that people with hearing difficulties can hear more clearly, communicate, and participate in conversations. They are effective for many users, including some with severe hearing loss. Ear implants, such as cochlear implants, have restored a sense of sound to many patients with hearing loss. Some tech companies have also come up with smartphone apps that recognize a speaker's words and display what has been said.

Sadly, of the more than one billion people worldwide with hearing impairment, not all can be treated, let alone afford hearing aids or ear implants. The good news is that growing research into new technologies such as artificial intelligence has led companies to produce an innovation known as smart glasses.

How do smart glasses work?

Smart glasses attempt to bring the wireless connectivity and imaging we enjoy on our home computers and smartphones into the frames and lenses of our eyewear. Hearing-impaired individuals no longer need to rely solely on lip-reading, as the smart glasses let them see what the other person is saying through the lenses. The glasses give deaf or hard-of-hearing people a heads-up display of live, real-time subtitles of their conversation, right in front of their eyes. Wearers can also rewind the chat and read it again.

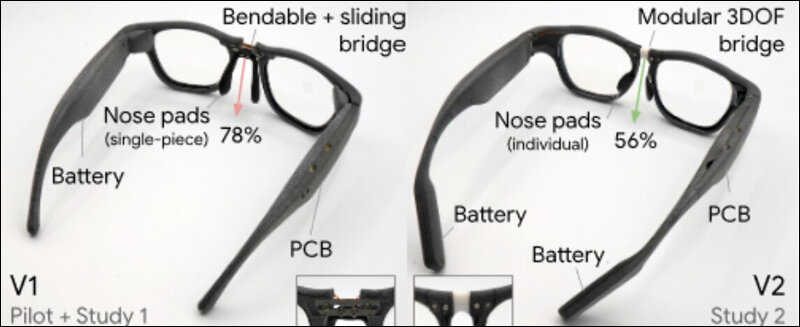

Google was the first company to develop augmented reality smart glasses for the deaf and hard of hearing. The smart glasses from Google are powered by a low-power microcontroller architecture, which allows them to run for 15 hours before needing a recharge. The electronics are located on the right side of the 3D-printed frame. The system communicates via Bluetooth and uses a smartphone's microphone and a communication protocol to write out words and sounds.

(Image Credit: Element 14)

A startup called XRAI has also developed XRAI glasses specifically for the deaf and hard of hearing. During a family gathering, XRAI's CEO, Dan Scarfe, noticed that his 97-year-old grandfather at times just sat in silence because he found it difficult to follow and join the family's conversation. Scarfe then created a way for deaf people to see live subtitles of conversations through smart glasses, so that the hard of hearing do not feel left out.

The smart glasses use augmented reality and are tethered to a smartphone application. Its artificial intelligence-driven software also acts as a personal assistant, reminding you of things you have forgotten. The glasses take an audio feed from their microphone and send it to the phone. The phone processes the audio, transcribes the speech of nearby people using Amazon's Alexa transcription service and Nreal software, and creates the graphics to be displayed on the lenses. The app can also translate nine different languages in near-real time. The basic live captioning app is free to download, with the assistant and translation services paid for through a subscription.
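For readers curious about what that phone-side loop might look like, here is a minimal Python sketch. It is not XRAI's code: the names `CaptionPipeline`, `on_audio`, `rewind`, and `fake_transcribe` are hypothetical, and the transcription step is a stand-in that simply decodes bytes to text instead of calling a real speech service. It shows only the general idea: audio comes in, a caption goes out, and recent captions are kept so the wearer can scroll back.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class Caption:
    """One line of subtitle text to draw on the lenses."""
    text: str


class CaptionPipeline:
    """Hypothetical phone-side loop: audio chunk in, caption out, with rewind."""

    def __init__(self, transcribe: Callable[[bytes], str], history_size: int = 50):
        # `transcribe` stands in for a real speech-to-text service (assumption).
        self.transcribe = transcribe
        # Only the most recent `history_size` captions are kept.
        self.history: Deque[Caption] = deque(maxlen=history_size)

    def on_audio(self, chunk: bytes) -> Caption:
        """Turn one audio chunk from the glasses into a caption and store it."""
        caption = Caption(self.transcribe(chunk))
        self.history.append(caption)
        return caption

    def rewind(self, count: int) -> List[Caption]:
        """Return up to `count` of the most recent captions, oldest first."""
        if count <= 0:
            return []
        return list(self.history)[-count:]


def fake_transcribe(chunk: bytes) -> str:
    """Placeholder: pretends the audio bytes already contain the spoken text."""
    return chunk.decode("utf-8")


if __name__ == "__main__":
    pipeline = CaptionPipeline(fake_transcribe, history_size=2)
    for chunk in (b"Hello", b"How are you?", b"Dinner is ready"):
        print(pipeline.on_audio(chunk).text)
    # Scroll back through the captions that are still in the history.
    print([c.text for c in pipeline.rewind(2)])
```

The fixed-size history is a design choice made for this sketch: it bounds memory on the phone while still letting the wearer re-read the last few lines of a conversation.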

The bottom line

Earlier solutions for the deaf and hard of hearing were quite inconvenient. Smart glasses overcome many of their limitations. For example, wearers do not have to keep watching the speaker's face, as lip-reading requires, or constantly check a smartphone to respond on time. The smart glasses are light and discreet enough to be comfortable. Wearers can also change the positioning, color, and size of the captions to suit their preferences.

Deaf and hard-of-hearing people have so much to gain from smart glasses. Modern technology keeps evolving, and there is still plenty of room for innovations that will make life better for them.

