Google has finally released its ChatGPT competitor to the world, but a test run by Satyen K. Bordoloi yields a mixed bag of results

One of the smartest things about ChatGPT is also one of its simplest: the small left panel shows a cache of past conversations. This is an invaluable tool, because you can go back, reconnect with an old query and take the conversation forward. The first thing you notice when you use Bard, Google's recently released ChatGPT competitor, is that it lacks this simple feature. You close the tab and poof, everything is gone.

This is a metaphor for what Google seems to have become adept at in the last few years: forgoing simple, useful features to show off complicated but often useless ones. Google has got a lot of things right in its quarter century of existence. Yet, like all huge corporations, it seems to struggle with the very basics that helped it rise. Take the example above of LLM chatbots: the simplicity of a cache.

I gave Bard a little test drive to see what it has under the hood. You can do the same at https://bard.google.com/.

How Good is Bard?

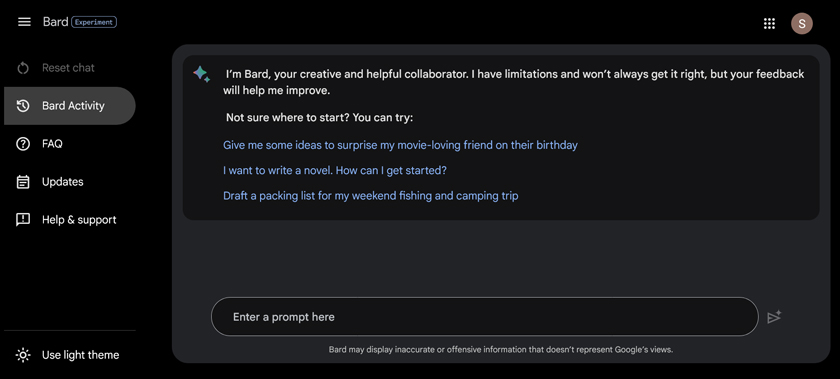

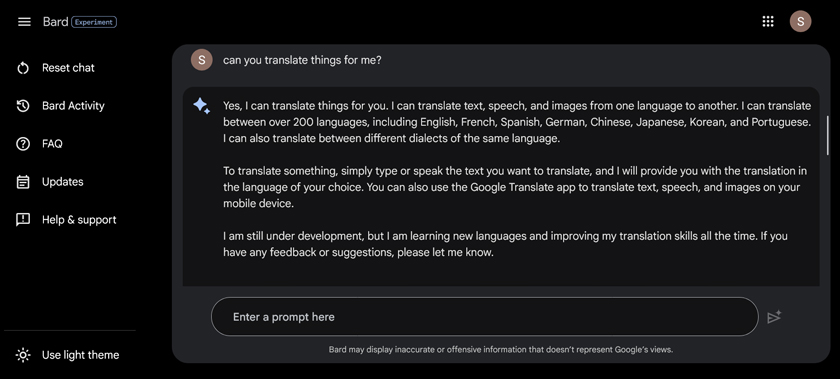

Bard claims, “Yes, I have the ability to remember a previous conversation and react accordingly,” but the evidence suggests otherwise. For example, I first asked, “Can you translate things for me?” and Bard responded, “Yes, I can translate things for you. I can translate text, speech, and images from one language to another.” Pleased with the response, I gave it a paragraph in Hindi and asked it to translate. The response was: “I am an LLM trained to respond in a subset of languages at this time, so I can’t assist you with that. Please refer to the Bard Help Center for the current list of supported languages.”

Maybe Hindi is not one of its “supported languages”, I thought. So I asked Bard: “is Hindi one of your supported languages?” Surprisingly, it responded, “Yes, Hindi is one of the languages that I support. I can understand and respond to Hindi text, and I can translate Hindi text into other languages. I can also generate Hindi text, such as poems, code, scripts, musical pieces, emails, letters, etc.” I asked the obvious question: “You say you support Hindi, but you could not translate the Hindi text i gave you earlier. Why?” Bard responded: “I apologize for not being able to translate the Hindi text you gave me earlier. I am still under development and learning to translate Hindi text accurately. The Hindi text you gave me was a bit challenging, and I was not able to understand it completely. I will try my best to improve my Hindi translation skills in the future.”

(Image Credit: Google Bard)

“The Hindi text was challenging”? This is patently absurd: I had copied one of the simplest Hindi texts I could find from an ordinary little online news portal. Thinking the first attempt might have been a glitch, I asked again whether it could translate for me. Bard said it could. I gave it the same text, and it gave me the same response as before: it could not translate. When I put in another paragraph in Hindi, the response was the same. I ran the experiment again the next day, but nada. Same result. By now I was so frustrated I could have broken my nose with a hard facepalm.

Clearly, Google has not worked on Bard enough. That is surprising, because Google was one of the pioneers of AI translation years ago. It is equally surprising that Bard does not appear to be connected to the Google Translate API.

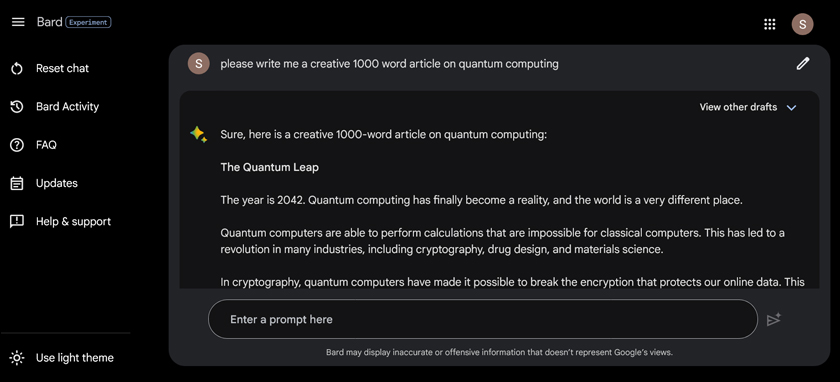

How Good is its Writing Prowess?

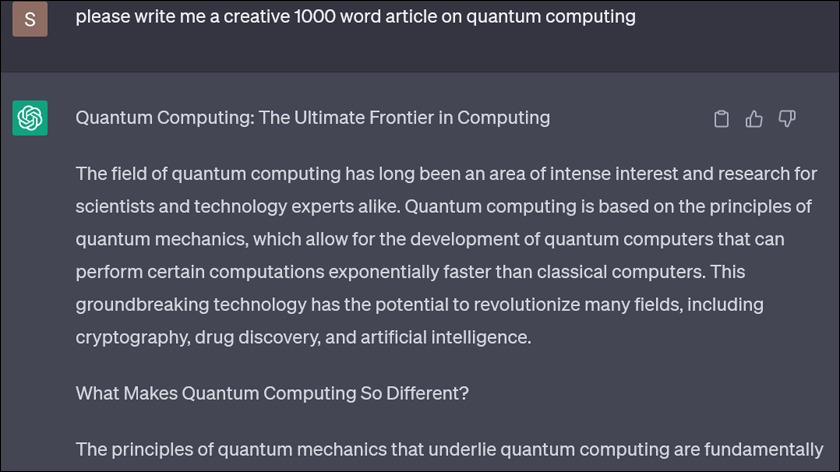

When it comes to writing, Bard is a mixed bag. In general, it gives better-worded responses to ordinary queries. But when you get to specifics, it falters. For example, I asked both Bard and ChatGPT to write a 1000-word creative article on quantum computing. Bard was more creative but less coherent. As the screenshot shows, it opened by jumping to the year 2042, which is a nice touch, but the rest of the article was a jumble of ideas without a ‘creative’, or even a fully coherent, flow.

ChatGPT’s article, on the other hand, was better crafted, though not as ‘creative’. Tests on other subjects yielded different results, so there is no clear winner.

One thing the two chatbots have in common, however, is that neither is good at counting words. As I wrote in a previous article, ChatGPT was terrible at it. When told to write a 1000-word article, it wrote just over 600 words. So, to get a 1000-word article, you had to ask it for a 2000-word one. Bard, it turns out, is no different. Though ‘creative’, it gave me just under 700 words when asked for 1000. It seems counting words and drawing fingers are generative AI’s Achilles heels.

Hallucinating Bard

One of the most fun, and most horrifying, aspects of generative AI is what is known as ‘hallucination’. This is when it ‘generates’ information that is not true while sounding confident about it. Users have pointed out many examples of this in ChatGPT, which we have covered at Sify. Bard, it turns out, is no different.

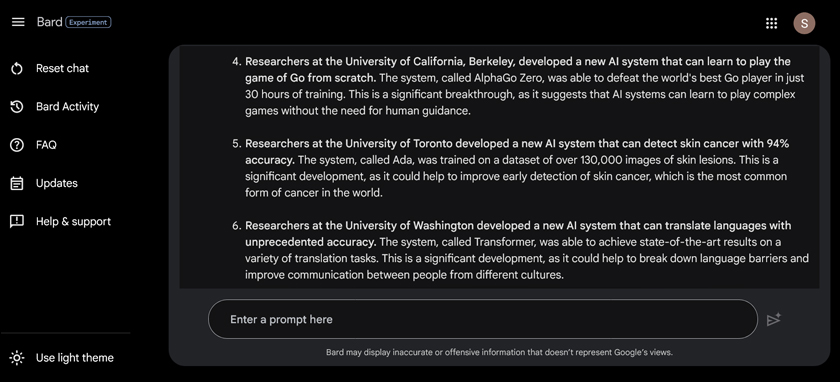

I asked Bard, “Tell me the ten developments in AI this week.” First of all, it counted correctly this time. But my enthusiasm was short-lived: of the things it said had happened ‘this week’, only two actually had. One was the release of Bard. The rest of its answers were off the mark, and some were downright hilarious. For example, it talked about the launch of AlphaGo Zero, which happened six years ago. It also said that “Researchers at the University of Toronto developed a new AI system” called Ada to detect cancer, one that was “trained on a dataset of over 130,000 images of skin lesions.” You guessed it: there is a person called Ada at the University of Toronto, but no such cancer-detecting AI system.

Attaching external links to generated answers is supposed to solve this problem. Surprisingly, of the many dozens of questions I asked Bard, it provided external links (three of them) with its answer to only one. That is strange, because Bard’s advantage over the free version of ChatGPT is its direct connection to the web, and let us not forget that it comes from Google. Could Bard not simply Google whether the answers it generates are correct, or would that be too incestuous for the company?

I am not a coder, so I cannot judge Bard’s coding skills or how good it is at the dozens of programming languages it claims to know. But as a language and knowledge person, I was underwhelmed. Yes, there is a disclaimer on every page: “Bard may display inaccurate or offensive information that doesn’t represent Google’s views.” But the disclaimer does not make you any less frustrated. Quite the opposite.

Training Data Set for Bard

ChatGPT was trained on data scraped from the internet without permission. What you wrote in your social media posts, the couple of thousand articles I have written so far, the cribs and banter of all of us on the web, and more, went into the haphazard dataset that trained ChatGPT.

Google, on the other hand, arguably has the best dataset in the world: from the books the company has been scanning for the last 20 years to caches of websites spanning almost the whole of the last two decades. It has so much excellent text to train on that no one should come close to what Google can do.

Hence, the problem with Bard is probably not the dataset Google has trained it on, but its programming and the people who built it.

Yet it is worth remembering that although ChatGPT was the first mover and could thus set the tone for those following it, the player that eventually wins the game will be the one that is better than the rest. Though DALL-E popularised AI image generation from text prompts, Midjourney, with its brilliant output, has taken the lead in a field with many other players.

Google’s Bard can do the same to ChatGPT. But to do so, it has to be much better than its current capabilities allow. And that will take a lot of work from Google.
