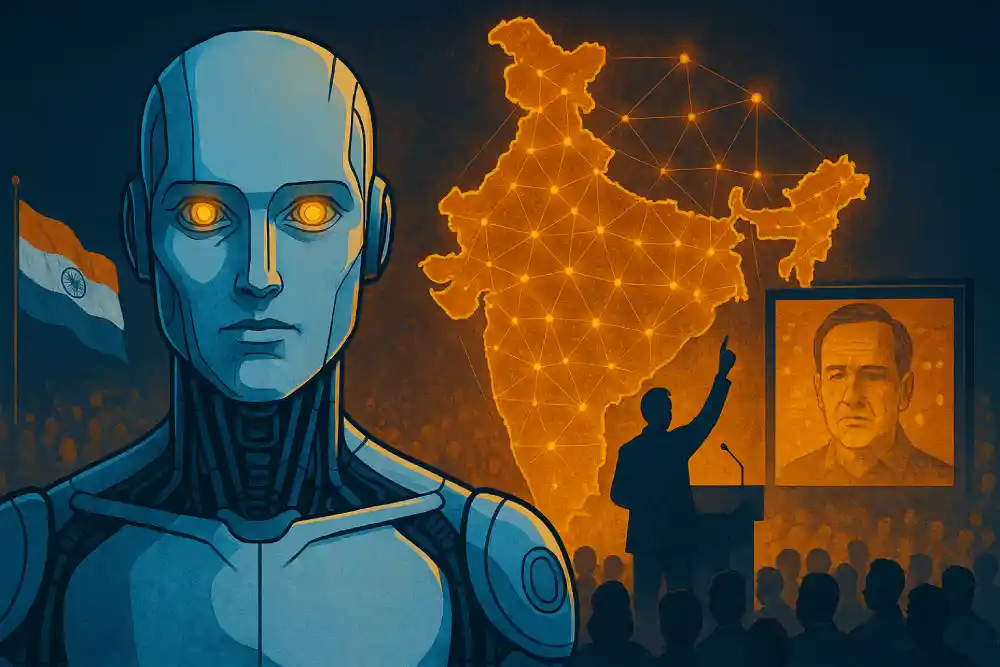

AI deepfakes are spreading faster than India’s election safeguards can keep up, and experts warn this may be the most vulnerable election cycle yet.

Artificial intelligence has entered electoral politics at a speed that regulators simply did not anticipate. Over the past year, several high-profile incidents worldwide have shown how AI tools can manufacture persuasive political misinformation with almost no cost or effort.

In January 2024, voters in New Hampshire received a fake robocall mimicking President Joe Biden’s voice, an incident confirmed by the New Hampshire Attorney General and widely reported by The New York Times. A few months earlier, in September 2023, Slovakia suffered a major disinformation surge when a deepfake audio clip circulated just before its parliamentary election, allegedly influencing voter sentiment; both Reuters and BBC News covered the fallout extensively.

These events highlight a core problem: governments are still using analog-era safeguards against digital-era threats.

India’s Online Landscape Amplifies the Deepfake Threat

India faces a sharper version of this challenge because of the scale of its digital landscape. With more than 820 million internet users and some of the world’s most active WhatsApp and Instagram populations, India provides fertile ground for rapid misinformation spread. Political outreach in India relies heavily on short videos, forwards, and influencer-style messaging, which makes the environment even more vulnerable.

Last year, multiple Indian celebrities publicly complained after AI-generated political endorsements using their voices or likenesses went viral; these cases were reported by the Indian Express and Hindustan Times. Deepfake videos of political figures speaking in regional languages also appeared across election-adjacent social media groups, prompting the Election Commission to issue advisories. No binding protocol exists yet, however.

The sheer diversity of India’s linguistic ecosystem means deepfake creators can tailor misinformation in dozens of languages within minutes, and voters often share content within trusted community networks without questioning its authenticity. This creates a risk profile that is fundamentally different from that of Western countries.

The European Union’s AI Act introduced transparency rules that require political AI content to be labeled, but they do not fully address rapid misinformation bursts during live electoral cycles. In the United States, the Federal Communications Commission recently banned AI-generated voices in robocalls, but enforcement remains uncertain because tools that create realistic synthetic voices are widely available online.

MIT Technology Review noted that detection techniques are improving, yet they remain unreliable against state-of-the-art generative models. The problem: AI can create deepfake videos almost instantly, while detection relies on forensic analysis that takes time. During politically sensitive moments such as final-phase campaigning or the last days of polling, a fake video can swing undecided voters before fact-checkers have a chance to respond.

AI Outpaces India’s Election Safeguards

India’s rules around AI and election content are still taking shape, and that leaves plenty of room for problems. The Election Commission does provide advisories on how parties and candidates should behave online, and the IT Ministry has cautioned platforms about allowing synthetic political material to spread. But India has yet to adopt stronger measures such as compulsory watermarking for AI-generated media, fast-track removal procedures, or special restrictions on synthetic campaign messages during the election window. At the same time, political groups have started using AI creatively in their outreach.

News reports in The Hindu and Mint noted recent cases where candidates relied on AI-produced voice replicas to address voters in different languages. These examples weren’t deceptive, but they show how quickly artificial content is blending into everyday political communication. When genuine and fabricated material can look and sound identical, the line between permissible campaigning and misleading manipulation becomes far harder to police.

International cybersecurity researchers are already warning that 2025–26 could be the first true “AI-manipulated election cycle.” Multiple US think tanks and independent digital forensics labs have issued assessments pointing to the same concern: generative AI has lowered the barrier to influence operations.

What once required coordinated teams, budgets, and advanced editing tools can now be executed by a single motivated individual using a smartphone. Malware-style distribution techniques, where AI-generated content mutates slightly to bypass platform filters, are beginning to appear as well, according to Google’s Threat Analysis Group reports.

When combined with India’s highly networked political communication channels, the risk is not just that misinformation will spread, but that it will evolve too quickly for existing moderation systems to contain.

AI-Driven Elections

In the end, the real issue isn’t whether AI-generated fakes will play a role in elections; that part is already certain. The concern is how much damage they can do to public confidence in the process.

India’s electoral environment is especially delicate because of its enormous online population, intense social-media activity, and increasingly fragmented political discourse. All of these factors give synthetic videos and audio a chance to influence opinion long before verification reaches ordinary voters.

Analysts cited by Reuters and researchers at the Carnegie Endowment say that minor policy guidance won’t be enough; they recommend a broader set of safeguards, including built-in identifiers for AI outputs, faster escalation channels with platforms during campaign cycles, third-party audits of powerful models, and awareness programs for citizens.

Without measures like these, misinformation networks powered by generative AI could spread more quickly and persuasively than the institutions meant to contain them. India’s situation may therefore foreshadow challenges other democracies will face as artificial media becomes routine.
