South Korea’s AI Basic Act can be seen as both a bold first step in AI governance and a move that could stifle AI development, writes Satyen K. Bordoloi

In the hugely polarised world we live in, if there is one thing on which there is near-perfect agreement, it is that everyone, besides the tech-bros of the world, wants AI to be regulated. Yet every attempt to do so has failed. Until now. Recently, South Korea became the first country to pass a comprehensive law to regulate AI.

On January 22, the Framework Act on the Development of Artificial Intelligence and Establishment of Trust, often called the AI Basic Act, came into force in South Korea. Seoul calls it the world’s first comprehensive legal framework for AI. More than just a shift in domestic policy, this legislation could also be interpreted as a strategic move by a major technology economy to influence global norms and laws and secure its position in the future of AI.

This decisive move is perhaps meant to propel South Korea into the “AI G3” alongside the US and China, and to seize the first-mover advantage in setting global standards. It comes even as advanced economies like those in the EU roll out AI legislation in stages, and the US struggles with legislative gridlock under its new style of government.

A Law Aiming to Be Both Innovative & Trustworthy

The AI Basic Act is designed to serve two ends: accelerating technological advancement and establishing essential safety guardrails. Rather than focusing solely on restrictive rules, the Act unifies 19 separate AI bills into one framework to foster a cohesive approach that actively promotes AI development through support for startups, talent acquisition, research funding, and more.

Its core is an “innovation-first” approach that allows companies to develop and deploy AI systems without prior government approval, thereby avoiding regulatory chokepoints that stifle innovation.

Despite this, it also attempts to regulate high-impact AI systems. The law defines this category as AI whose failure could harm life, property, or fundamental rights, or systems that require immense computational power to train (specifically those exceeding 10²⁶ floating-point operations). These systems are typically found in 11 specified high-risk sectors, including healthcare, employment, lending, energy infrastructure, and government services.
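To make the two triggers described above concrete, here is a minimal Python sketch of a first-pass screen. It is an illustration built only on this article's summary, not the Act's legal test: the names `AISystem`, `screening_flags` and both constants are hypothetical, the sector set holds only the five sectors named here (the Act specifies 11), and sector membership is used as a rough proxy for potential harm to life, property, or fundamental rights.

```python
from dataclasses import dataclass

# Training-compute figure quoted in the article: 10^26 floating-point operations.
COMPUTE_THRESHOLD_FLOPS = 1e26

# Assumption: only the five sectors the article names; the Act lists 11 in total.
NAMED_HIGH_RISK_SECTORS = {
    "healthcare",
    "employment",
    "lending",
    "energy infrastructure",
    "government services",
}


@dataclass
class AISystem:
    """Hypothetical record describing a deployed AI system."""
    name: str
    sector: str
    training_flops: float


def screening_flags(system: AISystem) -> list[str]:
    """Return the reasons a system might warrant a closer high-impact review."""
    flags = []
    # Sector is only a proxy for the harm-based part of the definition.
    if system.sector.lower() in NAMED_HIGH_RISK_SECTORS:
        flags.append("sector")
    # "Exceeding" is read here as strictly greater than the threshold.
    if system.training_flops > COMPUTE_THRESHOLD_FLOPS:
        flags.append("compute")
    return flags
```

An empty list from `screening_flags` would not mean a system is exempt; it only means neither of the two simplified triggers fired, which is precisely the kind of ambiguity firms say they are struggling with.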

For those operating such high-impact AI systems, the Act sets out a range of duties to ensure safety and accountability: proactively assessing potential effects on people’s rights; maintaining meaningful human involvement in critical decision-making processes; notifying people when they are interacting with, or subject to decisions made by, high-impact AI; clearly labelling AI-generated content, including images, video and audio, to combat deepfakes and disinformation; and giving people the right to request explanations for AI-generated outcomes, among others.

Key Criticisms and Implementation Concerns

Despite its forward-looking nature, the AI Basic Act has so far been met with anxiety, particularly among South Korea’s domestic tech industry and start-ups. The most common critique is that it is vague and lacks specifics. Important concepts, such as “high-impact AI”, are only loosely defined, meaning that firms may struggle to determine whether their products are subject to the most rigorous requirements under the law.

Local reports suggest that many AI startups have yet to begin the formal compliance process, given this ambiguous guidance. Others say that product launches and updates are being pushed back amid the uncertainty.

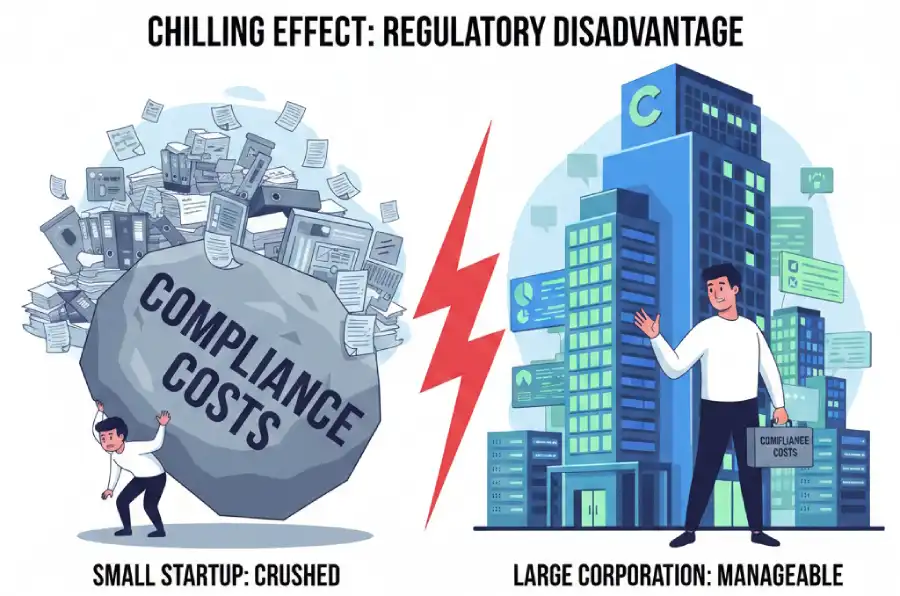

One of the biggest concerns about the law is that the cost and complexity of compliance will be particularly burdensome for smaller firms and startups, which, by definition, have fewer resources. Detractors say this may produce a “chilling effect,” in which companies become too risk-averse and hesitate to experiment and rapidly iterate on AI systems, which is the lifeblood of innovation.

Then there is South Korea’s digital regulatory landscape, which is dominated by several competing agencies: the Ministry of Science and ICT (MSIT), the Personal Information Protection Commission (PIPC), and the Korea Fair Trade Commission (KFTC). These have historically both cooperated with and contradicted one another. The AI Basic Act designates MSIT as the main regulator, but other organisations, such as the KFTC, have already released their own competition policies in the AI field, and the PIPC is tasked with proactively scrutinising AI services for data protection infringements. That means jurisdictions will overlap, and the agencies might end up issuing conflicting rules, driving up compliance costs and sowing confusion among businesses, with start-ups bearing the brunt. Without tight coordination among agencies, this could lead to excessive enforcement and a “straitjacket effect” that chills innovation in the leading-edge AI industry.

The law also has provisions for administrative fines of up to 30 million won (about $20,800) for violations such as failing to conduct a preliminary review or to inform users. To facilitate the transition, the government has granted a one-year grace period during which these penalties will not be enforced. This is welcome. Yet, civic groups continue to worry that with no meaningful independent oversight or penalties, the ethical aspirations of the law could turn out to be just rhetoric.

South Korea’s Regulatory Framework in the Global Arena

Despite the fears and criticism, South Korea’s approach stands out in a world where AI governance is largely fragmented and rapidly evolving. The EU’s approach is largely precautionary and rights-based, with strict tiered regulation tied to risk levels. US laws are sectoral, with Colorado and California leading the way and executive orders adding to the mess, though the overall approach is mostly light-touch and decentralised. China, on the other hand, has focused on administrative regulations aimed at sovereignty and stability, which means it demands greater content control from AI companies.

This shows that South Korea’s approach is unique, as it seeks to be both a promoter and a regulator of AI. Globally, a clear convergence is emerging in the regulation of AI in high-stakes sectors such as employment, finance, and healthcare, with common expectations for risk assessments, oversight, and transparency. South Korea’s law thus places it within this trend but executes it through a distinctly national lens, prioritising its economic competitiveness alongside ethical safeguards.

South Korea’s AI Basic Act is a landmark achievement in global AI governance, demonstrating a sophisticated understanding that leadership in the AI era requires setting the rules, not just mastering the technology. And by acting first, South Korea is trying to position itself as a laboratory for regulatory experimentation that could lead to the export of its governance model.

Yet, the law is likely “not enough” in many ways. Firstly, it is challenged by Korea’s complex regulatory bureaucracy, which means that effective implementation will depend on unprecedented coordination among MSIT, the KFTC, the PIPC, and other bodies to avoid a tangled web of compliance that stifles innovation. Secondly, the success of its “innovation-first” pillar depends on clarifying the vague provisions that paralyse startups. Guidance during the grace period will be critical.

Ultimately, the AI Basic Act is just the first salvo fired in the long battle to regulate AI and its companies. The true test will not be the speed with which it was enacted, but how adaptive it is and how responsive the government proves to be. For the Act to work, South Korea’s regulators must show that they can refine the law quickly enough to both regulate and foster a climate of trust, so AI breakthroughs can emerge despite the law. And if the Act does work, that will be its true success, for then it will have proven that regulation is not anathema to AI innovation, but perhaps a prerequisite for it.
