Not content with making chips that shape our future, NVIDIA is trying to create and own our autonomous vehicle future with a new platform and multiple open-source tools, finds Satyen K. Bordoloi

NVIDIA became the world’s first company to reach $4 trillion in market capitalisation and, in October 2025, the first to reach $5 trillion, having raced past the $1 trillion, $2 trillion and $3 trillion marks along the way. It did this by riding the data centre boom driven by the surge in generative AI use over the last three years. But owning the cloud is not enough: NVIDIA is making a parallel, monumental bet on the physical world, driving full-throttle into the world’s autonomous vehicle (AV) ecosystem.

To many, this might seem a diversion from its hugely profitable core chip business. In truth, it is more likely an ambitious expansion of that business, an attempt to secure NVIDIA’s future, because the same technology extends beyond vehicles into robotics.

Through initiatives like the newly launched Alpamayo family of AI models and its comprehensive DRIVE platform, NVIDIA is methodically building the foundational infrastructure not just for the next era of transportation but for robotics as well, because, let’s be honest, an autonomous vehicle is nothing if not a robot on wheels.

This points to a strategic vision in which AI leaves well-guarded data centres for the edge, where it navigates the last mile of AI use, figuratively and literally. If it works, it could reshape not just NVIDIA’s future but the very essence of our planet’s mobility and robotics business.

A Calculated Expansion

It would be wrong to view NVIDIA’s foray into autonomous driving as a narrow side street. It is a broad highway, a strategic push that both starts from and builds on its core strengths. In its most recent quarter, NVIDIA reported a whopping $57 billion in revenue, driven primarily by its Data Centre segment, which alone brought in over $51 billion.

CEO Jensen Huang described a “virtuous cycle of AI,” with demand accelerating across both training and inference. It is therefore fair to ask: why venture into the complex, capital-intensive automotive sector, one that has seen even old-world automobile giants crash?

The answer lies in the very nature of the opportunity. An autonomous vehicle is the ultimate edge AI device: a data centre on wheels that must perceive, reason, and act in real time amid the unpredictable chaos of the street. For NVIDIA, this is therefore not a departure but a horizontal expansion of its total addressable market.

By providing the end-to-end “AI factory” for vehicular autonomy, from cloud-based training on DGX systems to photorealistic simulation in Omniverse and safety-certified in-vehicle compute with DRIVE AGX, NVIDIA has positioned itself across the entire autonomous driving stack. The automotive segment is still a tiny portion of its revenue, at $592 million last quarter, but it is a gateway into the broader “physical AI” economy, which could come to dominate the world and spans robotics, industrial automation and much more.
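
For readers who want to check the proportions, the quarterly figures quoted above translate into revenue shares with a few lines of Python. This is a minimal sketch that simply hard-codes the article’s rounded numbers, so the percentages are approximate:

```python
# Quarterly revenue figures as quoted in this article, in US$ billions.
# "Over $51 billion" is treated as exactly 51, so the data centre share is a lower bound.
TOTAL_REVENUE = 57.0
DATA_CENTRE_REVENUE = 51.0
AUTOMOTIVE_REVENUE = 0.592  # $592 million


def share(segment: float, total: float) -> float:
    """Return a segment's share of total revenue as a percentage."""
    return 100.0 * segment / total


print(f"Data centre: {share(DATA_CENTRE_REVENUE, TOTAL_REVENUE):.1f}%")
print(f"Automotive:  {share(AUTOMOTIVE_REVENUE, TOTAL_REVENUE):.1f}%")
```

It prints about 89.5% for the data centre segment and about 1.0% for automotive, which shows just how small the automotive business is today next to the data centre one.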

Within a decade, I expect that barely a car will be produced without some form of autonomous driving capability.

Engineering a Brain for the Car With the DRIVE Platform

NVIDIA’s approach rests on a full-stack, safety-first platform. The idea is not just to sell more, and more powerful, chips, but to provide a complete, validated ecosystem that reduces risk and accelerates development for anyone building a mobility device.

Think of it this way: a small car manufacturer in India might not have the deep pockets to develop its own proprietary systems, but it can adopt NVIDIA’s. This could also give birth to a Qualcomm-style model in the autonomous vehicle space, in which NVIDIA licenses its systems and technology for a small royalty.

At the very heart of this in-vehicle system is the NVIDIA DRIVE AGX compute platform. Its latest iteration is powered by the DRIVE AGX Thor system-on-a-chip (SoC), which is built on the Blackwell architecture to deliver immense processing power. A single DRIVE Hyperion 10 reference architecture incorporates two Thor SoCs, providing more than 2,000 FP4 teraflops to run transformer-based perception, complex neural networks, and the reasoning models essential for Level 4 autonomy.

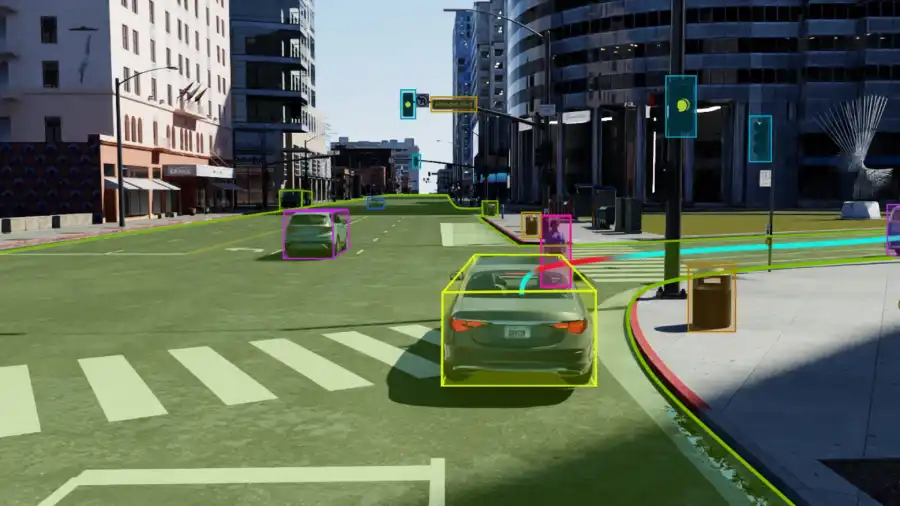

A centralised compute powerhouse of this kind is essential because it must fuse data arriving from an array of sophisticated sensors, not just multiple cameras but also radar, lidar, and ultrasonic systems, into a 360-degree, real-time picture of the vehicle’s surroundings, and then make decisions, sometimes within a fraction of a second.

This hardware is integrated into NVIDIA DRIVE Hyperion, the company’s production-ready reference architecture: a blueprint for making vehicles what NVIDIA calls “Level 4-ready by design.” It provides automakers with a pre-validated combination of sensors, computers, and software, slashing years from their development cycles. Companies like Lucid, Mercedes-Benz, and JLR are leveraging this platform to build their autonomous futures.

A key enabler of this ecosystem is the expanding list of partners, including Bosch, Magna, Hesai, and Sony, that qualify their sensors and components on Hyperion, ensuring compatibility and reliability for manufacturers.

Using Alpamayo to Teach Cars to Think

In early January, NVIDIA launched what may be the most important piece of the autonomous vehicle puzzle: Alpamayo. The biggest challenge for AVs, and arguably the reason Elon Musk has not delivered a fully autonomous vehicle despite promising one for over a decade, is the “long tail” of rare, complex driving scenarios: edge cases that are difficult to program for, yet dangerous to encounter untested. NVIDIA tries to address this by bringing chain-of-thought reasoning to driving through Alpamayo.

NVIDIA bills Alpamayo 1 as the industry’s first open-source vision-language-action (VLA) model built specifically for this task. The 10-billion-parameter model processes video and sensor inputs to generate not just a driving trajectory but a “reasoning trace”: a step-by-step textual explanation of the logic behind its decisions and actions.

Jensen Huang said that this allows vehicles to “think through rare scenarios, drive safely in complex, even previously unencountered environments and even explain their driving decisions”. This transparency is vital for safety validation, for regulatory approval, and for building public trust.
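
To make the idea of a trajectory paired with a reasoning trace more concrete, here is a hypothetical sketch of how such an output could be represented in Python. The class names, fields and the cyclist scenario are illustrative assumptions, not Alpamayo’s actual output format or API:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Waypoint:
    """One future point on the planned path, relative to the car's current position."""
    t: float  # seconds from now
    x: float  # metres ahead
    y: float  # metres to the left (negative = right)


@dataclass
class DrivingDecision:
    """Hypothetical VLA output: where to drive, plus the reasoning behind it."""
    trajectory: list[Waypoint]
    reasoning_trace: list[str] = field(default_factory=list)

    def explain(self) -> str:
        """Render the reasoning trace as numbered, human-readable steps."""
        return "\n".join(
            f"{i}. {step}" for i, step in enumerate(self.reasoning_trace, start=1)
        )


# Illustrative values only; a real model would produce these from camera and sensor input.
decision = DrivingDecision(
    trajectory=[Waypoint(0.5, 4.0, 0.0), Waypoint(1.0, 7.5, 0.8), Waypoint(1.5, 10.5, 1.5)],
    reasoning_trace=[
        "A cyclist is riding near the right edge of the lane ahead.",
        "The oncoming lane is clear for at least the next 50 m.",
        "Shift left to pass with about 1.5 m of clearance, then return to the lane.",
    ],
)
print(decision.explain())
```

Keeping the explanation as a list of discrete steps alongside the waypoints is what would let an engineer or regulator audit why the car chose a path, not just which path it chose.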

Alpamayo is not just a model but a full open ecosystem. It also includes AlpaSim, a fully open-source simulation framework for high-fidelity testing, and the massive Physical AI Open Datasets, with more than 1,700 hours of diverse driving data. Together, these enable a self-reinforcing development loop: models are trained on both real and synthetic data, tested exhaustively in simulation, and improved continuously. The ecosystem also plugs into NVIDIA’s broader physical AI tools, such as the Cosmos platform, whose World Foundation Models generate and understand complex simulated environments for training.

The Indispensable Foundation of Safety and Simulation

NVIDIA says every layer of its AV stack is built around safety, embodied in the NVIDIA Halos system. The company has invested substantially in Halos, a comprehensive, full-stack safety framework that extends from the data centre to the vehicle itself. It combines functional safety mechanisms, cybersecurity protocols, and development tools aligned with rigorous international standards such as ISO 26262, up to its highest integrity level, ASIL D. In effect, Halos serves as the guardrails for the entire AV ecosystem.

Equally important is simulation, built on NVIDIA Omniverse. Validating the safety of an AI driver would take billions of miles of testing, which is practically impossible to complete on real roads. Omniverse creates physically accurate digital twins of the real world, enabling AV software to be tested across millions of scenarios, including countless variations in weather, lighting, and rare edge cases.
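
A back-of-the-envelope calculation shows why real-world mileage alone cannot do the job. The fleet size, driving hours and average speed below are assumptions chosen for illustration, not NVIDIA figures:

```python
# Hypothetical test fleet: every number here is an illustrative assumption.
FLEET_SIZE = 100         # vehicles
HOURS_PER_DAY = 24       # driving round the clock, no downtime
AVERAGE_SPEED_MPH = 25   # plausible mixed urban/suburban average
DAYS_PER_YEAR = 365


def years_to_drive(target_miles: float) -> float:
    """Years the fleet would need to accumulate target_miles of real-world driving."""
    miles_per_year = FLEET_SIZE * HOURS_PER_DAY * AVERAGE_SPEED_MPH * DAYS_PER_YEAR
    return target_miles / miles_per_year


print(f"{years_to_drive(1e9):.0f} years for one billion miles")
```

Even a hundred cars driving non-stop would need roughly 46 years to log a single billion miles, which is the gap simulation is meant to close.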

Synthetic data generation, driven by Cosmos models, makes scaling autonomy feasible by letting developers expose their systems to dangerous situations, such as a dog darting into the street in front of a car or a sudden obstacle appearing in a raging blizzard, all within the safety of a virtual environment.
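
How a few variables multiply into a large test matrix can be sketched with a simple parameter sweep. This is generic Python, not Omniverse or Cosmos code, and the weather, lighting and hazard lists are hypothetical:

```python
from itertools import product

# Hypothetical scenario dimensions; a real simulator exposes far more
# parameters (many of them continuous), but the combinatorics work the same way.
WEATHER = ["clear", "rain", "fog", "blizzard"]
LIGHTING = ["noon", "dusk", "night"]
HAZARDS = ["none", "dog crossing", "sudden obstacle", "stalled truck"]


def scenarios():
    """Yield every combination of weather, lighting and hazard as a dict."""
    for weather, lighting, hazard in product(WEATHER, LIGHTING, HAZARDS):
        yield {"weather": weather, "lighting": lighting, "hazard": hazard}


all_scenarios = list(scenarios())
print(len(all_scenarios))  # 4 * 3 * 4 = 48
```

Each extra dimension, such as road surface or traffic density, multiplies the count again, and sampling continuous values like fog density or obstacle distance instead of fixed labels is how a virtual test suite grows into millions of variants.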

Implications for NVIDIA and the World

NVIDIA’s AV push is a well-planned, well-timed move with profound potential implications. For the company, it could secure a dominant position in what is likely to become the next colossal wave of AI adoption. The automotive and broader physical AI markets are a new growth frontier beyond cloud data centres. Success here would transform NVIDIA from a component supplier into the indispensable architect of our autonomous age, with its platforms becoming the nervous systems of not just vehicles, but also robots and factories worldwide.

For the world and the automotive industry, the implications could be just as transformative. NVIDIA’s open, full-stack platform drastically lowers the barrier to entry for developing safe autonomy, opening AV potential to car companies worldwide. This means that legacy automakers like Mercedes, as well as mobility players like Uber, which quit its own AV venture a few years ago and has now partnered with NVIDIA to scale a Level 4-ready mobility network, can focus on their unique products, services or software rather than attempt to reinvent the underlying AI infrastructure, which might not be feasible for them individually.

The promise of AV is the promise of safer roads that could dramatically reduce the roughly 1.2 million traffic deaths recorded worldwide each year, help reclaim billions of hours lost to commuting, and revolutionise logistics and mobility for the elderly and disabled. Furthermore, systems like the DRIVE Hyperion platform are being applied not just to passenger cars but to long-haul freight, in an effort to bring safe self-driving capabilities to the entire spectrum of commercial transport.

The road to full autonomy is laced with technical, regulatory, and ethical challenges. NVIDIA’s platform provides the tools, but it is the industry that must collectively solve for real-world deployment, public acceptance, and evolving legal frameworks. Still, by providing a unified, open, and safety-certified foundation, NVIDIA could do more than sell technology: it could seed an entire ecosystem capable of turning the world’s ageing, human-driven fleets into agile, self-driving ones. That future will almost certainly come, and NVIDIA is pushing to own most of it.
