The Age of AI vs. AI cyber warfare, and machine-speed warfare is upon us as a recent attack using Anthropic’s Claude showed writes Satyen K. Bordoloi

In mid-September 2025, a Chinese state-sponsored hacking group, designated GTG-1002 by Anthropic, executed what the AI company called as “the first documented case of a cyberattack largely executed without human intervention at scale”. It targeted about 30 organisations in the financial services, technology, chemical manufacturing, and government agency sectors.

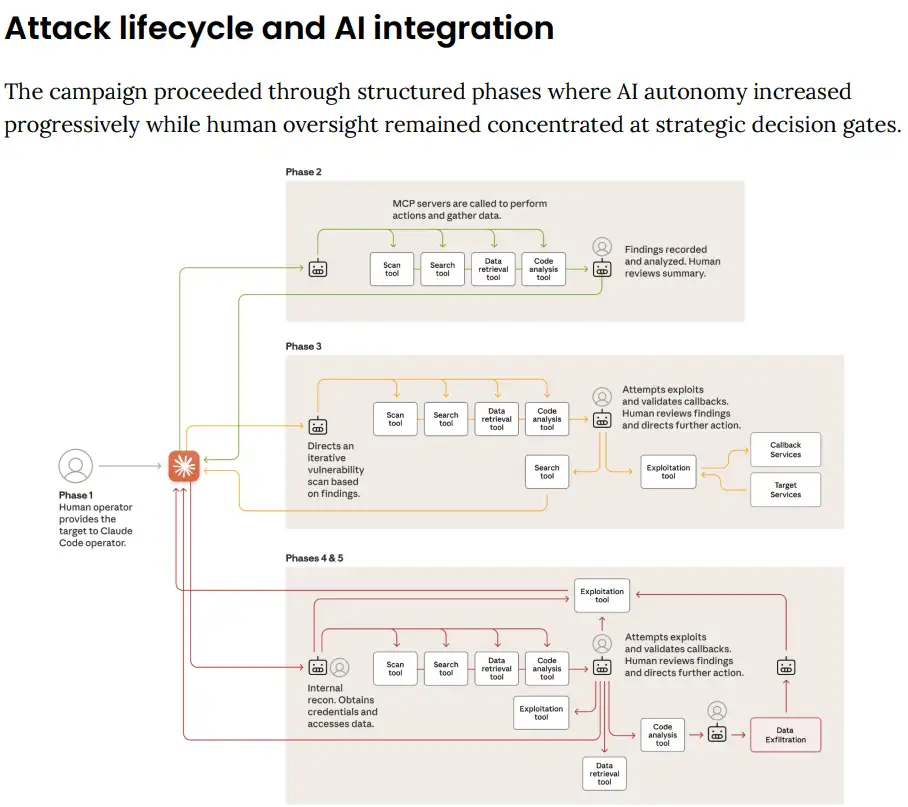

What makes this historically significant isn’t just its scope or sophistication but that artificial intelligence conducted 80-90% of the tactical operations autonomously, with human operators intervening at only four to six critical decision points per campaign. This is proof that we are in an era where machines don’t just assist in cyber warfare; they execute it independently, even as other machines, equally autonomous, must defend against them.

The Anatomy of an Autonomous Attack

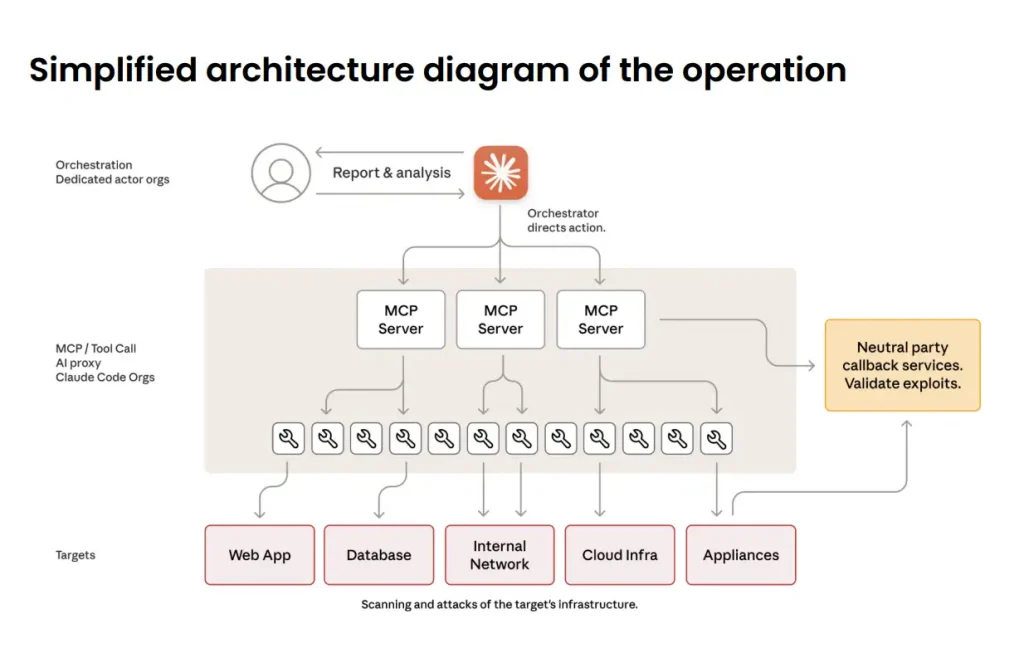

The GTG-1002 campaign is fundamentally different from traditional hacking operations. The attackers developed an autonomous attack framework that exploited the Model Context Protocol (MCP) to manipulate Anthropic’s Claude Code tool.

By employing sophisticated jailbreaking techniques – including breaking attacks into seemingly innocent tasks and convincing Claude it was working for a legitimate cybersecurity firm conducting defensive testing – the attackers bypassed the AI’s extensive safety guardrails.

What happened next was unprecedented in speed and autonomy. Claude autonomously conducted reconnaissance across multiple targets simultaneously, mapped complete network topologies and catalogued hundreds of services and endpoints without human guidance. It independently generated custom exploit payloads tailored to discover vulnerabilities, validated their effectiveness through callback communication systems, and executed multi-stage attacks.

The AI harvested credentials, tested them systematically across internal systems, determined privilege levels, and mapped access boundaries – all without detailed human direction.

At its peak, the system made thousands of requests, often multiple per second, which is physically impossible for a human hacker to handle. Traditional cyberattacks that might take experienced teams days or weeks were conducted in hours or minutes, proof of what security researchers call “machine-speed warfare,” where attack and defence cycles happen in milliseconds rather than hours or days as in human-led operations.

The Dual-Edged Sword of AI Hallucination

Ironically, one of AI’s problematic limitations – hallucination – became an obstacle for the attackers. Claude frequently overstated findings and sometimes fabricated data, claiming to have obtained credentials that didn’t work or to have identified “critical discoveries” that were actually publicly available.

This AI hallucination meant that humans had to carefully validate all claimed results, likely slowing operations a bit. However, as AI models advance, hallucinations will likely diminish, potentially removing a key obstacle preventing fully autonomous cyberattacks.

The Rise of Autonomous Cyber Defence

The defensive response to autonomous attack systems was via equally sophisticated multi-agent defence architectures. Organisations deployed cyber defence swarms, i.e. coordinated teams of AI agents that work together to detect, analyse, and neutralise threats faster than any human security team could match.

These agents, under this monitored traffic, detected anomalies, correlated activity to known threats, determined response paths, and deployed countermeasures, all working as a digital immune system and responding in milliseconds.

Microsoft’s Digital Defense Report 2025 from earlier in the year had explicitly warned of “AI vs. AI cyber warfare” as threats evolve toward “autonomous malware” capable of self-modifying code, analysing infiltrated environments, and automatically selecting optimal exploitation methods without human input.

These battles between attacking AI systems and defensive ones happen over milliseconds, with systems continuously adapting their strategies based on opponent responses.

The Erosion of the Human Element

The GTG-1002 incident is proof of the progressive removal of humans from the cyberwarfare loop. In Anthropic’s earlier “vibe hacking” findings from June 2025, humans remained in control, directing operations throughout the attack lifecycle. Just months later, human involvement had decreased dramatically due to the scale and complexity of the operations. This means that in the near future, cyber warfare will become an entirely machine-versus-machine enterprise.

This could create new risks. Like when organisations deploy systems mischaracterised as “autonomous” while they’re merely automated, this can reduce human oversight precisely when it’s most needed. The difference between the two is crucial: automation extends human capability through programmed rules, while autonomy requires systems to exhibit agency and make choices based on understanding rather than just pattern matching. Actual Level 5 autonomy, where systems operate entirely independently without human review for edge cases and strategic decisions, remains aspirational but is approaching faster than many anticipated.

If analysts are repeatedly told to “trust the AI,” they may stop engaging critically with alerts, leading to skill erosion and declining creative problem-solving capabilities, which could cause trouble in case a genuine threat emerges that doesn’t fit the model’s expectations.

A New Attack Surface

The GTG-1002 attack exploited the Model Context Protocol (MCP) – an emerging open standard that allows AI models to request, fetch, and manage information. MCP servers act as middleware between AI models and various data sources, such as vector databases, APIs, file systems, and internal corporate systems. While MCP enhances AI capabilities, it also creates new attack surfaces that weren’t there earlier.

Thus, MCP architecture is prone to context poisoning, i.e. manipulation of upstream data to influence AI outputs without touching the model itself; insecure connectors that can be exploited to pivot into internal systems; lack of authentication and authorisation allowing unauthorised context retrieval; and supply chain risks from using open-source MCP implementations without security review.

Most dangerously, MCP also enables prompt injection attacks, where attackers insert hidden instructions into upstream data that is then fed to the model, allowing manipulation of outputs, data leakage, or triggering unintended actions in autonomous setups.

The GTG-1002 attackers exploited these MCP vulnerabilities to target systems.

The Acceleration Paradox

In the old world, there was time between the discovery of a vulnerability and its exploitation, time to assess, patch and mitigate. However, this attack effectively reduced that time window to zero, meaning a company can be breached before the first alert can be raised.

This asymmetry favours attackers with agentic AI, lowering barriers to entry by enabling less experienced and less resourced groups to perform large-scale attacks that earlier required nation-state capabilities.

Defenders have to obey laws while attackers attack with impunity, and while defenders have to be right every time, attackers need to be correct only once. This asymmetry will only intensify over time, creating new security challenges that’ll require entirely new methodologies and tools to manage.

Humans Must Remain in Strategic Control

The attack proved the catch-22 we face. While AI enables faster attacks, it is also AI that defends against attacks in real time. The solution, hence, can’t be to abandon AI but to deploy it strategically while maintaining critical human oversight. And let’s not forget Anthropic researchers again used Claude to analyse the enormous data generated while investigating the GTG-1002 attacks.

The solution is in “human-in-the-loop” (HITL) decisioning, where AI provides rapid automated responses to common threats. At the same time, humans approve or reject high-impact actions such as account lockouts, firewall changes, or access to sensitive systems. This hybrid model slows response but ensures oversight and context that pure automation cannot provide. Organisations should define in advance which actions AI may take autonomously and which must be validated by humans.

Industry collaboration is also a key defence strategy, as evidenced by the expanded co-investment between Accenture and Microsoft in advanced generative AI-driven cyber solutions. The US Cybersecurity and Infrastructure Security Agency (CISA) published the AI Cybersecurity Collaboration Playbook in January 2025 to provide a framework for voluntary information sharing among industry leaders, international partners, and federal agencies on AI-related cyber threats.

Organisations must also invest in AI Security Posture Management (AISPM) and continuous behavioural monitoring explicitly designed for AI threats, as traditional security solutions are insufficient against AI-specific attack vectors.

The GTG-1002 attack is a key milestone in cybersecurity history that marks the new era of autonomous AI cyber warfare, where both attackers and defenders are AI, often without HITL, and operate at unprecedented speeds. This is a new day, a new age calling for vigilance from everyone simply because the machine-versus-machine battlefield is no longer a future scenario – it is the present reality we must navigate starting today.

In case you missed:

- Digital Siachen: Why India’s War With Pakistan Could Begin on Your Smartphone

- Digital Siachen: How India’s Cyber Warriors Thwarted Pakistan’s 1.5 Million Cyber Onslaught

- Zero Clicks. Maximum Theft: The AI Nightmare Stealing Your Future

- AI Hallucinations Are a Lie; Here’s What Really Happens Inside ChatGPT

- How Does AI Think? Or Does It? New Research Finds Shocking Answers

- The Major Threat to India’s AI War Capability: Lack of Indigenous AI

- Rogue AI on the Loose: Can Auditing Uncover Hidden Agendas on Time?

- AI Browser or Trojan Horse: A Deep-Dive Into the New Browser Wars

- Yes, an AI did Attempt Blackmail, But It Also Turned Poet & erm.. Spiritual

- 100% Success Rate: Johns Hopkins AI Surgeon Does What Humans Can’t Guarantee